Preface

© 2021 Vector Informatik GmbH - All rights reserved - https://www.vector.com/ - This material may not be reproduced, displayed, modified or distributed without the express prior written permission of the copyright holder. Squore is protected by an Interdeposit Certification registered with Agence pour la Protection des Programmes under the Inter Deposit Digital Number IDDN.FR.001.390035.001.S.P.2013.000.10600.

Foreword

This edition of the Getting Started Guide was released by Vector Informatik GmbH.

It is part of the user documentation of the Squore software product edited and distributed by Vector Informatik GmbH.

For information on how to use and configure Squore, the full suite of manuals includes:

| User Manual | Target Audience |
|---|---|
| | New users before their first installation |
| | IT personnel and Squore administrators |
| | End users, new users wanting to discover Squore features |
| | Continuous Integration Managers |
| | Squore configuration maintainers, Quality Assurance personnel |
| | Eclipse IDE users |
| | End Users, Squore configuration maintainers |
| | End Users, Continuous Integration Managers |
| | End Users, Quality Assurance personnel |

You can also use the online help from any page when using the Squore web interface by clicking ? > Help.

Licence

No part of this publication may be reproduced, transmitted, stored in a retrieval system, nor translated into any human or computer language, in any form or by any means, electronic, mechanical, magnetic, optical, chemical, manual or otherwise, without the prior written permission of the copyright owner, Vector Informatik GmbH. Vector Informatik GmbH reserves the right to revise this publication and to make changes from time to time without obligation to notify authorised users of such changes. Consult Vector Informatik GmbH to determine whether any such changes have been made. The terms and conditions governing the licensing of Vector Informatik GmbH software consist solely of those set forth in the written contracts between Vector Informatik GmbH and its customers. All third-party products are trademarks or registered trademarks of their respective companies.

Warranty

Vector Informatik GmbH makes no warranty of any kind with regard to this material, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Vector Informatik GmbH shall not be liable for errors contained herein nor for incidental or consequential damages in connection with the furnishing, performance or use of this material.

This edition of the Getting Started Guide applies to Squore 19.1.18 and to all subsequent releases and modifications until otherwise indicated in new editions.

Responsibilities

Approval of this version of the document and any further updates are the responsibility of Vector Informatik GmbH.

Contacting Vector Informatik GmbH Product Support

If the information provided in this manual is erroneous or inaccurate, or if you encounter problems during your installation, contact Vector Informatik GmbH Product Support: https://support.squoring.com/

You will need a valid customer account to submit a support request. You can create an account on the support website if you do not have one already.

For any communication:

- support@squoring.com
- Vector Informatik GmbH Product Support, Squoring Technologies - 9/11 rue Matabiau / 31000 Toulouse - FRANCE

Getting the Latest Version of this Manual

The version of this manual included in your Squore installation may have been updated. To check for updated user guides, visit the Vector Informatik GmbH documentation site at https://support.squoring.com/documentation/latest, where you can consult or download the latest Squore manuals. Manuals are constantly updated and published as soon as they are available.

1. Introduction

This document is the Getting Started Guide for Squore.

It is intended as a follow-up to the Squore Installation and Administration Guide and will help you understand how to use the Squore user interface to create and update projects. It is divided into several chapters, as detailed below:

-

The Tools at Your Disposal provides details on where to find the sample Squore projects.

-

Accessing Squore will guide you through your first access to Squore as a user.

-

Creating Projects and Versions covers ways of creating new projects and versions.

-

Understanding Analysis Results describes the user interface and functionality you will use in Squore on a daily basis.

-

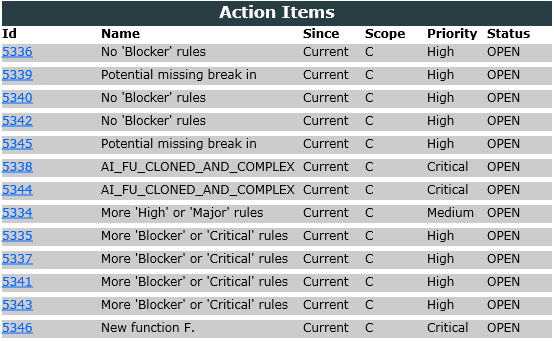

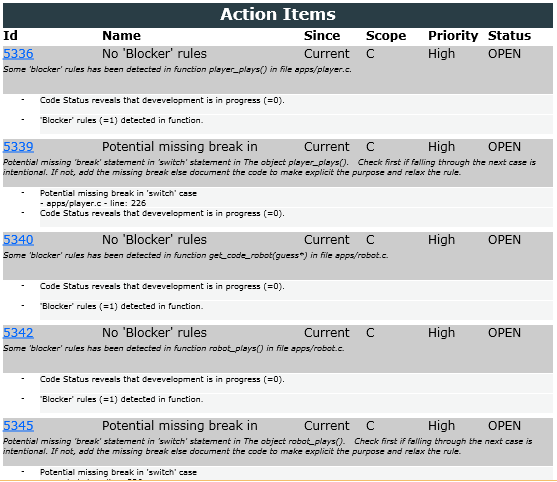

Managing Your To-Do List With Squore helps you integrate action items suggested by Squore into your workflow.

-

Going Beyond Source Code shows how you can work with artefacts that are not source code.

-

Track Your Favourite Indicators shows how you can track your favourite items and consult Squore results on mobile devices.

-

Focus on Your Milestones guides you through the introduction and management of milestones and objectives in Squore.

-

Communicating With Squore covers all reporting features of Squore.

-

Keep it Tidy: Project Maintenance in Squore helps you maintain a Squore installation.

-

Repository Connectors and Data Providers detail the various Repository Connectors and Data Providers you can use when launching analyses.

2. The Tools at Your Disposal

Default Users and Sample Projects

Squore ships with a collection of sample projects that we will refer to throughout this guide. Each project consists of one or several versions of the source code of an application. The code can be found in Squore Server and Squore CLI in the folder <SQUORE_HOME>/samples. If you do not have access to the sample projects, contact your Squore administrator to obtain a copy of the code.

Squore ships with a database that contains two sample users that you can use to familiarise yourself with all the functionality available:

- admin/admin is the default user that can manage the server installation, reload the server configuration after changes and perform access management tasks for the Squore installation.
- demo/demo is the default Squore power user that can create, review and manage projects, as well as give team members visibility or management privileges on the projects they manage.

You can use these two default users, but we recommend that you change their passwords after your first connection. The privileges and permissions assigned to these default users can be modified as needed. You can familiarise yourself with Squore permissions and privileges by referring to Understanding Profiles and Roles.

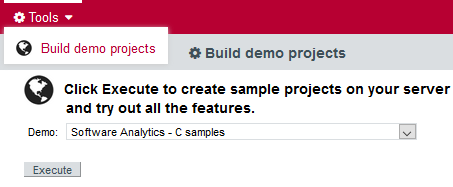

You may choose to read this manual from beginning to end or jump straight to a specific topic. Logging in as the demo user gives you access to a Tools menu that allows you to reproduce the examples shown in this manual. Click Tools > Build Demo Projects and select the Software Analytics - C samples to get started.

The Tools menu is only accessible to the demo user or to any user who belongs to a group called demo. Contact your Squore administrator if you do not have access to the Tools menu to create the sample projects.

Getting More Help

If at any moment you have doubts about how a feature works, Squore offers help in HTML and PDF formats. A wiki and a support site are also available.

Online Help

The Squore online help can be accessed from anywhere in Squore by clicking on the ? > Help menu entry.

The online help is contextual and provides information in a popup window about the page that you are currently viewing in Squore.

User Guides and Support Wiki

The Squore user guides are available in PDF and HTML format by clicking the ? > User Guides menu entry in Squore. You can download a copy for offline use.

The Squore Support Wiki provides release notes, known issues and hints and tips for current and past Squore versions. Visit http://openwiki.squoring.com for more information.

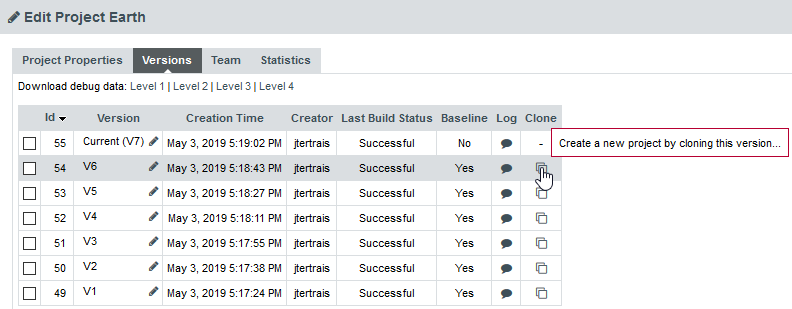

Review Log Files and Download Debug Data

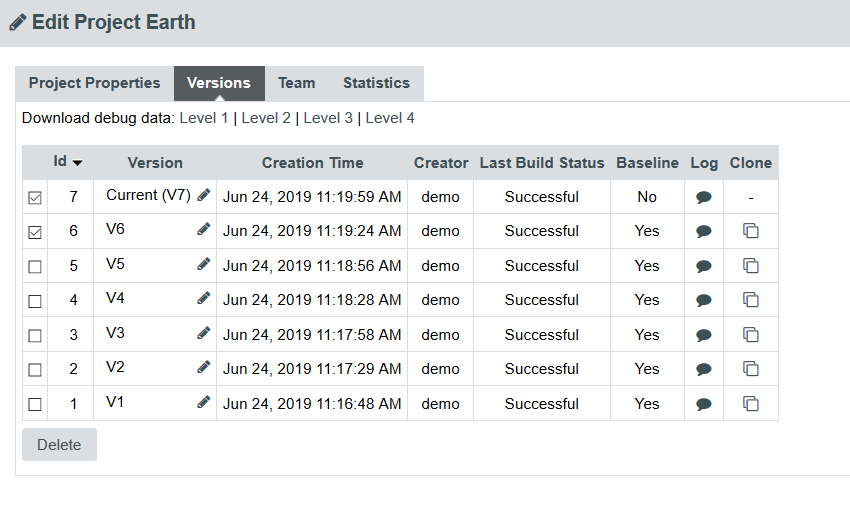

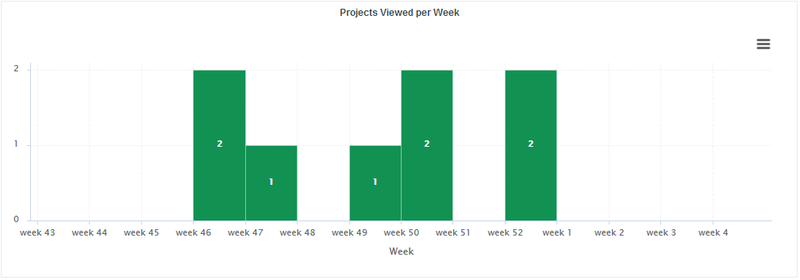

Every owner or Project Manager of a project can retrieve the analysis log files for their projects without the need to consult an administrator. This is done by accessing the Manage page for a particular project and viewing the Versions tab (Projects page > Manage icon > Versions tab) as shown below:

Clicking the Log icon opens a new browser tab showing the project’s client and server logs for that analysis, as well as its configuration and output files.

Clicking one of the Download Debug Data links above the versions table downloads a zip file of the logs and project data that can be further analysed to understand problems during analysis. The lower levels include only data related to the latest analysis, while the higher levels include information related to the history of the entire project.

A debug data zip file contains a collection of logs, temporary and output files for one or more versions of the project. Each version folder can contain the following items:

- A DataProviders folder containing the output files generated by each Data Provider run during the analysis.
- A [DataProviderName].log file for each Data Provider included in the analysis.
- A conf.xml file summarising the project parameters used for the analysis.
- An output.xml file containing the output information requested with the --filter parameter during the analysis.
- A build.log file containing information about actions carried out on the server during the analysis.
- A build_client.log file containing information about actions carried out on the client during the analysis.
- An excluded.log file containing the list of all files not included in the analysis and the reason for their exclusion. Note that this file is only generated if some files were excluded.
- A table.md5 file containing state information about the analysed source code, if any.
- A storage folder containing information about the analysed source code, if any.
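If you work with debug packages regularly, it can help to check quickly which of the items listed above each version folder actually contains. The following Python sketch does this with the standard zipfile module. It assumes, hypothetically, that the archive holds one top-level folder per version (for example `V1/build.log`); the layout of a real download may differ, and the function names `summarise_debug_zip` and `excluded_files_reported` are illustrative only.

```python
import zipfile
from collections import defaultdict

# Items documented for each version folder of a debug data zip.
# Data Provider logs ([DataProviderName].log), build.log, build_client.log
# and excluded.log are all matched by their .log suffix.
KNOWN_FILES = {"conf.xml", "output.xml", "table.md5"}
KNOWN_FOLDERS = {"DataProviders", "storage"}


def summarise_debug_zip(source):
    """Return {version_folder: set of documented items found in it}.

    `source` is a path or a binary file object. The layout assumed here
    (one top-level folder per version) is a hypothesis, not a documented format.
    """
    summary = defaultdict(set)
    with zipfile.ZipFile(source) as archive:
        for name in archive.namelist():
            parts = name.strip("/").split("/")
            if len(parts) < 2:
                continue  # entry is not inside a version folder
            version, item = parts[0], parts[1]
            is_folder = len(parts) > 2 or name.endswith("/")
            if is_folder and item in KNOWN_FOLDERS:
                summary[version].add(item)
            elif not is_folder and (item in KNOWN_FILES or item.endswith(".log")):
                summary[version].add(item)
    return dict(summary)


def excluded_files_reported(summary, version):
    """excluded.log is only generated when some files were excluded."""
    return "excluded.log" in summary.get(version, set())
```

For example, `summarise_debug_zip("debug.zip")` on a downloaded package maps each version folder to the documented items it contains, so a missing build_client.log or an unexpected excluded.log stands out immediately.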

If you do not want to download the entire debug package, note that the main log files can also be downloaded individually from the Projects page by clicking on the project status label.

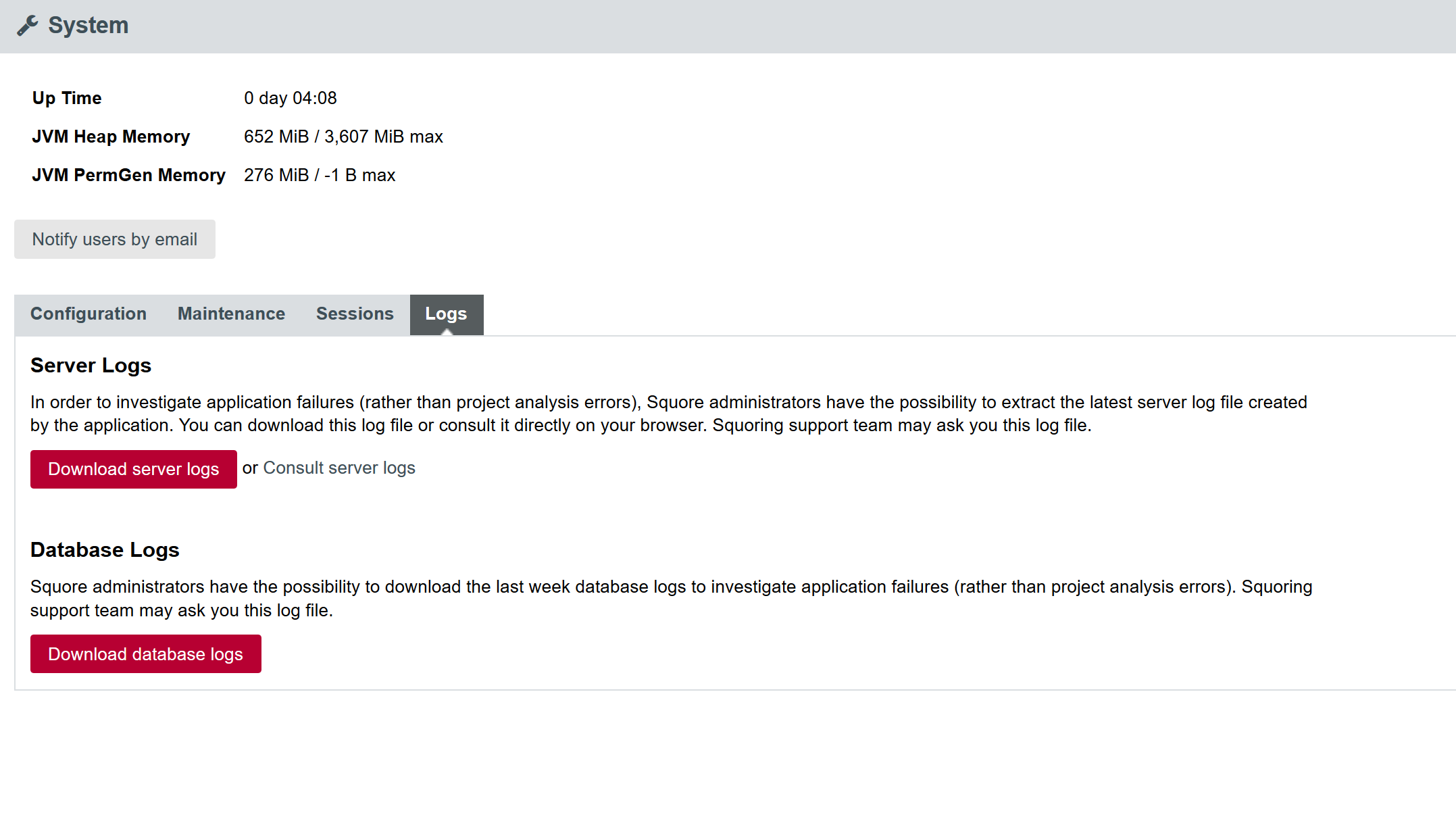

To investigate application failures (rather than project analysis errors), Squore administrators can extract and download the log files created by the application. If you have administrator privileges, you can access them after logging in by clicking Administration > System in the toolbar and opening the Logs tab.

In the Logs tab, administrators have two kinds of logs available:

- Server Logs: extract and download into a .zip file the latest server log file created by the application. It is also possible to consult the server log in the UI.
- Database Logs: extract and download into a .zip file the database logs for the last week.

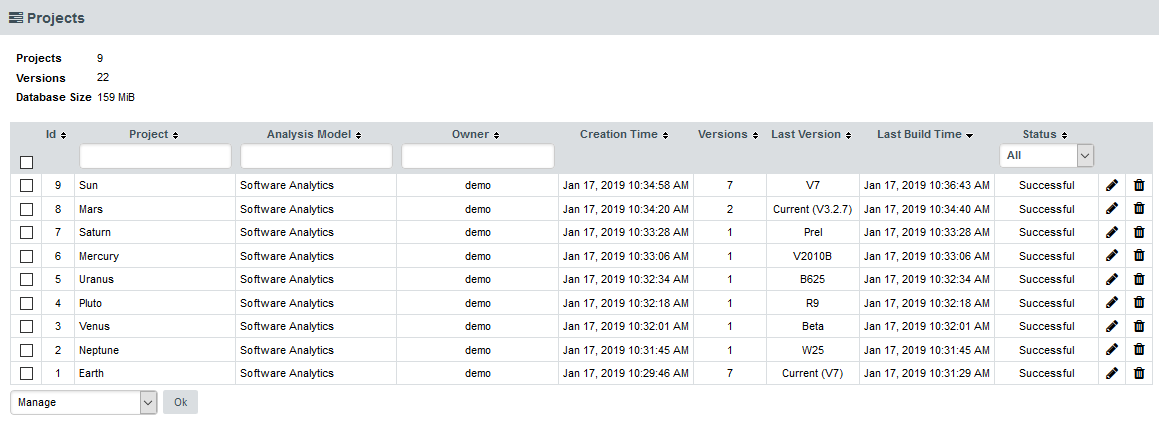

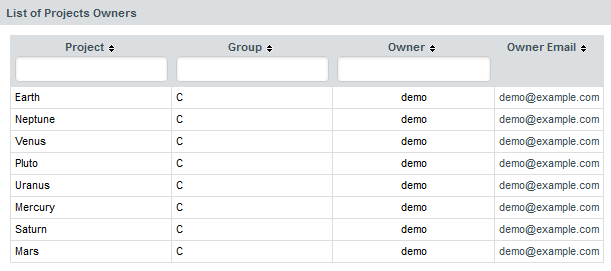

Administrators can also get debug information and manage any project created on the server by clicking Administration > Projects, which opens a summary page giving a detailed view of all projects created on Squore Server, as shown below.

3. Accessing Squore

This chapter walks you through your first access to Squore and covers the web interface and some ways to customise it to your liking.

Understanding Profiles and Roles

Before you start working with Squore, it is essential to understand how access management works. The various permissions and privileges that can be assigned to Squore users are grouped in profiles and roles respectively. A set of default roles and profiles is available when you first start the server. You can edit them, or create more as needed.

Use this simple trick to remember the difference between a profile and a role:

- A Profile is a set of permissions granting access to certain Squore features to a user.
- A Role is a set of privileges for a user within a Squore project.

A Squore user with the Administrator profile can manage users, their roles and profiles. A Squore user with the Project Manager role for a project can create a new version of this project or give access to another user to this project’s analysis results.

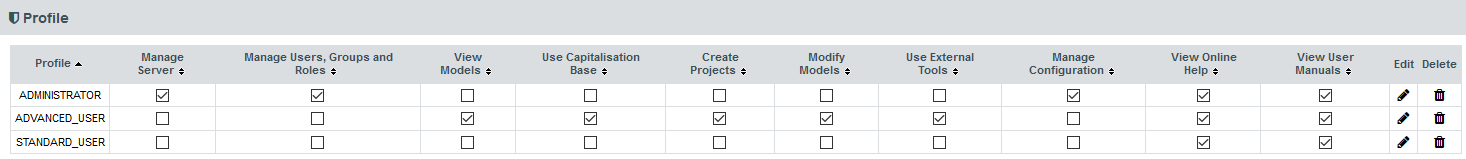

User Profiles

You can use profiles to grant or deny access to the following Squore features:

- Manage Server: Configure the server, access server logs, manage all projects.
- Manage Users, Groups and Roles: Complete access to user management on the server.
- View Models: Allows users to use the Viewer and the Validator.
- Use Capitalisation Base: Provides access to the Capitalisation Base feature to learn from past data in order to improve your model.
- Create Projects: Allows users to run analyses.
- Modify Models: Allows users to use the Dashboard Editor and the Analysis Model Editor, as well as view usage statistics for particular analysis models.
- Use External Tools: View and use external tools configured by your Squore Administrator. To learn more about this feature, consult the Configuration Guide.
- Manage Configuration: Allows users to reload the server configuration from disk.
- View Online Help: Allows users to consult the online help from the web interface.
- View User Manuals: Allows users to consult the product documentation from the web interface.

Three profiles are available by default, with permissions set as shown below:

Note that a profile can be assigned to a user or to a group of users. It is therefore possible for a user to have more than one profile, in which case their permissions are the combination of all permissions from all of their profiles.

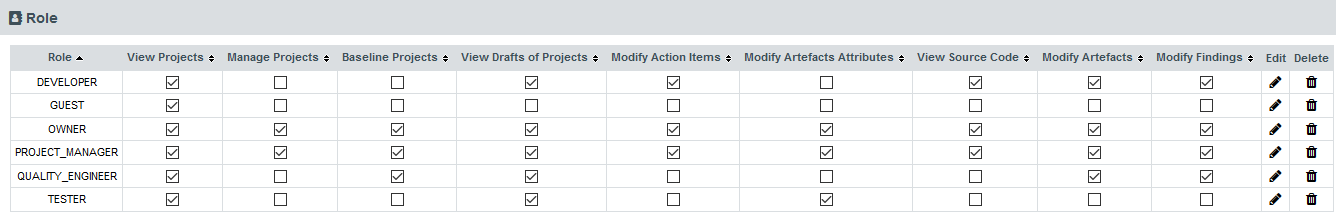
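The combination rule described above (a user's permissions are the union of the permissions of every profile assigned to them directly or through a group) can be modelled in a few lines of Python. This is a sketch of the documented behaviour, not Squore's implementation; the profile names `analyst` and `modeller` and the group `qa` are hypothetical, while the permission names come from the list above.

```python
from typing import Dict, Iterable, Set


def effective_permissions(user: str,
                          user_profiles: Dict[str, Iterable[str]],
                          user_groups: Dict[str, Iterable[str]],
                          group_profiles: Dict[str, Iterable[str]],
                          profile_permissions: Dict[str, Set[str]]) -> Set[str]:
    """Combine the permissions of every profile a user holds, directly or via groups."""
    # Profiles assigned to the user directly...
    profiles = set(user_profiles.get(user, ()))
    # ...plus profiles inherited from each group the user belongs to.
    for group in user_groups.get(user, ()):
        profiles.update(group_profiles.get(group, ()))
    permissions: Set[str] = set()
    for profile in profiles:
        permissions |= profile_permissions.get(profile, set())
    return permissions
```

A user assigned the hypothetical `analyst` profile who is also a member of a group holding `modeller` ends up with the permissions of both profiles.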

User Roles

A role is the set of privileges that a user enjoys in the context of a project. You can use roles to allow users to undertake these actions within the scope of a project:

-

View Projects: Allows a user to see a project in their project list and to browse this project’s analysis results.

-

Manage Projects: Allows a user to manage a project: rename it, create or delete versions, access project creation log files and add other users to the project team.

-

Baseline Projects: Allows a user to create a baseline version of a project that will not be overwritten by subsequent analysis. For more information about baselining, see Working with Draft and Baseline Versions.

-

View Drafts of Projects: Allows a user to view the current draft version of a project. Without this privilege, only baseline versions of a project are visible in the project portfolio. For more information about baselining, see Working with Draft and Baseline Versions.

-

Modify Action Items: Allows updating the status of Action Items from TODO to Relaxed for example. Without this privilege, the status is displayed as a read-only field.

-

Modify Artefacts Attributes: Allows a user to modify the value of attributes displayed in the Forms tab of the Explorer. Without this privilege, attributes are read-only.

-

View Source Code: Allows a user to click to view the source code of an artefact from any tab in the Explorer.

-

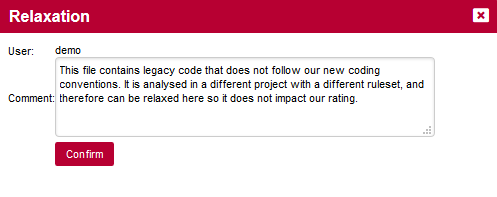

Modify Artefacts: Allows a user to add, delete, relax, exclude artefacts from the artefact tree. Users without this privilege can still view artefacts created by others.

-

Modify Findings: Allows user to change the status of violations on the Findings tab. Users without this privilege can view relaxed findings but cannot relax or unrelax them.

Six roles are available by default, with privileges assigned as shown below:

Note that a user can have multiple roles in a project. In this case, they combine the privileges of all their roles.
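Roles combine in a similar way, but always within the scope of a single project. The Python sketch below is illustrative and not a Squore API: it looks up the roles a user holds in one project and merges their privileges. The role names `reader` and `release_manager` are hypothetical; the privilege names come from the list above.

```python
from typing import Dict, Iterable, Set, Tuple

# Role assignments are scoped to a project: (user, project) -> roles.
Assignments = Dict[Tuple[str, str], Iterable[str]]


def project_privileges(user: str, project: str,
                       assignments: Assignments,
                       role_privileges: Dict[str, Set[str]]) -> Set[str]:
    """Combine the privileges of every role the user holds in this project."""
    privileges: Set[str] = set()
    for role in assignments.get((user, project), ()):
        privileges |= role_privileges.get(role, set())
    return privileges
```

Because the lookup key includes the project, roles held in one project grant nothing in another.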

The OWNER role is assigned automatically to the user who creates the first version of a project. A project has only one owner, and you can control how much a project owner can see and do by modifying the privileges of the OWNER role. An administrator can transfer ownership of a project to a new user if required.

How Do I Log Into Squore?

Your Squore installation runs on http://localhost:8180/SQuORE_Server by default. By accessing this page in your browser, you will be redirected to the Squore login page, as shown below:

Type in a username and a password and click Login to log in.

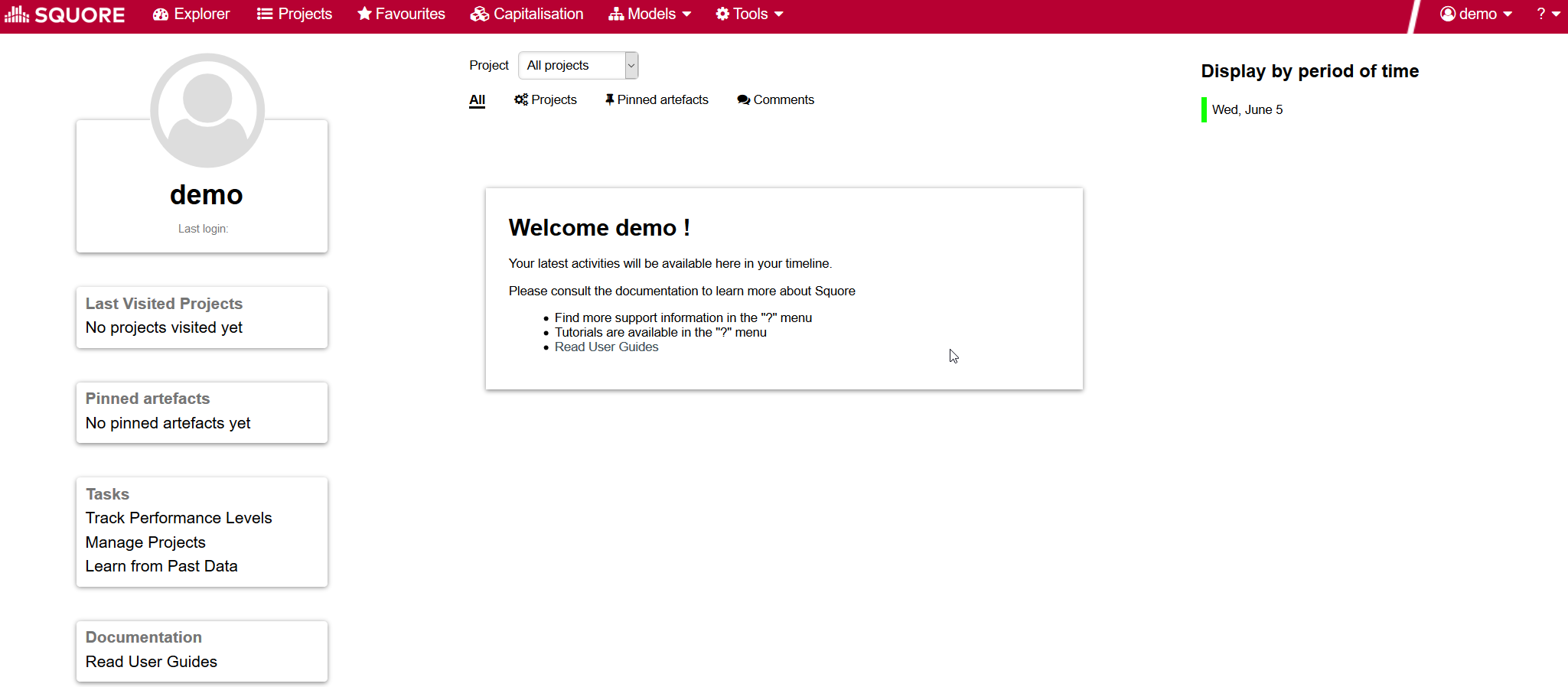

Where Do I Go From The Home Page?

To begin using Squore, log in as the demo user with demo as username and password on the login page. Click the Login button and wait for the Home page to open.

From the Home page, you can return directly to the last projects or favourite artefacts that you had opened in the Explorer before logging out. A timeline of executed builds and a news mechanism are also available, as well as links to the help and other features available for your account.

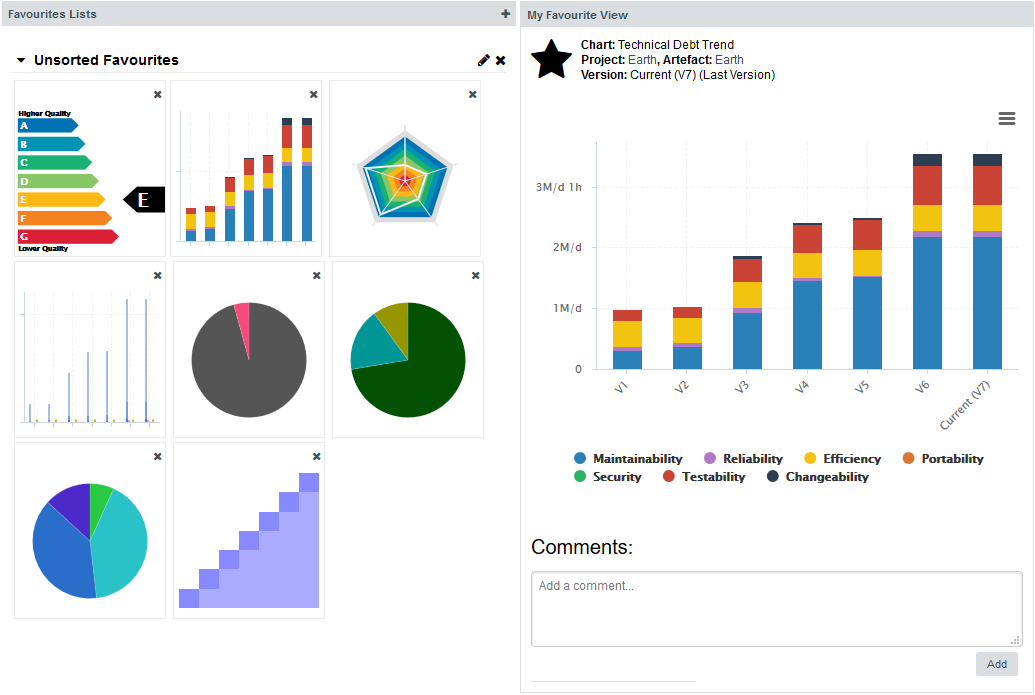

As the demo user, you are an advanced user of Squore and have access to the following functionality from the toolbar :

-

Explorer, where you can review your analysis results.

-

Projects, where you can launch new analyses and manage your projects.

-

Favourites, where you can view and manage your favourite charts across projects.

-

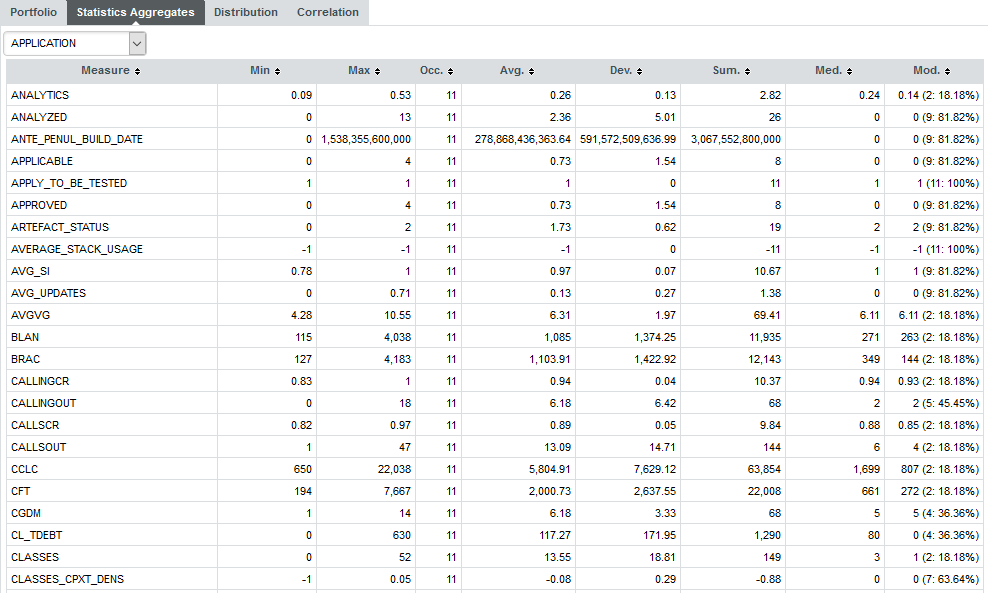

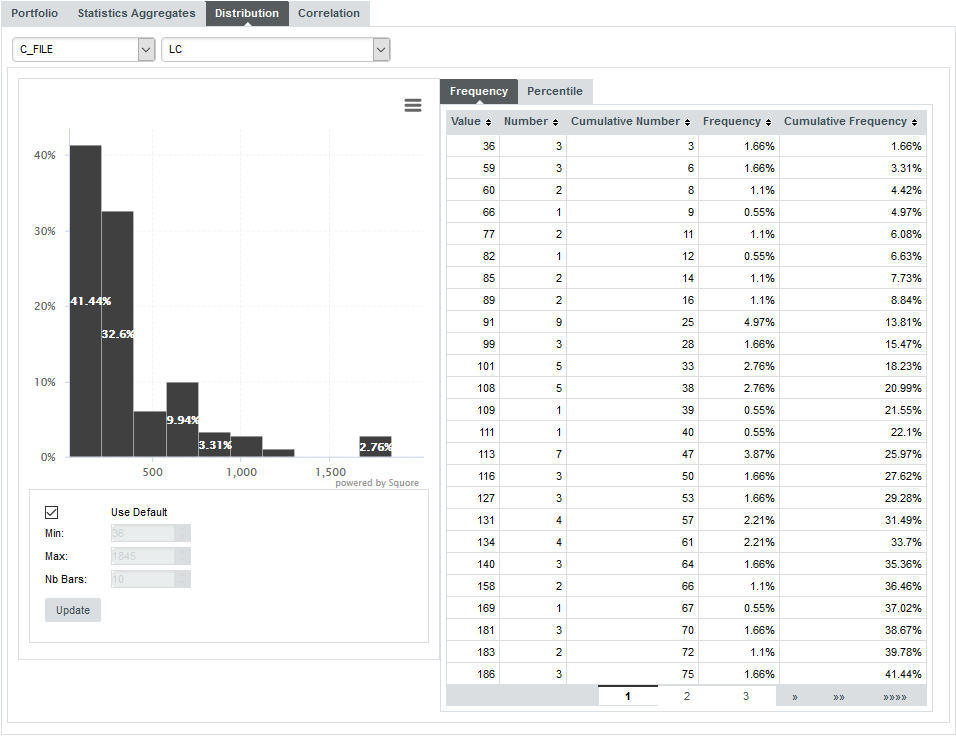

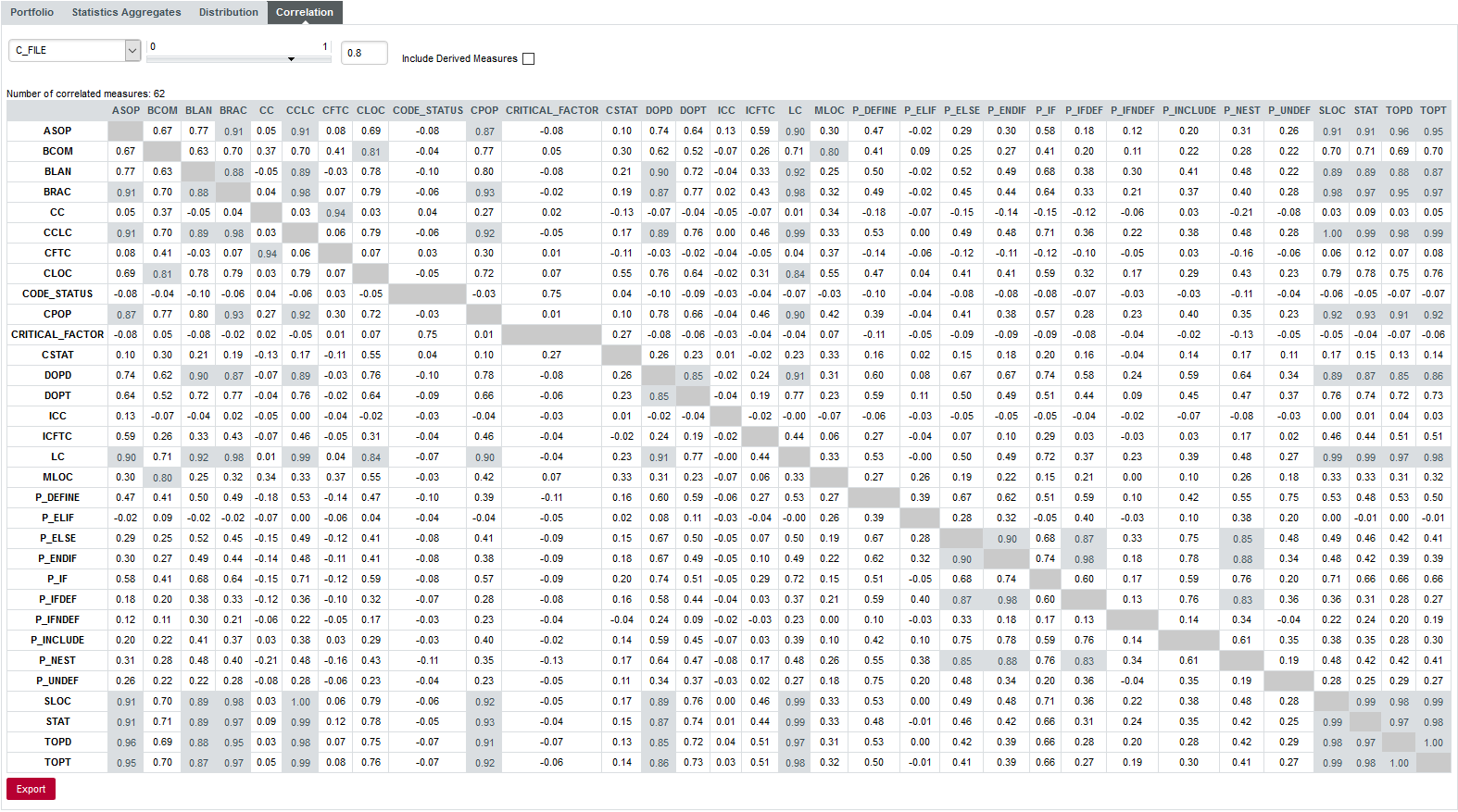

Capitalisation, where aggregated statistical data can be found.

-

Models, under which you can examine all characteristics of your model and edit your dashboards.

-

Tools, which contains shortcuts to scripts that recreate demo projects. Note that only the demo user and members of the demo group have access to this menu by default.

-

<username>, where you can set your preferences and log out from Squore.

-

?, where online help, user manuals and application information can be found.

If you are looking for the administration tools, log in as an administrator of Squore using admin as the username and password. You will gain access to the Administration menu where you can configure access management and administer the server.

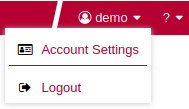

How Do I Log Out of Squore?

You can log out of Squore by clicking your user name in the menu bar and selecting the Logout option. Note that if you close your browser without logging out, your session will automatically time out after two hours.

Can I Tweak the Squore Look and Feel?

Using a Different Theme

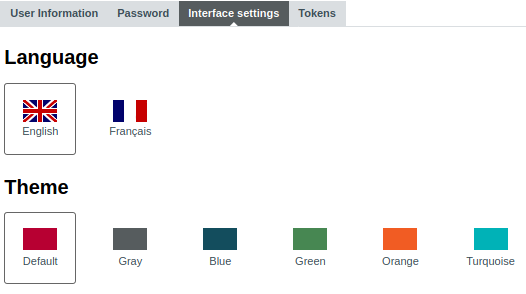

The Squore look and feel can be adapted to your liking using one of six provided themes. They are accessible by clicking your <username> in the menu bar, then Account Settings > Interface settings. Select one of the available colour schemes to change the colour of the interface. Your changes are saved using a browser cookie.

User Interface Language

You can use Squore in various languages. English and French are provided by default, and your Squore administrator can add more as needed. If you want to change the language of the Squore user interface, click on your <username> in the menu bar, then Account Settings > Interface settings, and select one of the flags available. The changes are applied immediately and your preferences are saved even after you log out.

4. Creating Projects and Versions

In this chapter, you will learn about the various ways to create a project in Squore: using the UI, using a command line tool or triggering analyses in a continuous integration environment.

How Do I Create a Project in Squore?

Creating a project in Squore is as easy as following a wizard that will prompt you for information about the source material to analyse, and the external Data Providers to add to the analysis results.

The example below assumes that the source code for the sample project used is available on a network share. The path to the source files to analyse is relative to the server.

In order to create a project for the sample application Neptune2, follow these steps:

- Access http://localhost:8180/SQuORE_Server in your browser. The log-in page appears.
- Log in as the demo user with the login/password combination demo/demo.
- Click the Login button. You are presented with the Squore Home page.
- Click Projects to switch to the projects view and click Create Project to create the Neptune2 project.

-

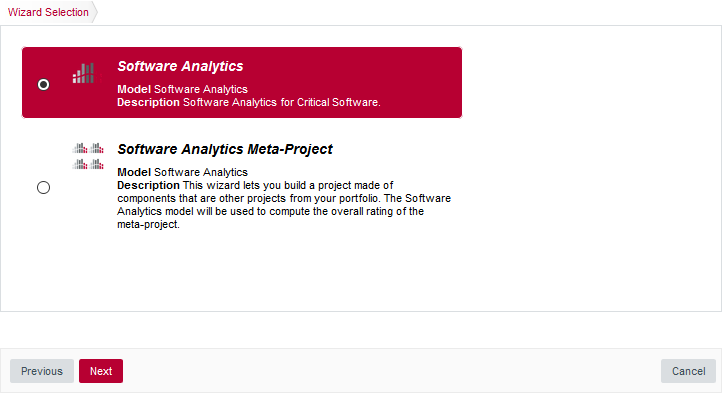

The Wizard Selection screen appears. Project wizards allow you to use different analysis models and tools to analyse your projects. For this demo, click the Software Analytics wizard to start creating the project.

The Wizard Selection screen

The Wizard Selection screenIf your Squore installation has been customised to provide only one project wizard, the Wizard Selection is skipped.

-

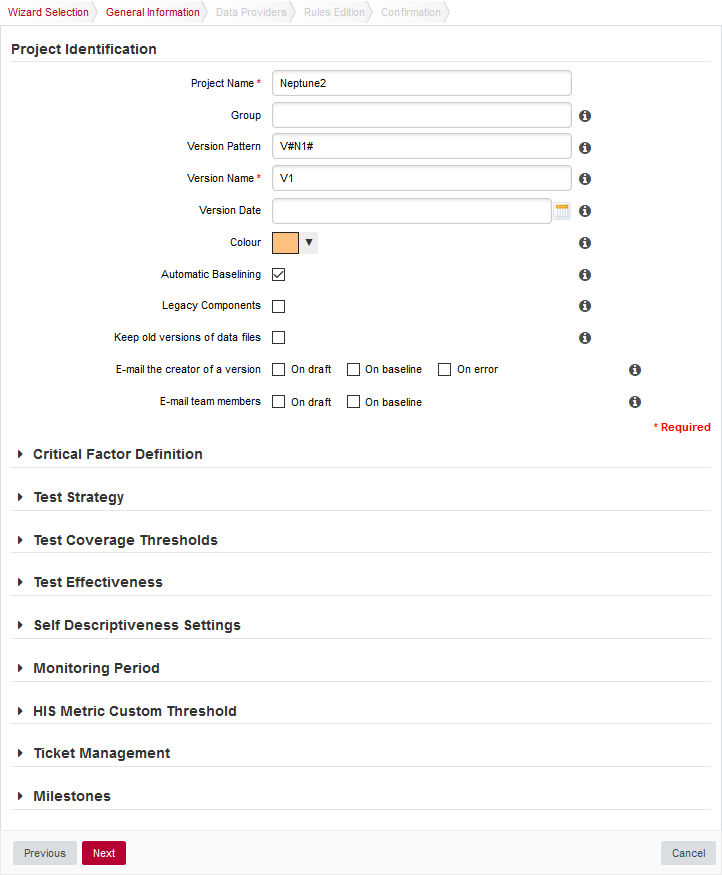

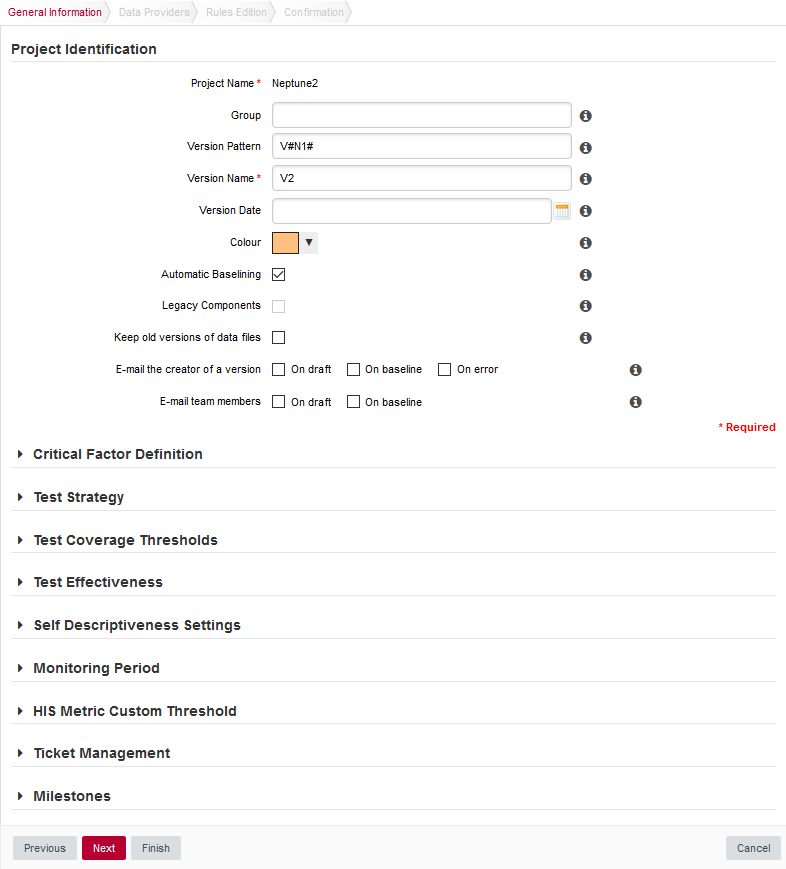

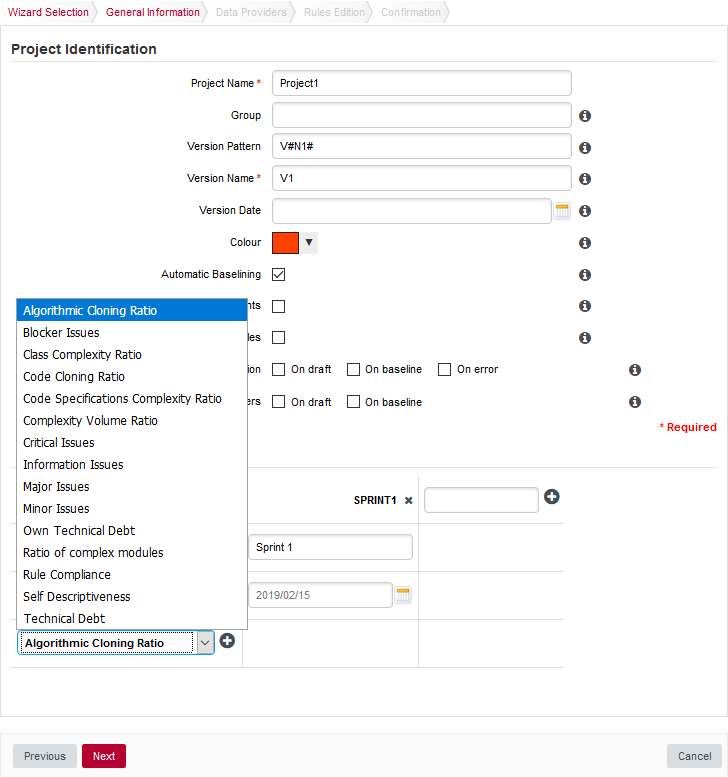

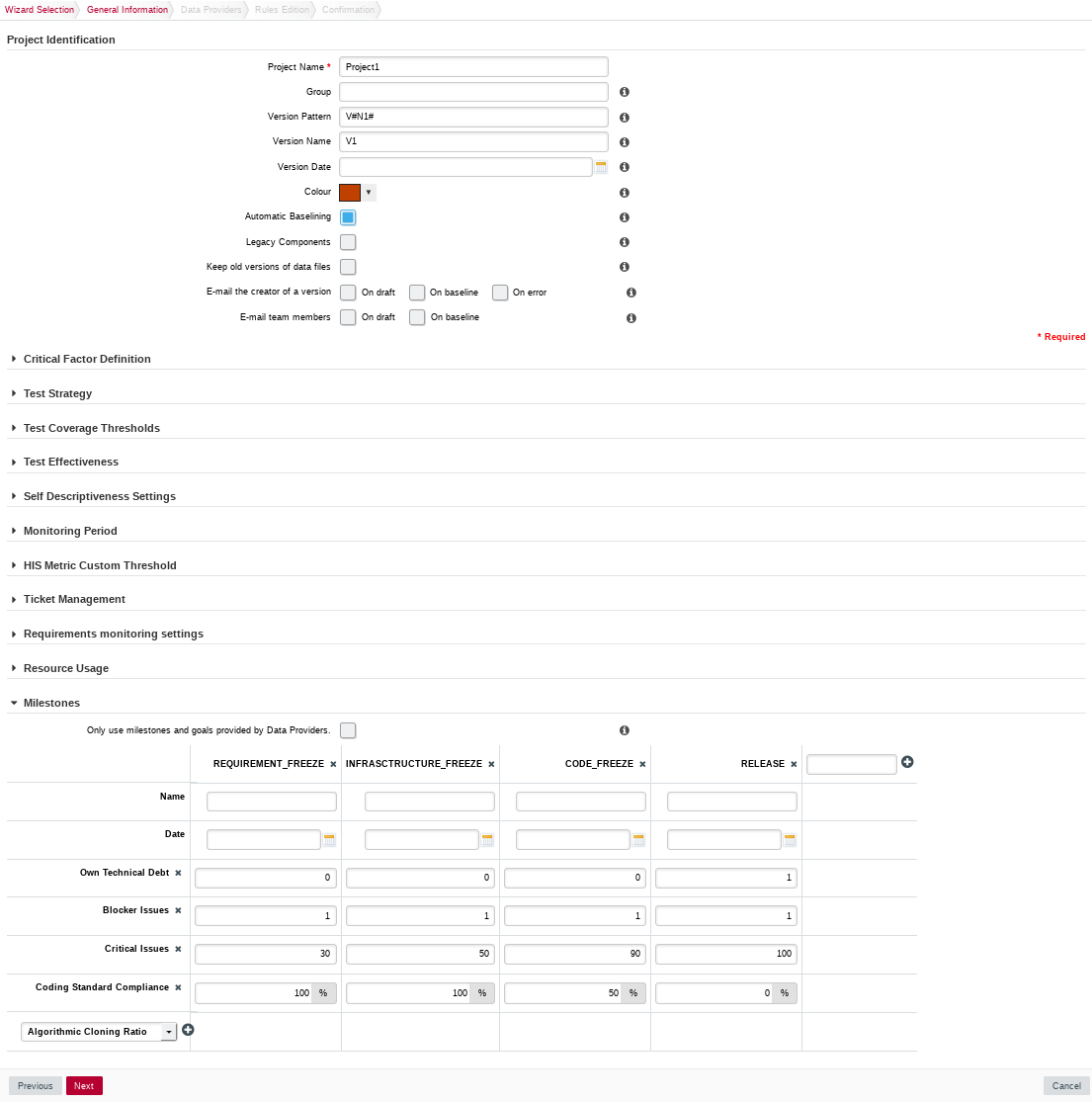

The General Information screen appears.

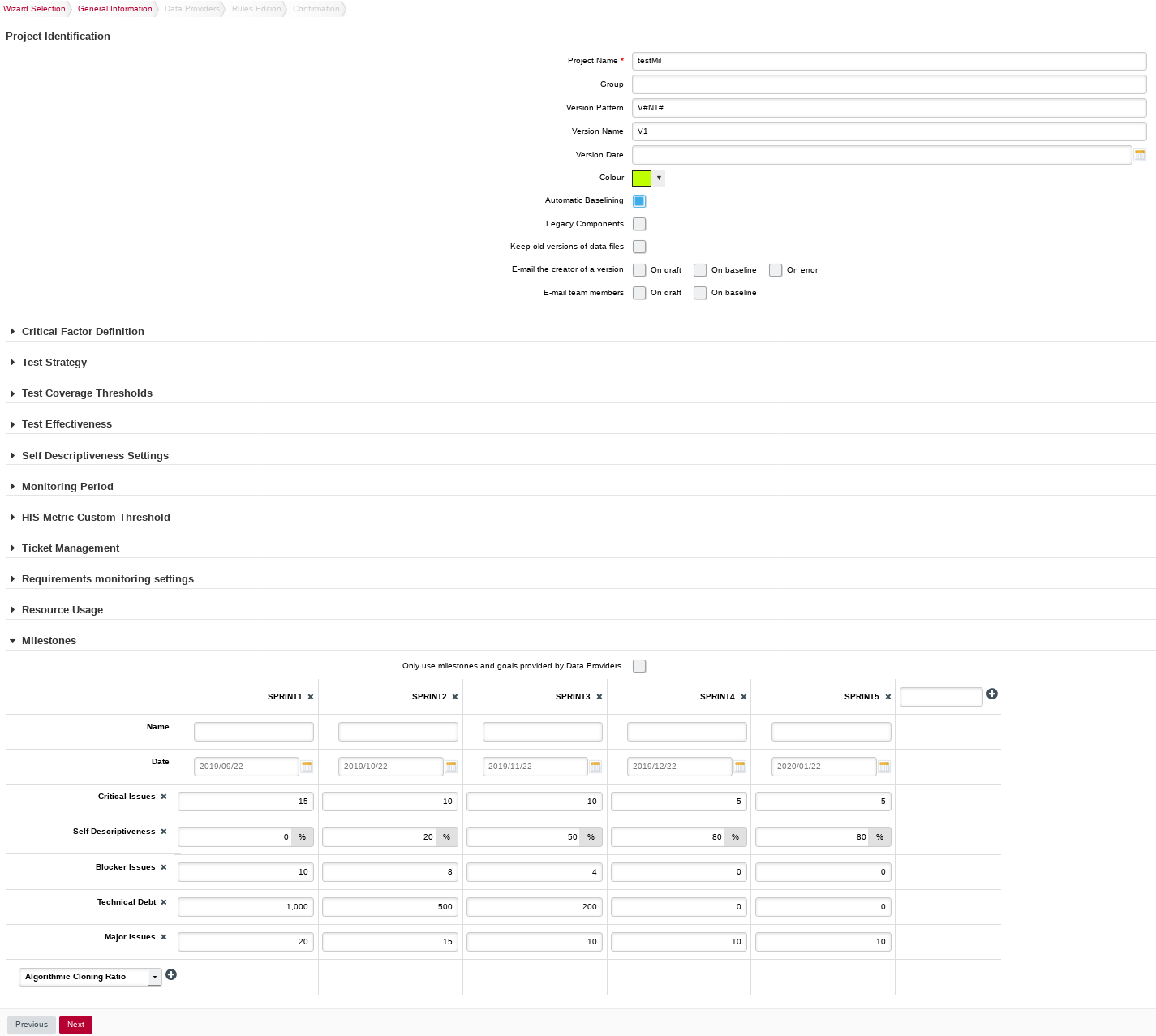

The General Information screen

The General Information screenOn this screen, you can enter the information relative to your project in the Project Identification section

The Software Analytics model offers extra parameters below the Project Identification section, but you can ignore them for now.

-

Click the Next button. The Data Providers screen is shown:

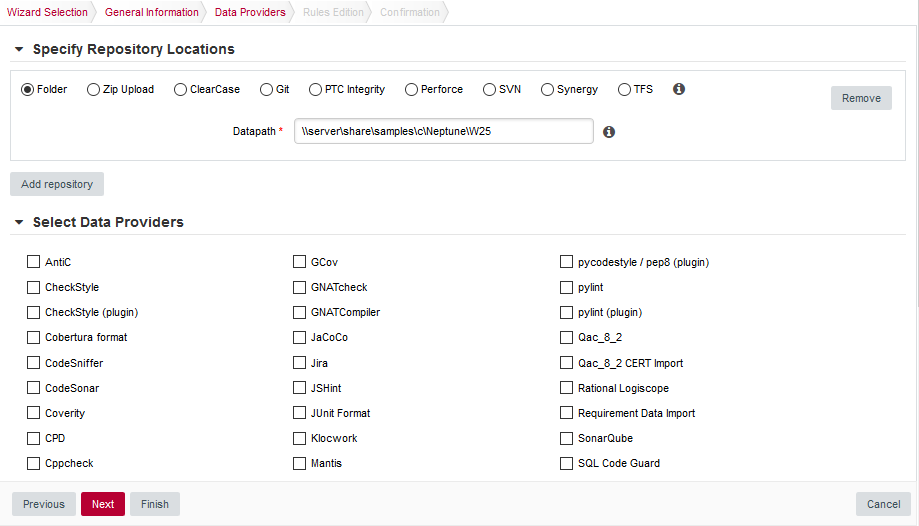

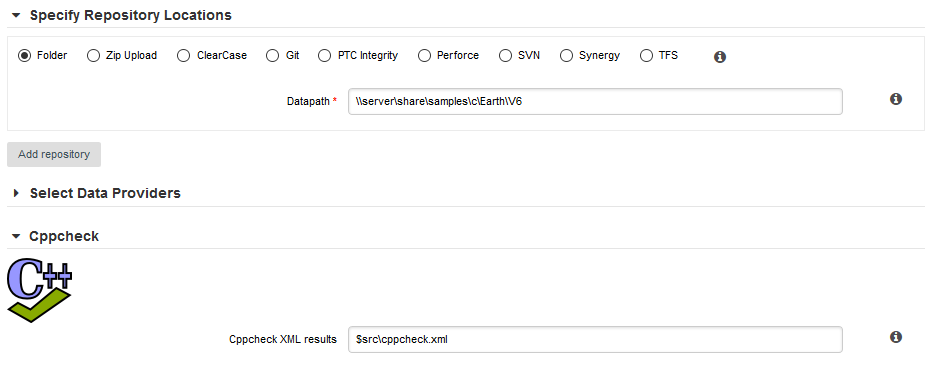

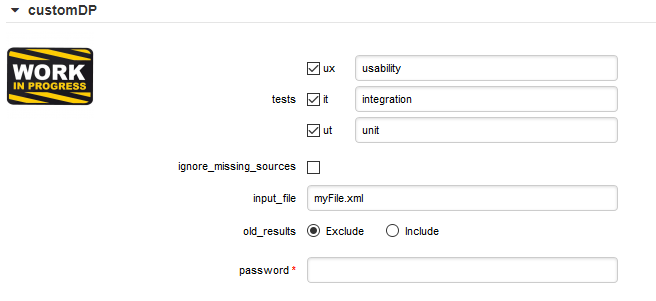

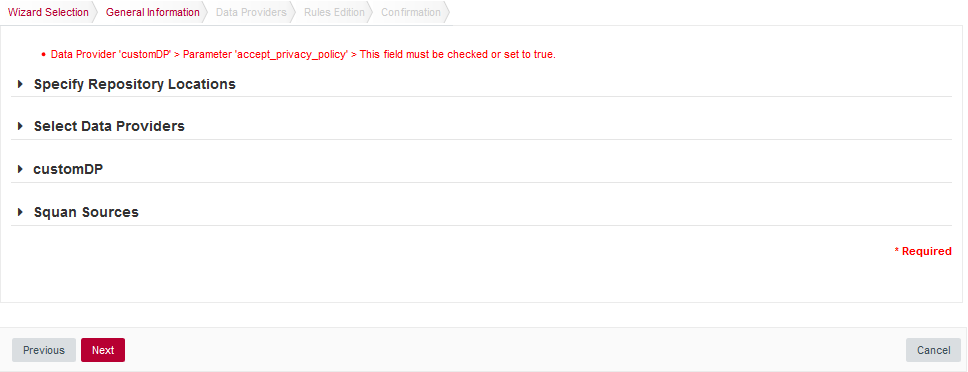

The Data Providers options screen

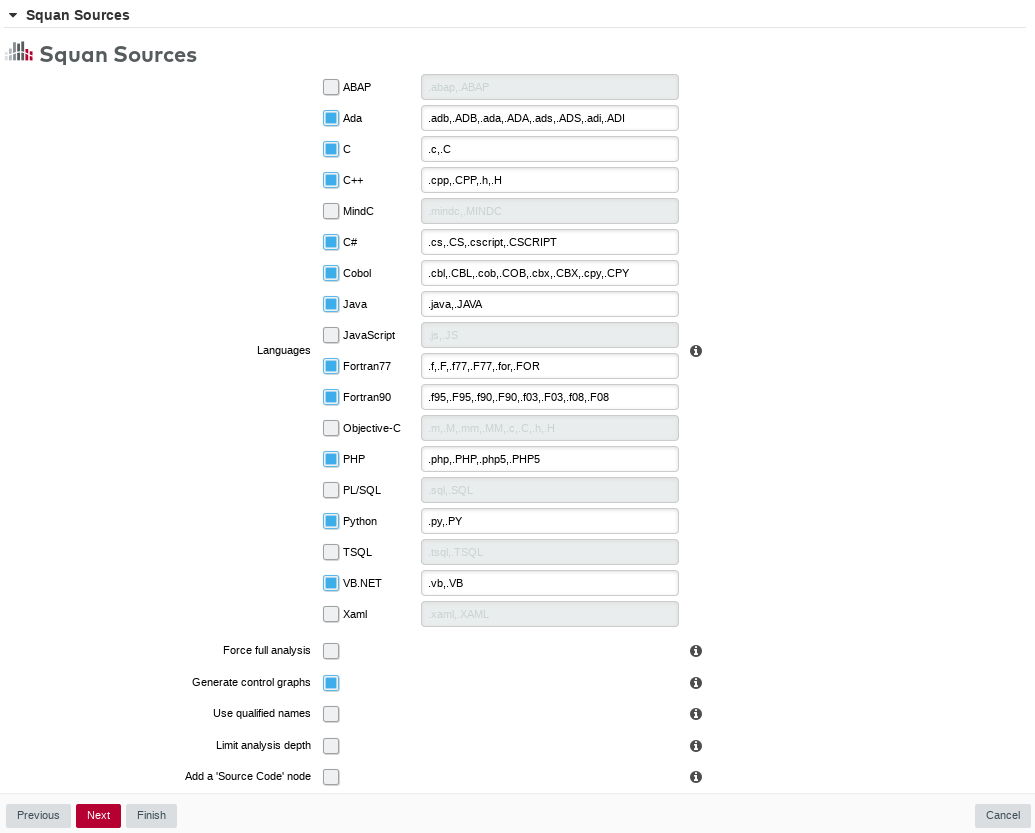

The Data Providers options screenThis screen allows configuring the repository locations and tools that will be used in your analysis. Set the source code files option to Folder. In the Datapath text box, type the path to the Neptune2 source code: \\server\share\samples\c\Neptune\W25.

The only Data Provider used in our analysis is Squan Sources, the source code analyser, so you can leave all the other tools unchecked.

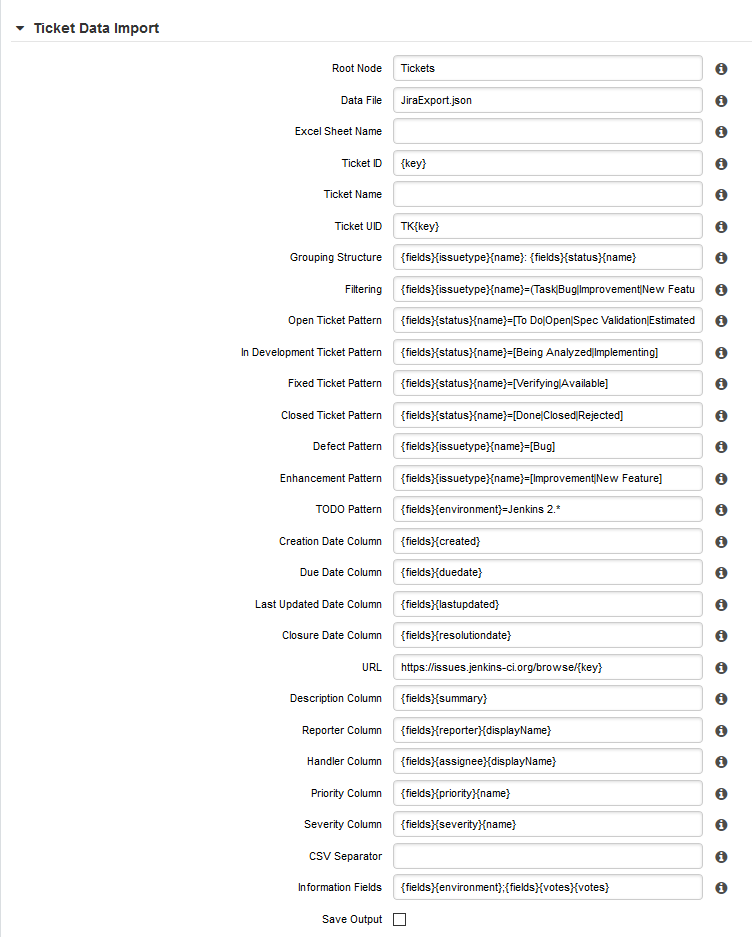

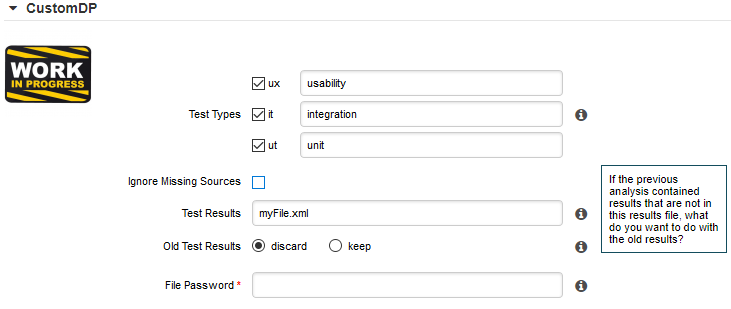

If you want to learn more about the available Repository Connectors and Data Providers, consult Repository Connectors and Data Providers.

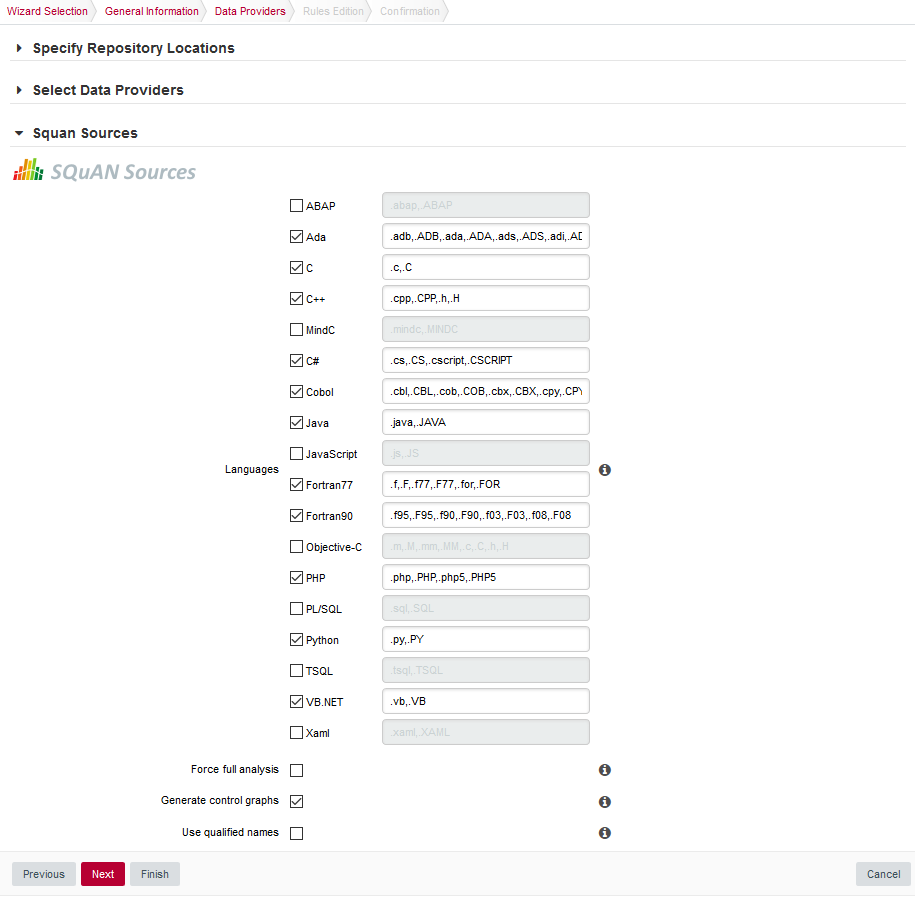

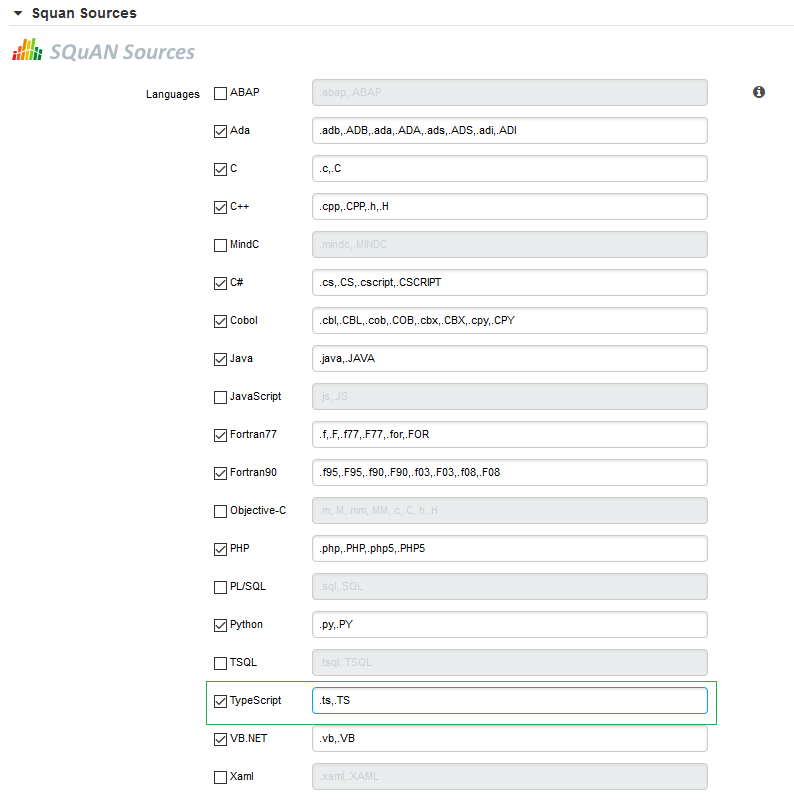

In the Squan Sources parameters, ensure that C is one of the programming languages selected, as shown below:

The Squan Sources Data Provider parameters

The Squan Sources Data Provider parameters -

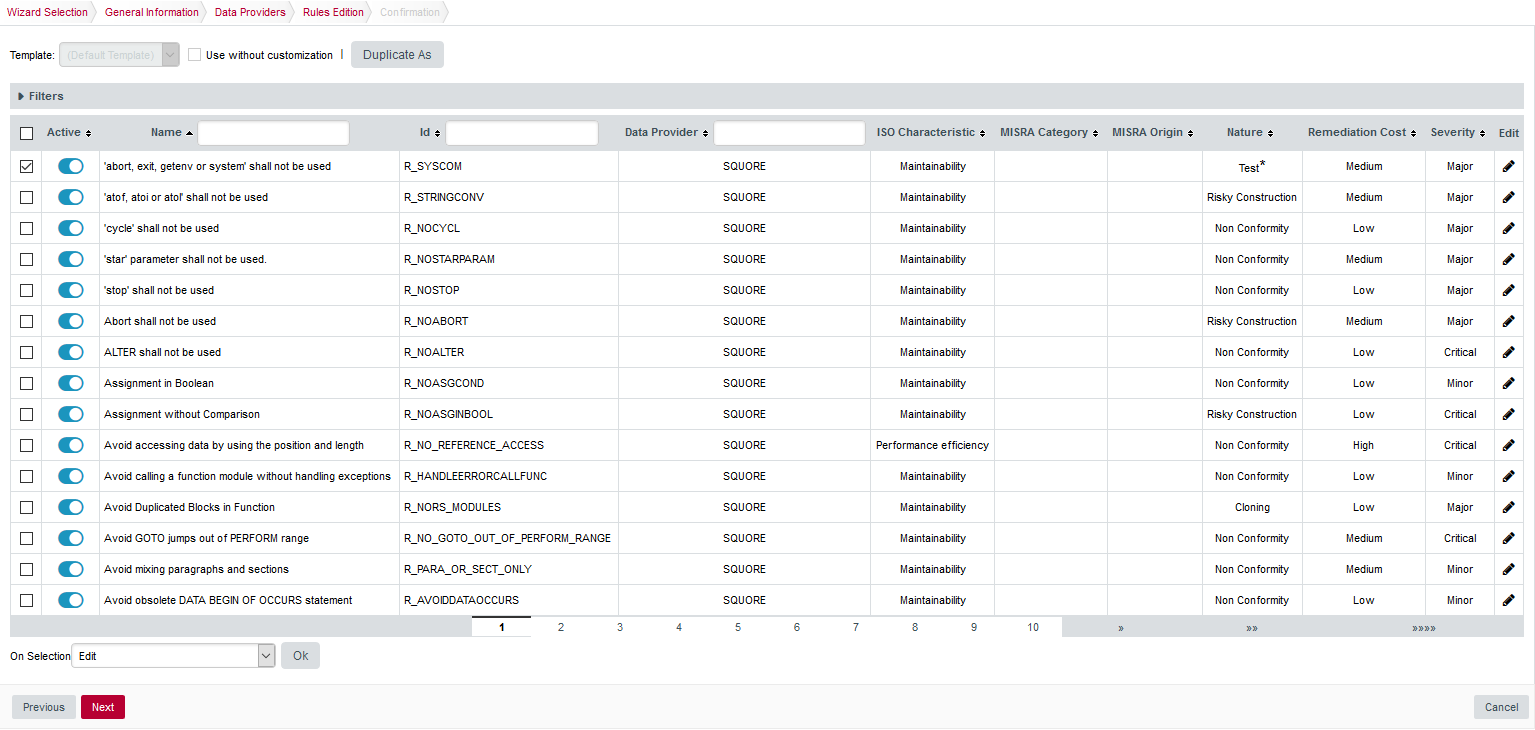

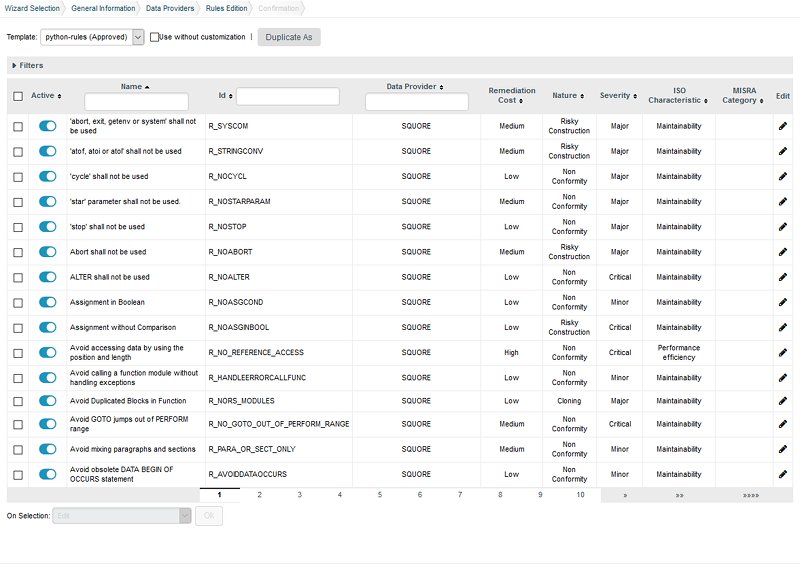

Click the Next button to read the Rules Edition screen. This screen allows you to tweak the ruleset available in the analysis model.

The Rules Edition screen

The table displays the entire model’s ruleset, which you can filter and sort by data provider or category. Each rule can be turned on or off, and you can click the Edit button to adjust the categories for each rule. Note that any modifications from the original configuration are displayed with an asterisk.

Click the Next button when you are satisfied with your modifications. Note that your modifications apply to any subsequent analysis of this project and do not affect other projects using the same model.

This screen may not be enabled in your wizard, as your administrator may have disabled it in your configuration. Your administrator can also decide to make modifications to the ruleset that apply to any project created with this model using the Analysis Model Editor. Consult Analysis Model Editor and Using Ruleset Templates to learn more.

-

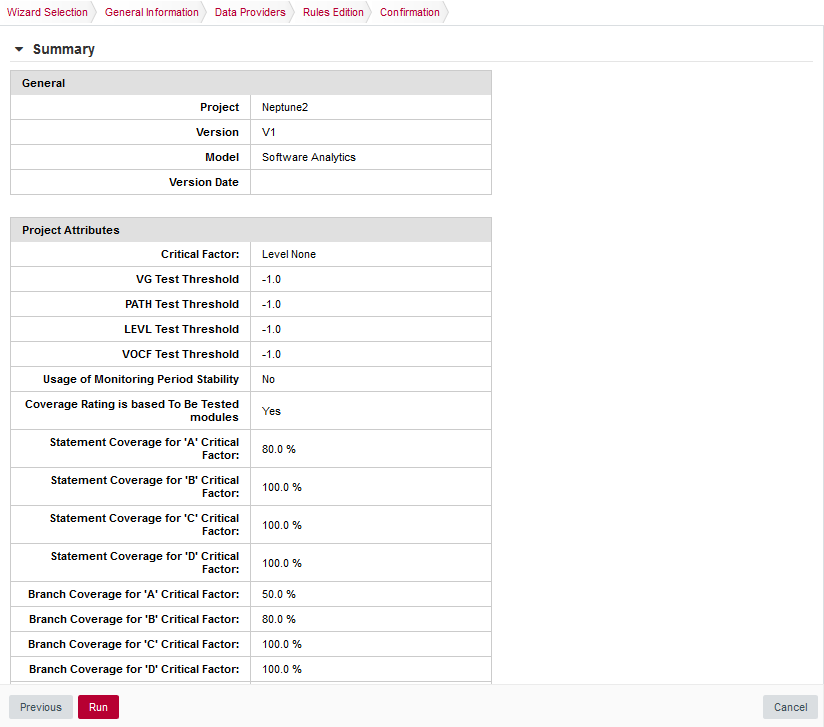

Before launching the analysis, a summary of your selections is displayed. Review the information and click Run to confirm the project creation.

The Rules Edition screen

The Rules Edition screenThe summary page lists all lists all the options you specified for the project creation and also allows outputting them in various formats so that you can repeat the project creation in command line. For more information about reusing the project parameters in a different context, consult the online help or Can I Create a Project Via the Command Line?.

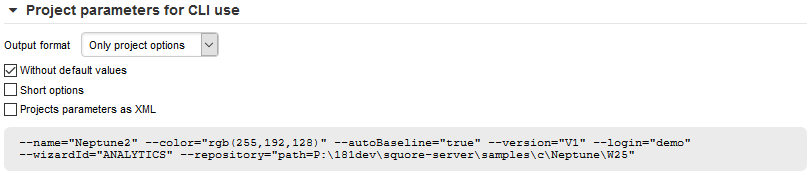

Project Parameters for CLI use

Project Parameters for CLI use

How Do I Know the Project Creation Was Successful?

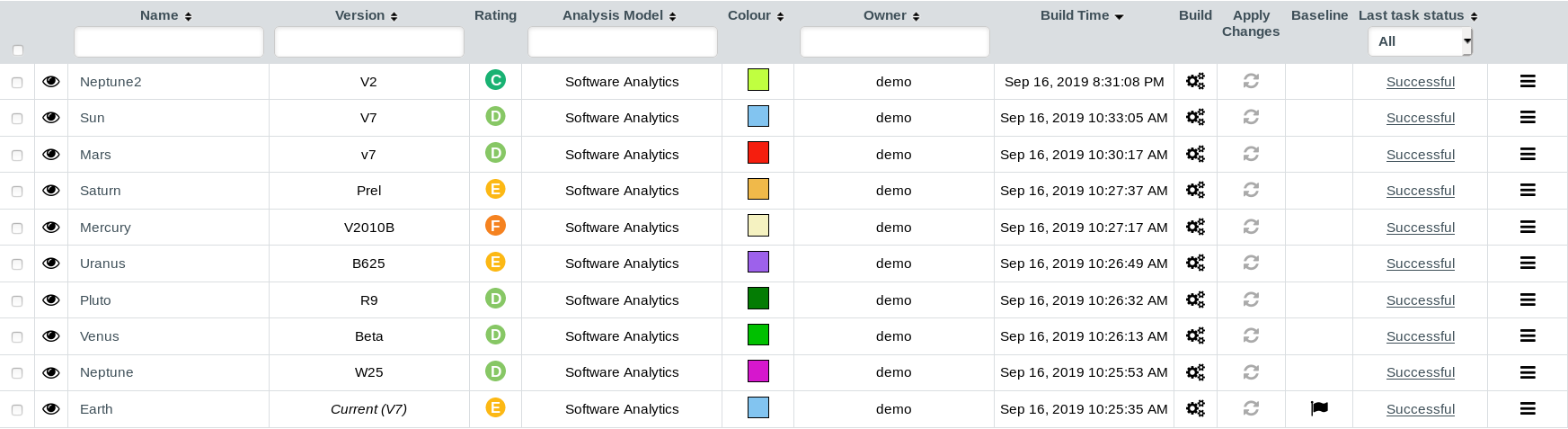

When the project analysis completes, Squore shows you the list of projects. Neptune2 appears in the list, together with information about the current version and its computed rating:

To consult the results of the analysis, click on the project name to view the Squore Dashboard. More information on how to read the Dashboard is available in Where Are My Analysis Results?.

The last column shows the status of the last executed task. If the status is Successful, the project was created successfully.

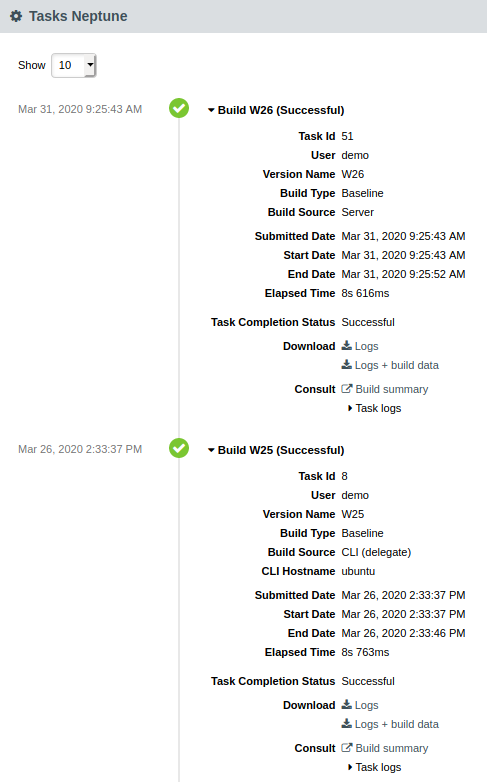

Clicking the last task status redirects you to the project’s Tasks page:

This page lists all the tasks executed on this project (version builds, reapply model, cloning, etc.) and gives access to their logs.

Creating Version 2 of My Project

Adding a version to an already-existing project is a simple procedure that is carried out from the Projects page.

Follow these steps to create version 2 of your project:

- After logging into Squore, click Projects.
- Click the Build icon for the Neptune2 project in order to access the source code file options.
- The first screen of the wizard enables you to specify the version name and to modify some of the project attributes if necessary.

Parameters For the New Version of Neptune2

- Click the Next button to reach the project language and source settings screen. On this screen, you can modify the path to the source code and point to the newer version. Note that by default, Squore displays the path used when analysing the last version. In this example, leave the path as it was for version 1: we are going to create a version that analyses the same code. If you scroll down to the code analysis options, you will notice that some of them are now disabled. This is because the project configuration was set in version 1 and cannot be modified in subsequent analyses. This ensures that your project is scored using the same criteria every time you analyse new code.

Unavailable options when creating version 2 of a project

You can add new sources to the project at this stage if needed. Read more about projects using sources spread over multiple locations in Can I Create Projects with Sources From Multiple Locations?.

- Click Finish and Run to launch the analysis of Neptune2 V2. When the analysis finishes, Neptune2 V2 appears in the list of projects on the Projects page.

Working with Draft and Baseline Versions

This section covers an essential workflow feature of Squore: baselining. While it is possible to keep every version of a project created in Squore, you may want to permanently keep analysis results only for particular milestones and work with a continuously updated draft version.

You can decide whether a version is a draft or a baseline when you create it, or after the analysis is finished.

Drafts and Baseline: The Basic Concepts

The most important thing to remember about a draft version is that it is a snapshot of your data at a given time. You can use it to compare the evolution of your project against the last baseline created. There is therefore only one draft version available per project (the latest version), which Squore creates automatically if your previous version was a baseline. A baseline version, on the other hand, is permanently saved and will not be overwritten the next time an analysis is launched.

When you create a draft version, it is always called Current and can be modified in several ways:

- Forms can be updated
- Attribute values can be modified so that a new value is taken into account in the next analysis
- Artefacts can be manually added, modified or deleted
- Folders and files can be relaxed or excluded from the project
- Action Items can have their status changed
- Rules and individual violations can be relaxed

Being able to view draft versions of a project is a user privilege that can be granted to users of a particular role, and so is the ability to baseline a project. For more information about roles, refer to Understanding Profiles and Roles. This means that as a project manager, you can give access to every version to users within your team, but can restrict the project visibility to the rest of the company to show them only baselined versions. You can also decide which members of your team are allowed to change the status of a version from draft to baseline.

Baselining at Version Creation

Use the Automatic Baselining option on the General Information screen of the project wizard to create a draft or baseline as follows:

- When the Automatic Baselining box is unchecked, a draft version is created and all subsequent versions will be draft versions by default.
- When the Automatic Baselining box is checked, a baseline version is created and all subsequent versions will be baseline versions by default.

Baselining After Review

You can use the Baseline option on the Projects page to create a baseline version of the current draft as follows:

- Log into Squore and click Projects.
- Click the Baseline icon next to the project you want to baseline.
- Click the Baseline button to confirm.

After confirming the baseline creation, you are redirected to the Projects page and the last draft version becomes the new latest baseline. All changes made manually to artefacts and findings are kept, and will be incorporated the next time an analysis runs. Note that baselining is only available for users whose role allows the Baseline Projects privilege. For more information about roles, consult Understanding Profiles and Roles.
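The draft and baseline rules described in this section can be summarised as a small state model. The Python sketch below is an illustration under stated assumptions, not how Squore stores versions: it assumes that a new analysis overwrites an existing draft, that a draft is always named Current, and that baselining gives the draft a version name you supply (the `name` parameter is hypothetical). The `automatic_baselining` flag mirrors the wizard option and only sets the default for later analyses.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Version:
    name: str
    baseline: bool


@dataclass
class Project:
    automatic_baselining: bool = False  # mirrors the wizard checkbox
    versions: List[Version] = field(default_factory=list)

    def analyse(self, name: str, baseline: Optional[bool] = None) -> Version:
        """Launch an analysis; `baseline` defaults to the Automatic Baselining setting."""
        if baseline is None:
            baseline = self.automatic_baselining
        if self.versions and not self.versions[-1].baseline:
            self.versions.pop()  # only one draft per project: it is overwritten
        version = Version(name if baseline else "Current", baseline)
        self.versions.append(version)  # baselines are never overwritten
        return version

    def baseline_current(self, name: str) -> Version:
        """Mimic the Baseline icon: the current draft becomes the latest baseline."""
        if not self.versions or self.versions[-1].baseline:
            raise ValueError("there is no draft version to baseline")
        draft = self.versions[-1]
        draft.name, draft.baseline = name, True
        return draft
```

Running two draft analyses after a baseline leaves exactly two versions, the baseline and a single Current draft, which is the behaviour the section describes.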

Baselining manually is useful if you have reviewed the current draft and have not made any changes to the analysis results. If you have modified form entries or relaxed artefacts and findings in a way that should impact the rating, consider launching a new build or using the Apply Changes button instead of baselining. See Handling Manual Modifications for more information.

Handling Manual Modifications

When you have made changes to form values or you have relaxed artefacts and findings in the current draft, there are two ways to get these changes reflected in the dashboard:

- running a new analysis
- clicking Apply Changes

Running a new analysis will allow you to change the source code repository settings and input files for data providers, or keep them. You can choose if this new analysis should produce a baseline or a draft version. In all cases, the artefacts you relaxed or excluded, the action items you modified and the findings you relaxed are taken into account to produce the rating of the new version.

Clicking Apply Changes allows you to merge the manual modifications to artefacts, forms, action items and findings into a new draft version without reanalysing source code and re-running data providers.

Manual modifications are simply merged with the existing results to update the rating, which is a lot faster than running a full analysis.

Note that Apply Changes is not available when you have excluded artefacts.

You can also use Apply Changes after modifying your analysis model to migrate a project to the new version of a model without running a full analysis.
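The choice between the two update methods described in this section comes down to two questions, sketched here as a hypothetical Python helper. It encodes only the constraints stated above: Apply Changes does not reanalyse source code or re-run Data Providers, so it cannot pick up new sources or input files, and it is not available when artefacts are excluded.

```python
def update_method(excluded_artefacts: bool, new_sources_or_inputs: bool) -> str:
    """Pick how to reflect manual modifications in the dashboard (illustrative only)."""
    if excluded_artefacts:
        return "run a new analysis"  # Apply Changes is unavailable
    if new_sources_or_inputs:
        return "run a new analysis"  # only a full analysis reads new inputs
    return "Apply Changes"  # merge manual edits into a new draft, much faster
```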

Can I Make Changes to My Project?

When you create a new version of a project, you can make the following changes:

-

Changes to attribute values

-

Changes to source code locations

-

Changes to some of the Data Provider options

Project attributes are always editable when creating a new version of a project, except for the name of the project.

The location of the source code can always be modified. When editing a project, you can also add more source locations as needed, following the steps described in Can I Create Projects with Sources From Multiple Locations?.

Whether you can edit the settings used in the Data Providers for the project depends on their ability to support edits. This ability is defined by a Squore administrator via the configuration of the Squore wizards. For more information, refer to the Squore Configuration Guide.

Can I Create a Project Via the Command Line?

Instead of creating a project from the Squore web interface, you can create a project directly from the command line using Squore CLI. Squore CLI is a client for Squore that enables you to create and analyse projects locally and send the results to Squore Server. Alternatively, you can use Squore CLI to instruct Squore Server to carry out the analysis.

If you have installed Squore CLI on your computer, you can call it using Java, passing the parameters you would have passed in the web interface to create projects. The following is an example of the command line you can use to create a project using Squore CLI on Windows:

```bat
@echo off
java -Dsquore.home.dir="%SQUORE_HOME%" ^
  -jar "%SQUORE_HOME%\lib\squore-engine.jar" ^
  --url=http://localhost:8180/SQuORE_Server ^
  --commands=DELEGATE_CREATION ^
  --name=Mars2 ^
  --repository "type=FROMPATH,path=\server\share\samples\c\Mars2\V3.2.6" ^
  --color=rgb(103,25,237) ^
  --version=1.0 ^
  --login=demo ^
  --password=demo ^
  --filter=APPLICATION,MEASURE,LEVEL ^
  --wizardId="ANALYTICS" ^
  --dp "type=SQuORE"
echo done
pause
```

The example above shows how to specify commands, parameters and project options to Squore CLI. It creates a project named Mars2 in version 1.0, analysing source code located in \server\share\samples\c\Mars2\V3.2.6 with the Data Provider SQuORE (the internal name for Squan Sources).

You can find more information about using Squore CLI in the Command Line Interface manual, which explains how to install the client and create projects.
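If you call Squore CLI from a script rather than a batch file, the same command can be assembled as an argument list. The following Python sketch is illustrative only: build_squore_cli_command and run_squore_cli are hypothetical helper names, not part of Squore, and every option value is copied unchanged from the batch example above. Refer to the Command Line Interface manual for the full list of supported options.

```python
import os
import subprocess


def build_squore_cli_command(squore_home: str) -> list[str]:
    """Return the argument list for the Squore CLI call shown in the batch example.

    Only the Python wrapping is new; the options are copied from the example.
    """
    jar = os.path.join(squore_home, "lib", "squore-engine.jar")
    return [
        "java",
        f"-Dsquore.home.dir={squore_home}",
        "-jar", jar,
        "--url=http://localhost:8180/SQuORE_Server",
        "--commands=DELEGATE_CREATION",
        "--name=Mars2",
        "--repository", r"type=FROMPATH,path=\server\share\samples\c\Mars2\V3.2.6",
        "--color=rgb(103,25,237)",
        "--version=1.0",
        "--login=demo",
        "--password=demo",
        "--filter=APPLICATION,MEASURE,LEVEL",
        "--wizardId=ANALYTICS",
        "--dp", "type=SQuORE",
    ]


def run_squore_cli() -> int:
    # SQUORE_HOME must point at the Squore CLI installation, as in the batch file.
    cmd = build_squore_cli_command(os.environ["SQUORE_HOME"])
    # No shell is involved, so parentheses and backslashes need no escaping.
    return subprocess.run(cmd, check=False).returncode
```

Calling run_squore_cli() returns the exit code of the Java process, which a calling script can check before continuing.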

How Do I Connect Squore to My Continuous Integration System?

If you use a Continuous Integration tool like Jenkins or CruiseControl, you can add Squore to your build process and analyse projects every time your code is compiled. This requires the installation of Squore CLI on the continuous integration server, and is therefore described in greater detail in the Command Line Interface manual.

Can Squore Pull Source From My Version Control System?

The source code analysed by Squore does not have to be located on the same machine as Squore Server or Squore CLI. When you create a project, you get the option to choose from a range of Repository Connectors to pull source code from:

-

Direct file system access (local drive, network share, mass storage media…)

-

Zip upload

-

A ClearCase view

-

A CVS checkout

-

A GNAThub database

-

Git cloning

-

An Integrity repository

-

A Perforce depot

-

A Subversion revision

-

A Synergy database

-

A TFS server

Each option requires different parameters, which can be specified from the project wizard, or via the command line. For more information, refer to Repository Connectors.

Can I Create Projects with Sources From Multiple Locations?

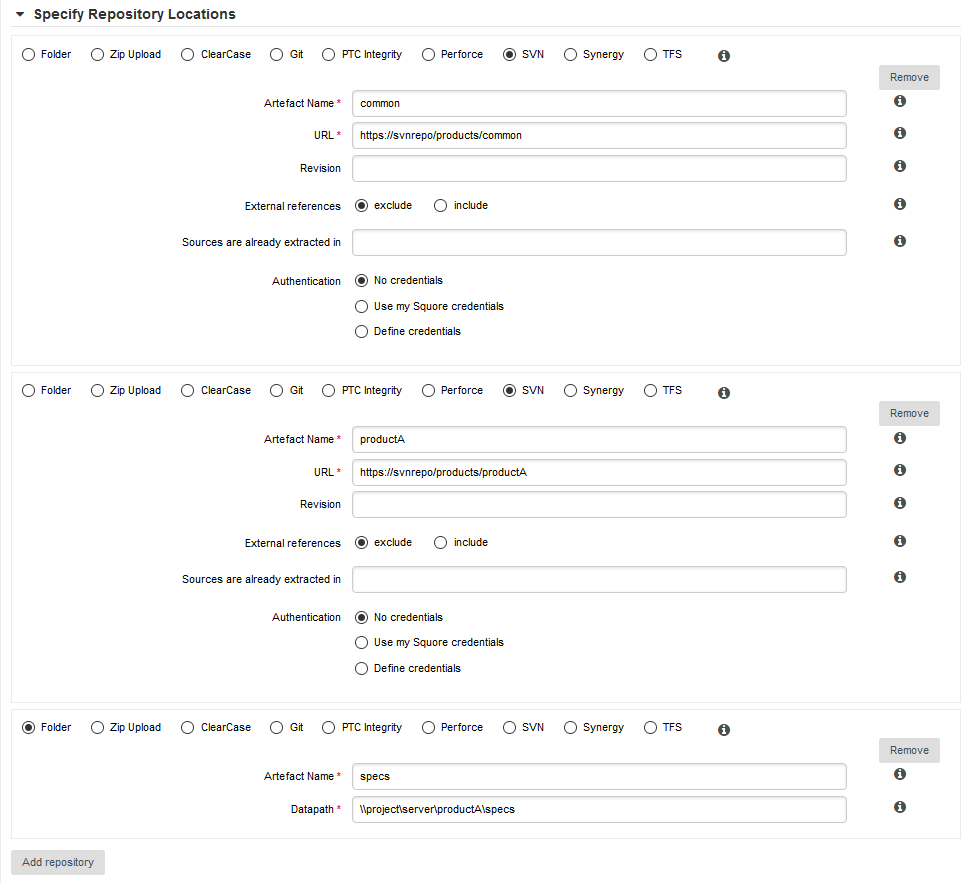

Squore provides support for analysing projects whose sources are spread over several locations or version control systems. If your source code resides in /products/common and /projects/myproject, you can specify these two locations in the Squore project wizard by clicking the Add Repository button. Similarly, if some of your code is managed by an SVN repository and the rest is handled by a Git server, you can configure both locations as part of the same project, as shown below:

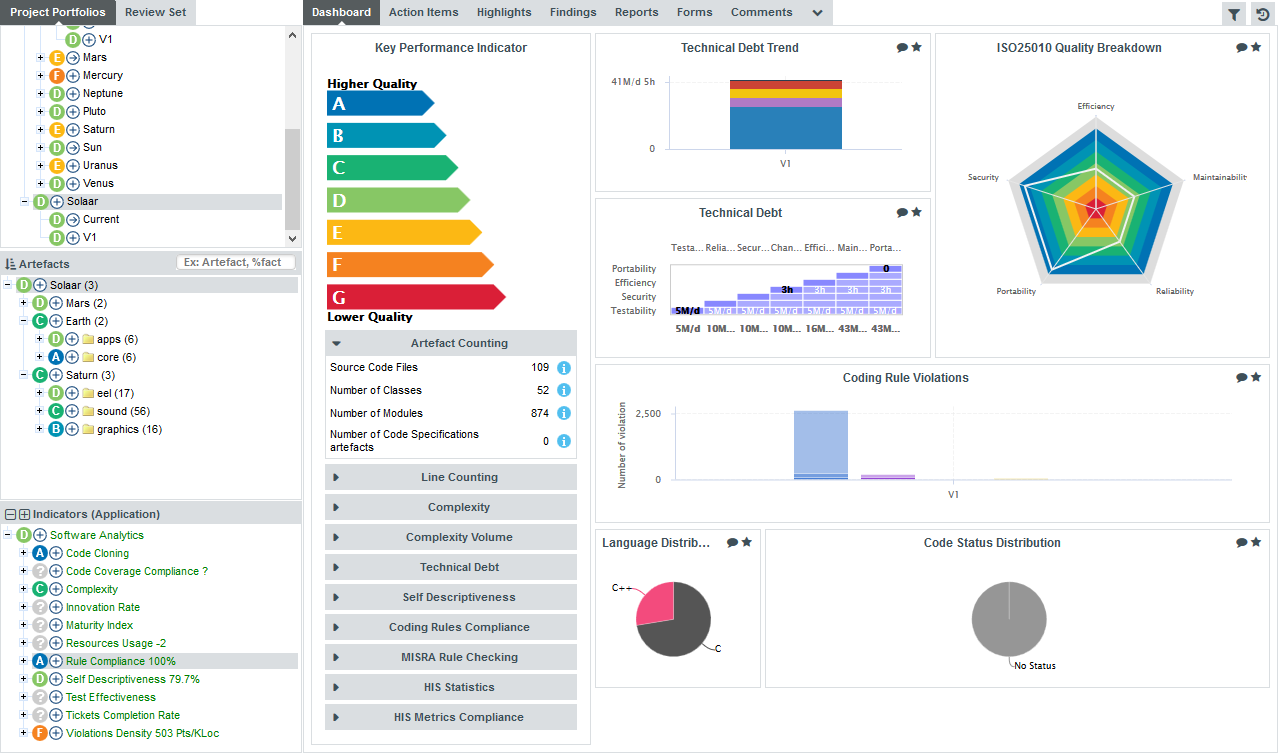

Where Are My Analysis Results?

Now that you have created a project, you are ready to start reviewing the analysis results in the main section of Squore, the Explorer, which consists of a set of trees for browsing through project artefacts and various dashboards to display the information associated with these artefacts.

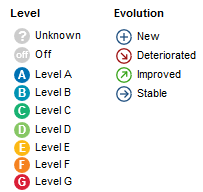

Common icons are used throughout the explorer to indicate the rating of a component and its evolution compared to the previous version. The image below shows the meaning of the different icons used:

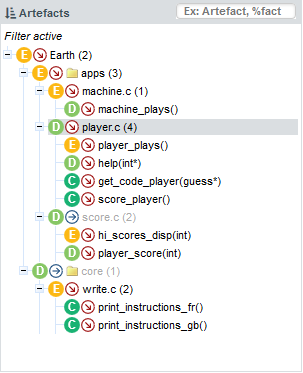

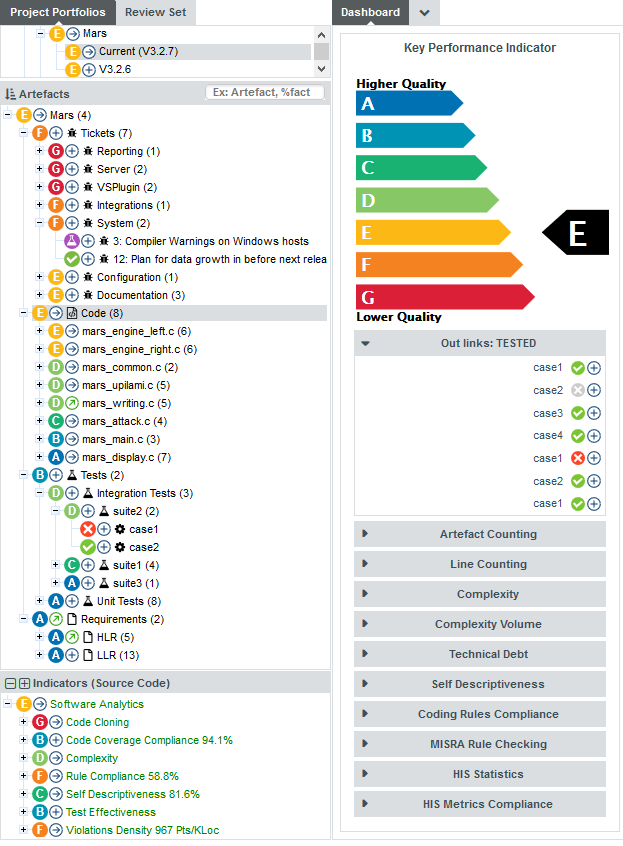

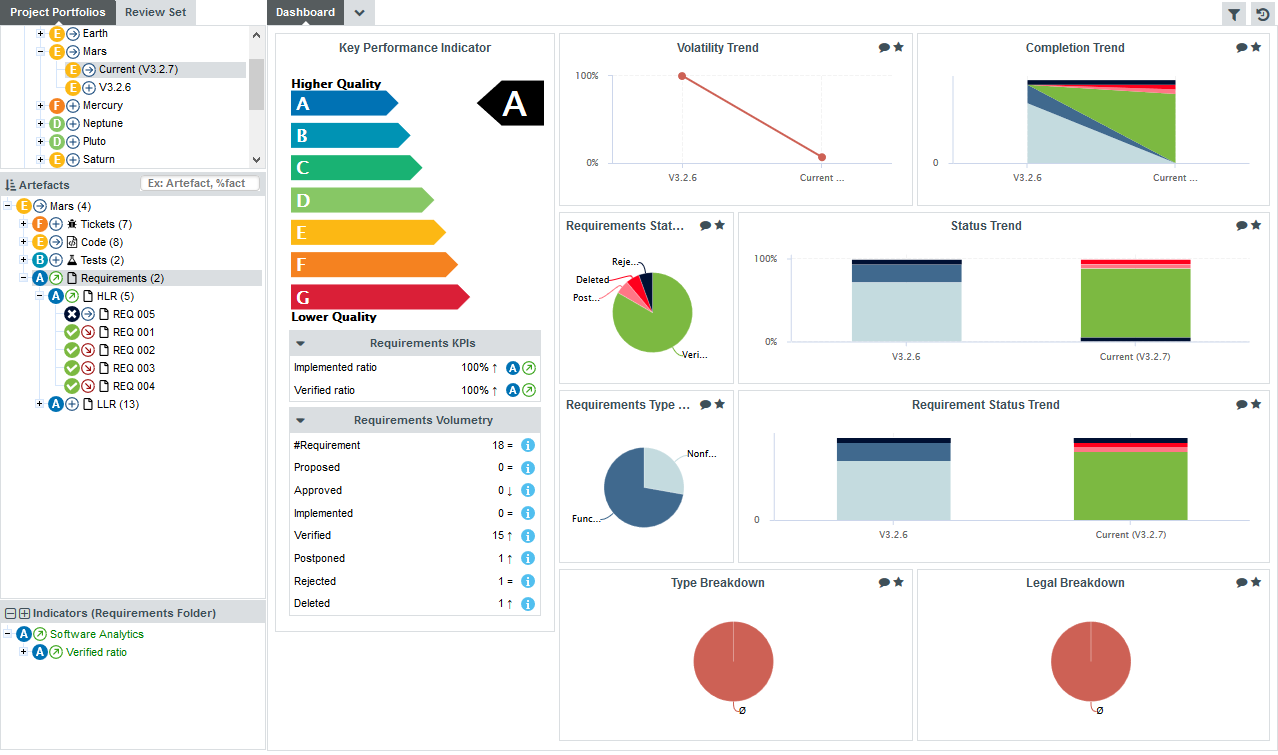

The Tree Pane

The left-hand part of the Explorer is a three-panel section containing expandable trees.

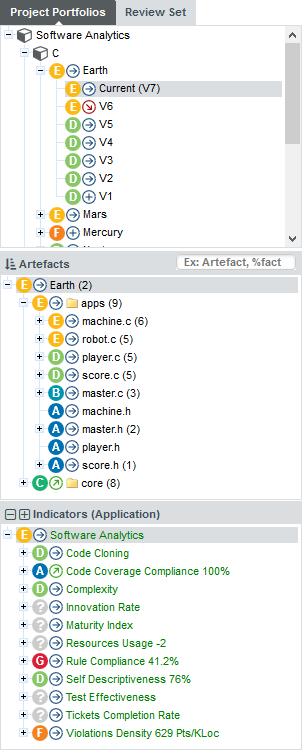

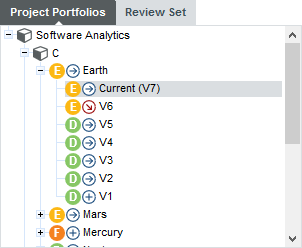

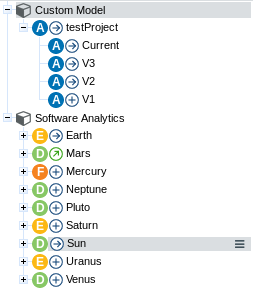

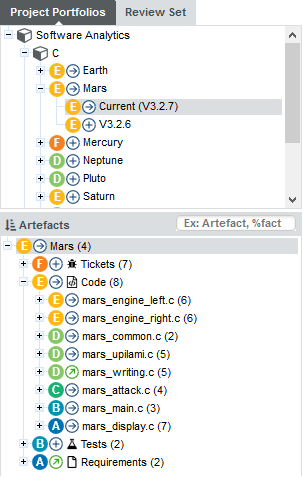

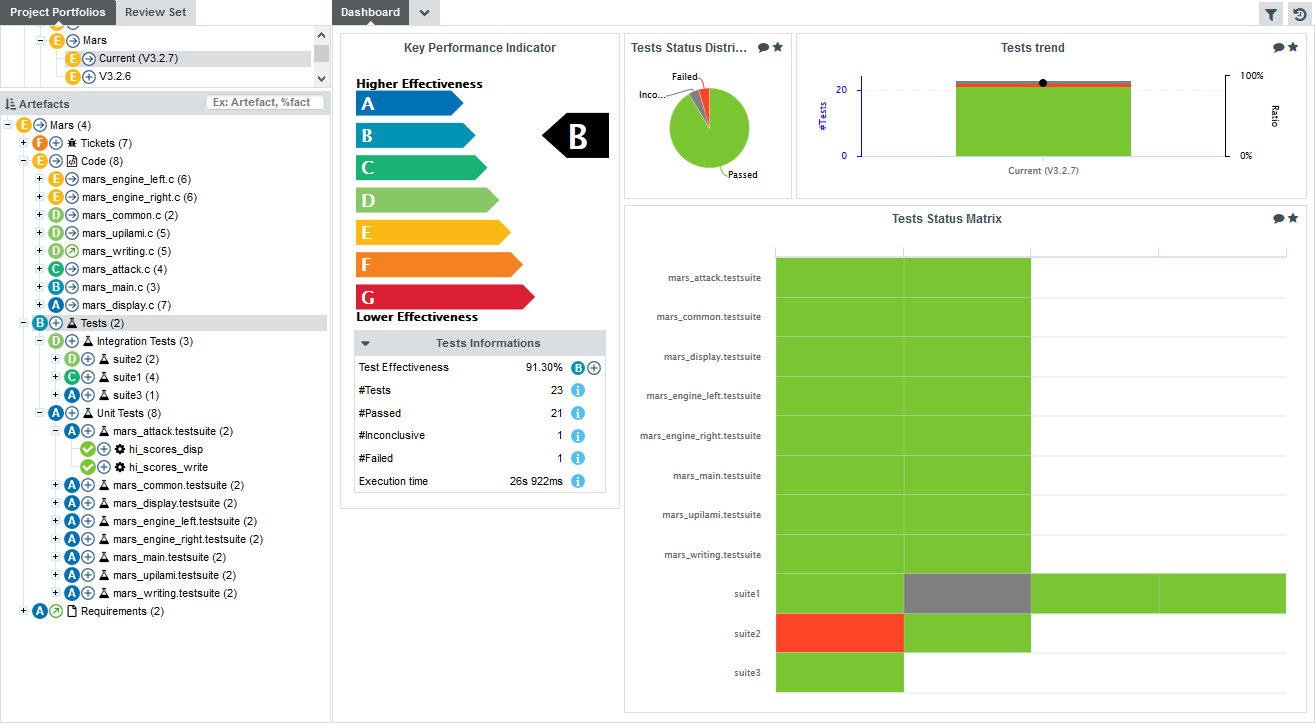

The Project Portfolios is a list of all the projects you have access to, grouped by analysis model. Each project is listed with its latest rating and evolution and can be expanded to show all versions of the project that were analysed with Squore.

The Review Set is a flat list of artefacts you collect from various projects in order to review them. This list is preserved when you log out and log back in to Squore.

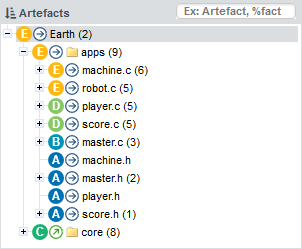

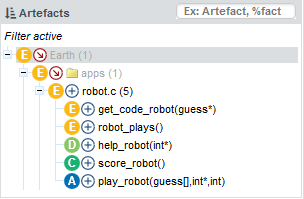

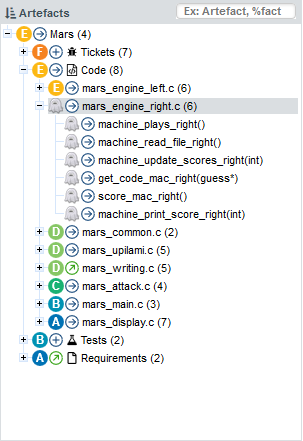

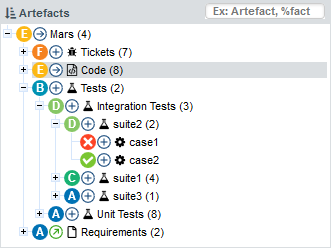

The tree in the middle panel is the Artefact Tree. When Squore analyses a project, it breaks it down into artefacts of various configurable types, down to the function level for source code, according to the analysis model used. The artefacts in the tree are displayed for the version selected in the Project Portfolios. Clicking a different version of a project refreshes the artefact tree with the ratings for the version just selected. Above the artefact tree are tools for sorting and searching artefacts. Each artefact is displayed with its current rating and can be expanded to reveal child artefacts if available. The number in brackets indicates the number of child artefacts for the current artefact. You will learn about these tools later in Has the Quality of My Project Decreased Since the Previous Analysis? and How Do I Find and Keep Track of Artefacts?.


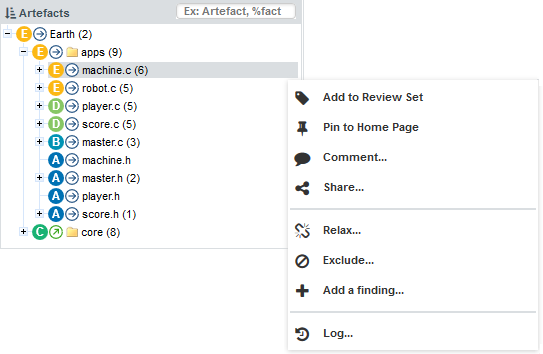

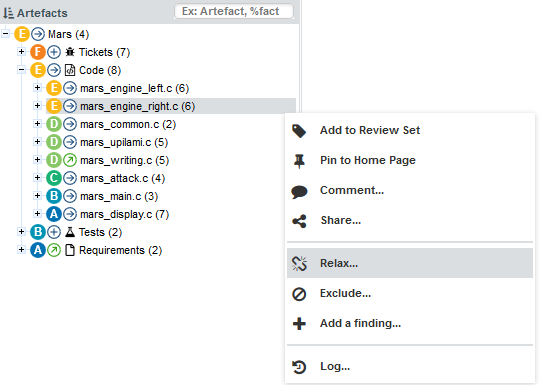

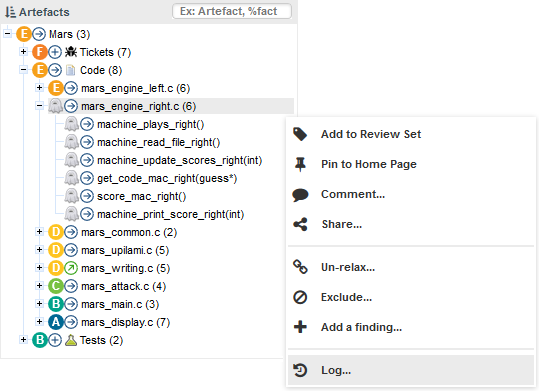

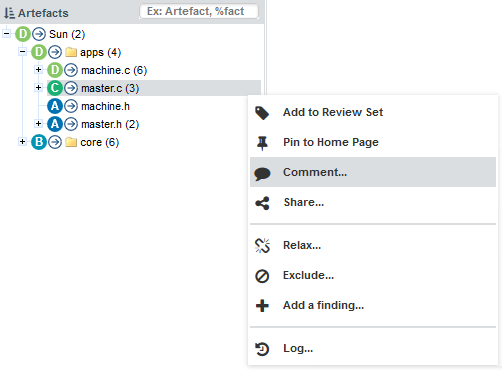

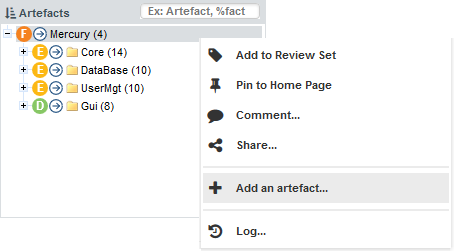

You can also interact with artefacts in the tree by using the Artefact Context Menu which can be accessed by hovering over an artefact name and clicking the menu icon.

The Artefact Context Menu


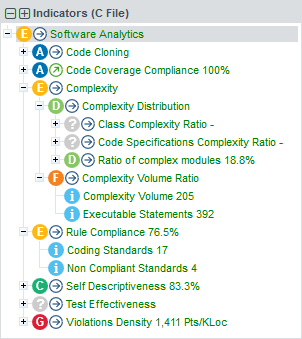

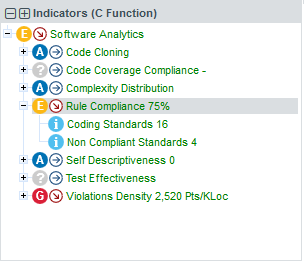

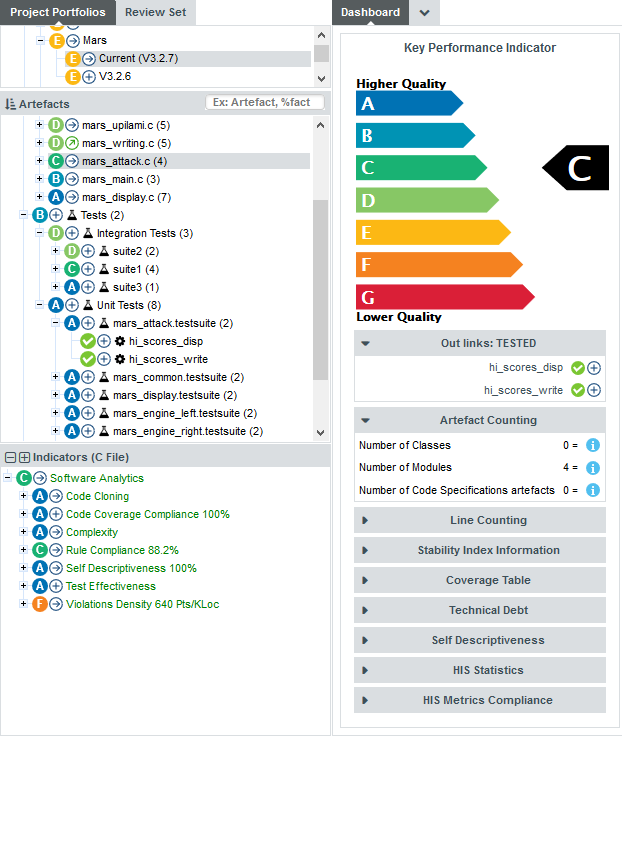

The bottom panel is the Indicator Tree, in which ratings for the indicators defined in the analysis model at the current level are displayed. Each indicator can be expanded to display the rating of each of its sub-indicators. The Indicator Tree displays statistics for the artefact currently selected in the Artefact Tree and refreshes when the selection changes. The type of the selected artefact is indicated in brackets. Two shortcut buttons above the top node let you quickly expand and collapse the entire tree.

Clicking one of the tree nodes reveals more information about the indicator, including the formula used by Squore to compute its value and rating.

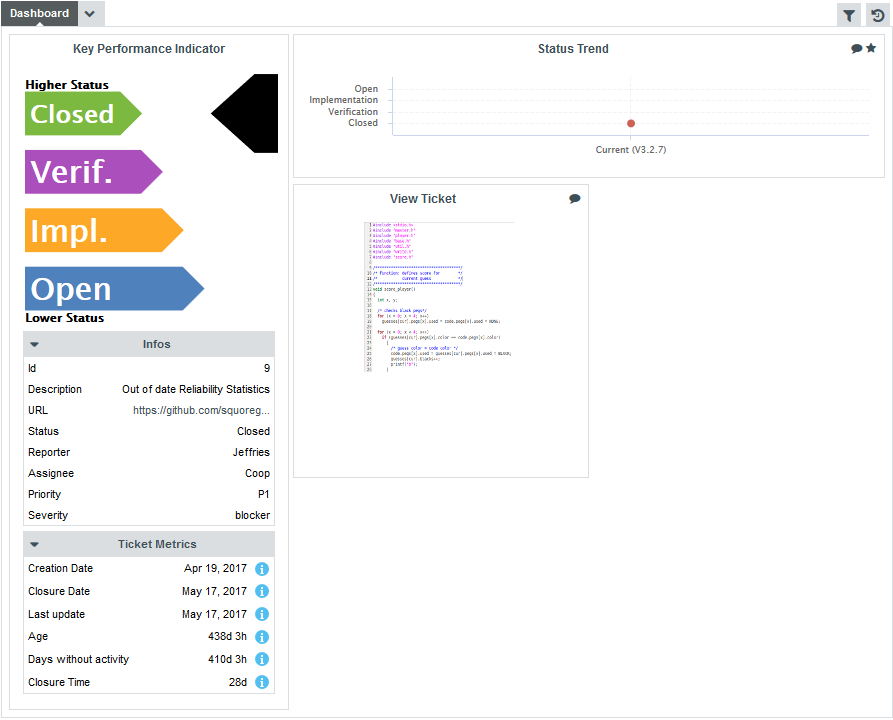

The Dashboards

The right-hand side of the Squore Explorer contains a series of tabs, the first of which is the Dashboard. The Dashboard is dynamic and always displays information about the artefact currently selected in the artefact tree. There is not one Dashboard, but a Dashboard per node in the tree. Additionally, Dashboards can be customised by a Squore administrator so that users see different Dashboards according to their groups and to their role in a project, thus highlighting different information for project managers, quality engineers and developers for example. Ask your Squore administrator about Dashboard customisation, or refer to the Squore Configuration Guide for more information.

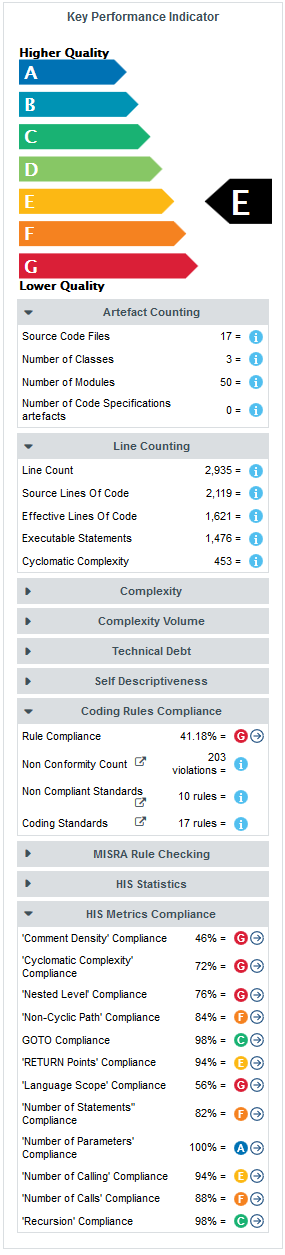

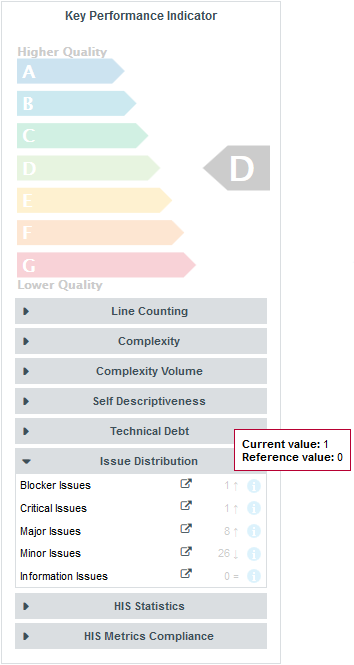

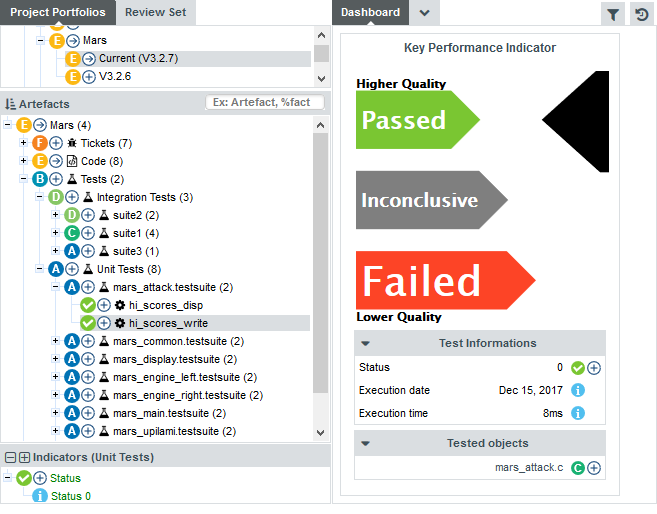

The left-hand area of the Dashboard contains the score card, which consists of a graphical representation of the key performance indicator for the current artefact, and some tables highlighting key metrics about the project.

Each table line displays a series of details about a metric:

-

The name of the metric (e.g. Rule Compliance). When clicked, a popup shows the way the metric is computed. Optionally, some metrics may allow an extra link to be displayed. This link shows the list of findings taken into account when calculating this metric (See Non Conformity Count).

-

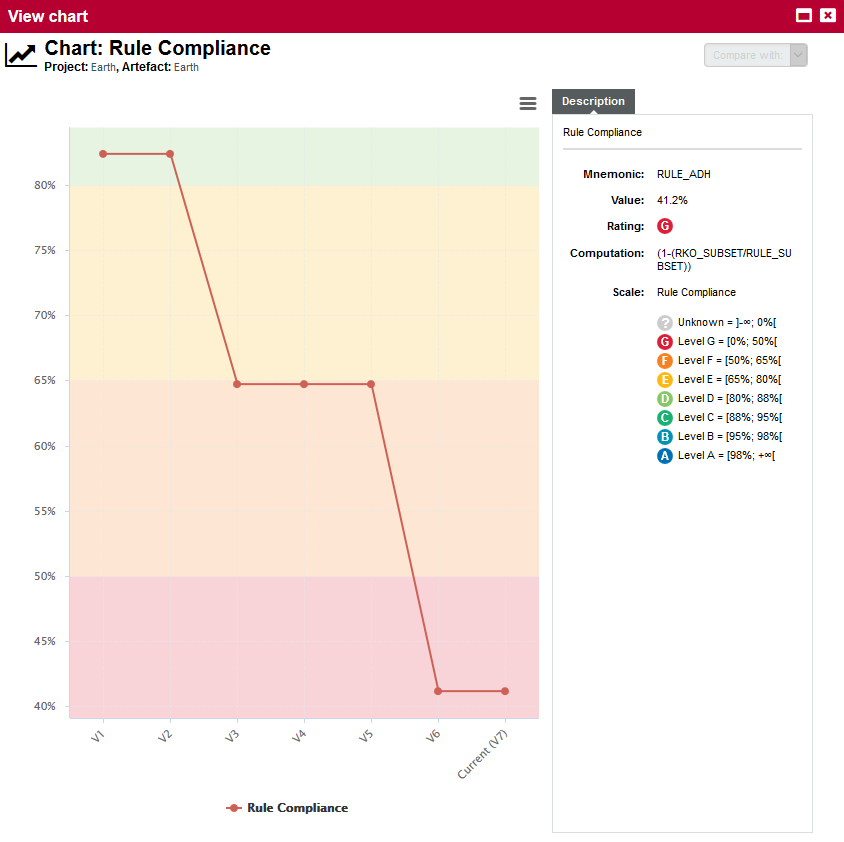

The raw value of the metric and its evolution compared to the previous version (e.g. 41.18% ↓). Clicking a value in this column displays a chart of the last 10 values recorded for this metric.

-

If the metric displayed is an indicator, the rating of the indicator is displayed, along with its evolution (e.g. Level G, deteriorated). If the metric is a measure, then an information icon is shown. In both cases, you can click the information in this column to display more details about how the metric is computed.

The right-hand area of the Dashboard contains a series of charts representing key information about the current artefact. Clicking a graph opens a larger version of the image so you can analyse the data. Note that the available charts will differ depending on the type of artefact selected in the tree. Files and functions also include a Source Code link at the top right corner (for users who have the privilege to browse source code), which does not appear in the Dashboard for folders and applications.

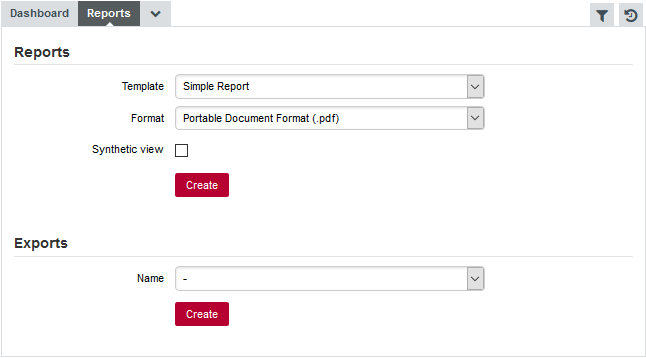

The Dashboard is only the first of a series of tabs in the Explorer. In the following chapter, you will find out more about the role of the Action Items, Highlights, Findings, Forms, Reports, Indicators, Measures and Comments tabs. Note however that like the Dashboard, the information displayed in each tab is always relative to the node currently selected in the Artefact Tree.

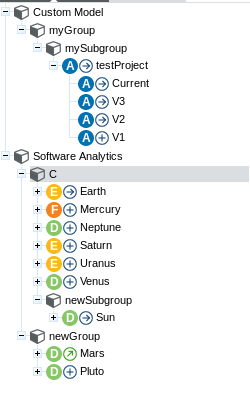

Organising Projects

By default, projects are grouped by analysis model in the Project Portfolios.

However, you can create groups and subgroups to organise the project list.

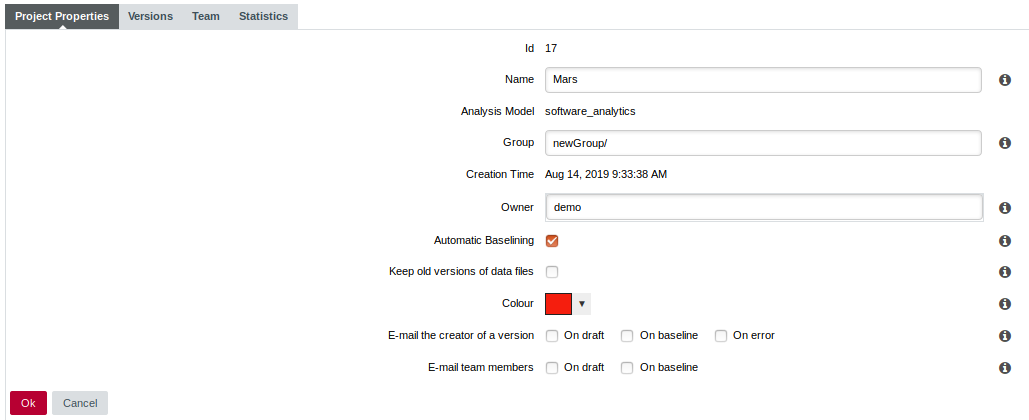

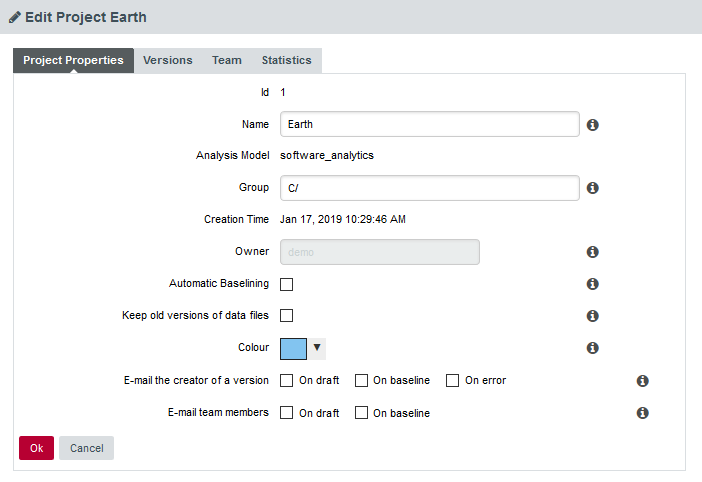

To do so, click "Manage" for a given project and, in the "Project Properties" tab, fill in the "Group" field:

You can create subgroups as well by typing the full path to your group, for example "myGroup/mySubgroup".

To remove a project from a group just delete the content of the "Group" field. If a group becomes empty after removing its last project, it will be automatically removed from the Project Portfolios.
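The Group field behaves like a path: each segment separated by / creates one level of nesting. The Python sketch below is a conceptual model of that behaviour, not Squore code; the input (a mapping from project name to Group value) and the function name build_group_tree are assumptions made for illustration, and the sketch ignores the default grouping by analysis model. Because groups are derived only from the projects that reference them, a group with no remaining project simply disappears, which matches the behaviour described above.

```python
def build_group_tree(projects: dict[str, str]) -> dict:
    """Nest project names under the groups named in their Group field.

    projects maps a project name to its Group value ("" means no group).
    Each node is {"projects": [...], "groups": {name: node}}.
    """
    tree: dict = {"projects": [], "groups": {}}
    for name, group in sorted(projects.items()):
        node = tree
        # "myGroup/mySubgroup" -> ["myGroup", "mySubgroup"]; empty segments are skipped.
        for part in filter(None, group.split("/")):
            node = node["groups"].setdefault(part, {"projects": [], "groups": {}})
        node["projects"].append(name)
    return tree
```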

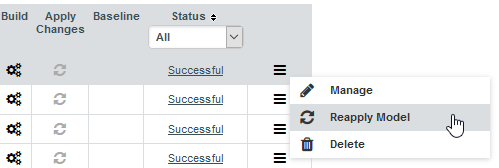

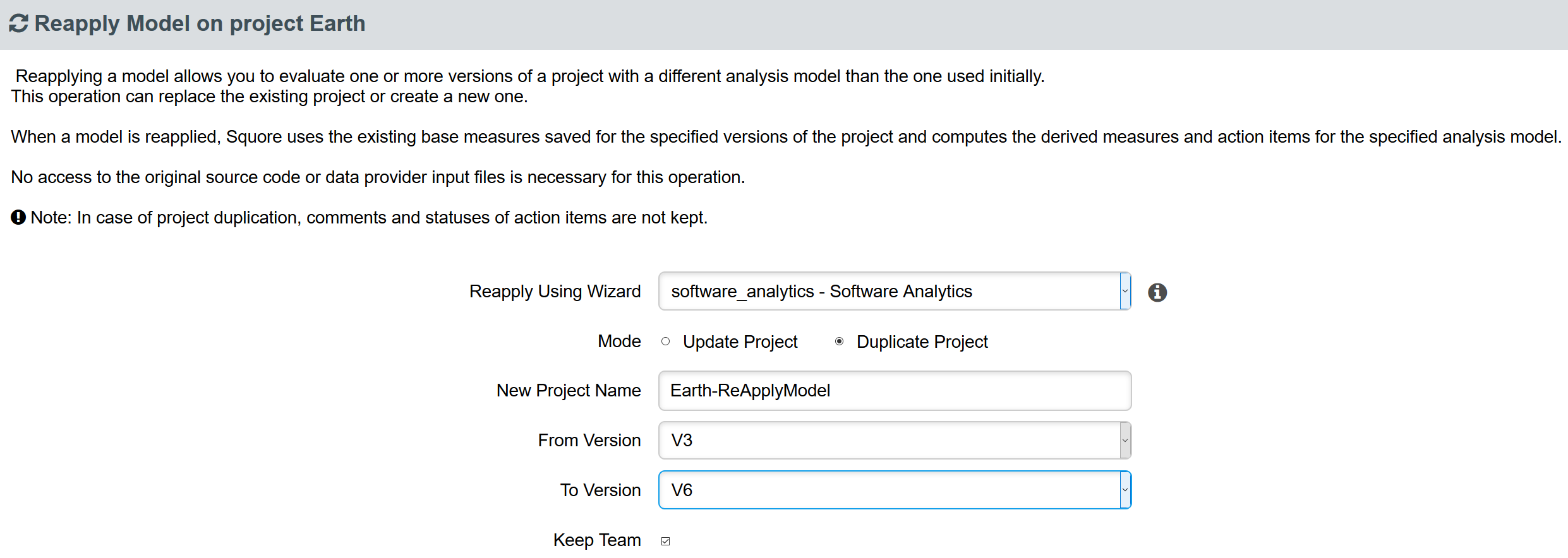

Reapply Model On Projects

This feature enables you to apply a new model, or an updated one, on an already existing project. This is done by using the project data in the database, without executing Repository Connectors and Data Providers.

Go to the "Projects" page, open the menu of the desired project and click "Reapply Model":

In the Reapply Model menu page, choose the desired option:

-

Update Project: will apply the new model onto the existing project, hence replacing it.

-

Duplicate Project: will create a new project from the selected one and then apply the new model onto the duplicate.

In case of a duplication, the following options are available:

-

New Project Name: the name of the new project.

-

From Version: the project version from which the reapply will start.

-

To Version: the project version until which the reapply will be done.

-

Keep Team: whether or not the new project keeps the same team setup as the existing one.

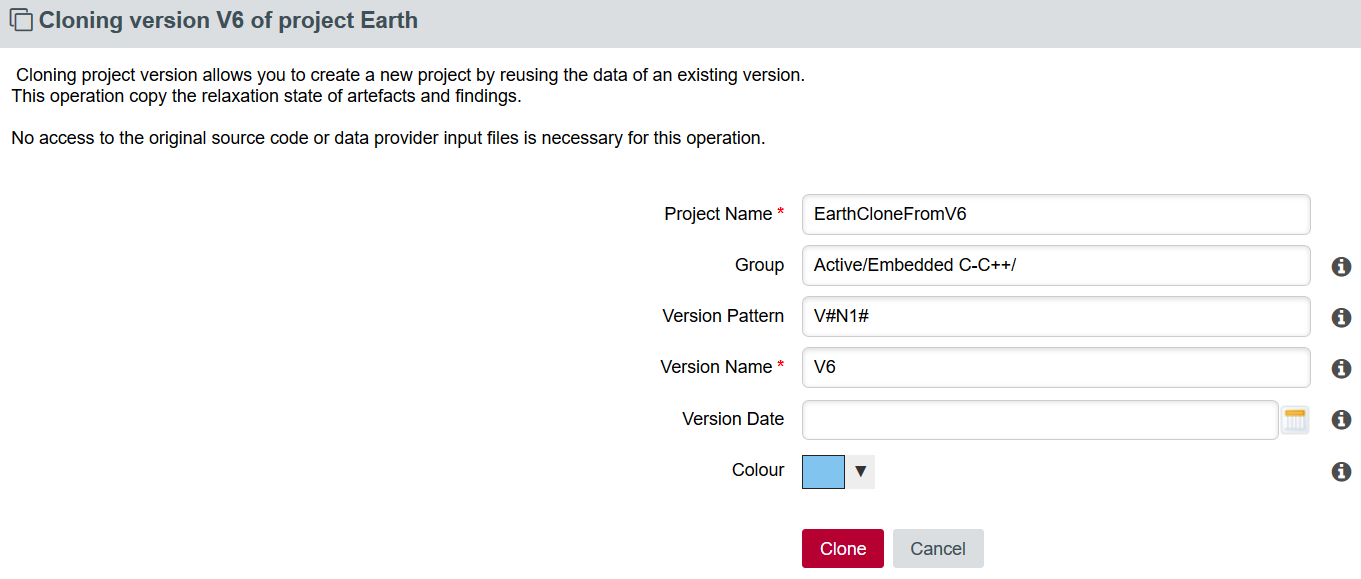

Cloning Projects

This feature enables you to branch a project while keeping all its version build information (relaxation states, milestones, etc.). The first version of the new branched project is the version from which the clone was created.

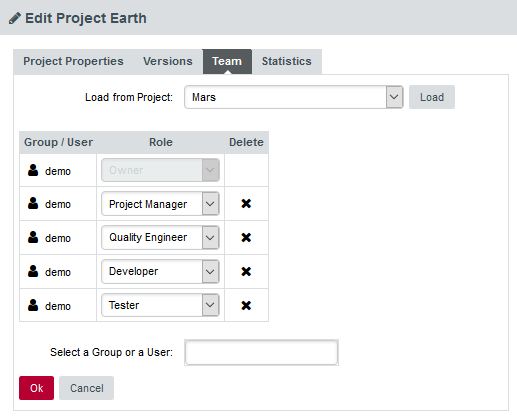

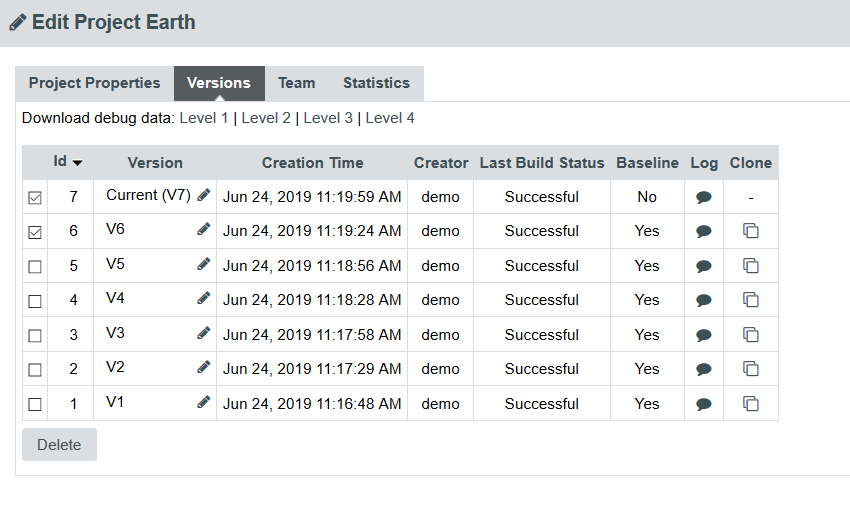

Go to the "Projects" page, click "Manage" on the desired project and use the "Clone" column in the "Versions" tab:

On the Clone project page, set up your project options:

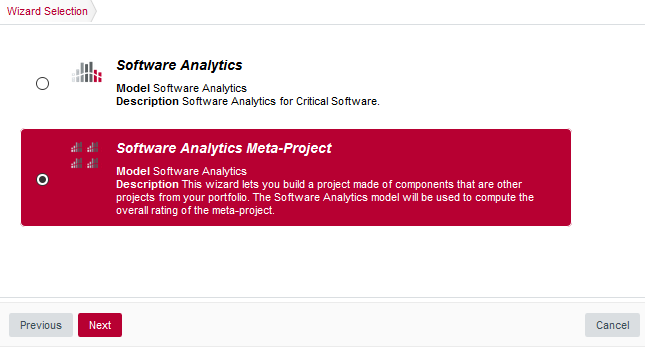

Creating Meta-Projects

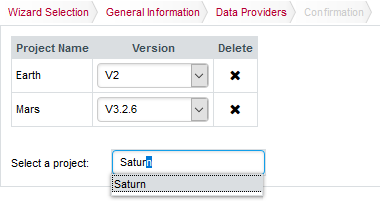

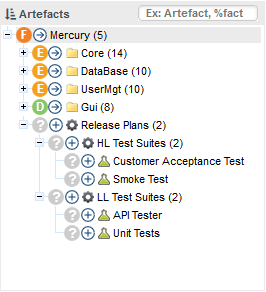

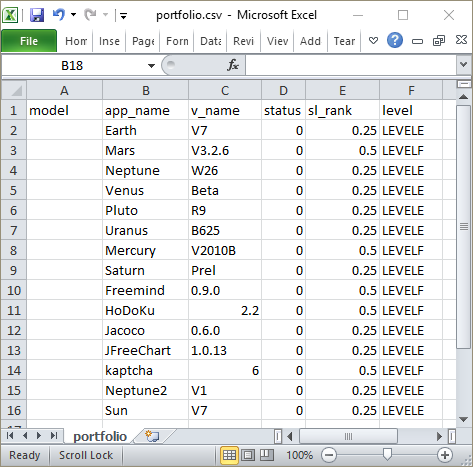

In contexts where your projects reuse code from other projects that you also analyse in Squore, you can create a meta-project that will show the analysis results from the various software bricks in a single project.

When you select a wizard that allows you to create a meta-project, you do not have to specify any locations for source files or any data providers to run. Instead, you are presented with a project picker that allows you to tell Squore which sub-projects compose your meta-project. In the example below, we will create a project that uses Earth (V2), Mars (v3.2.6), and Saturn (Prel) as its parts. You can choose any baseline version of any project you have access to in Squore as a component of your meta-project.

When the analysis finishes, the meta-project is listed along with the other projects in the Project Portfolios. You can expand the Artefact Tree for your meta-project to browse the artefacts of the three sub-projects that are part of your meta-project, and consult all their Action Items, Findings and Highlights.

5. Understanding Analysis Results

This chapter describes the main features available in the Explorer. By the end of the chapter, you should be able to make the most of the information and decisions presented by Squore and start applying them to improve your development practices.

Has the Quality of My Project Decreased Since the Previous Analysis?

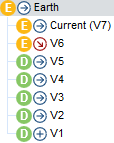

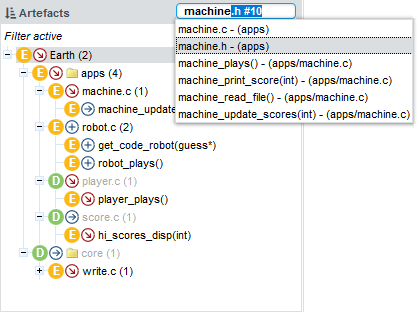

After completing the analysis of a new version of your project, you will probably want to investigate how it has evolved, more specifically for which artefacts the quality has decreased. Let’s look at the history of the Earth Project (which should be available if your Squore administrator has created the sample projects shipped with the Squore installation) to find out how to spot the worst-scored components in your project.

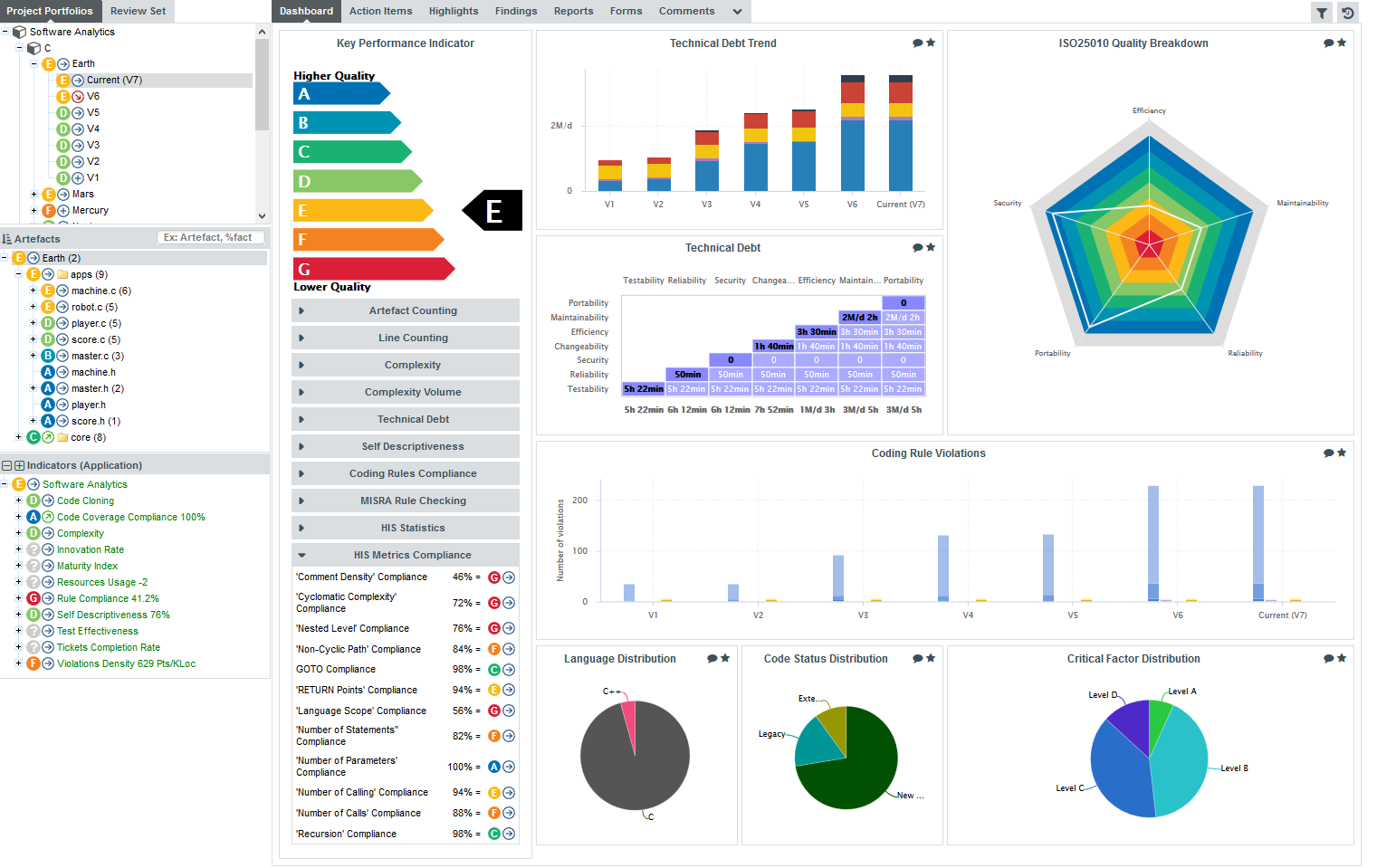

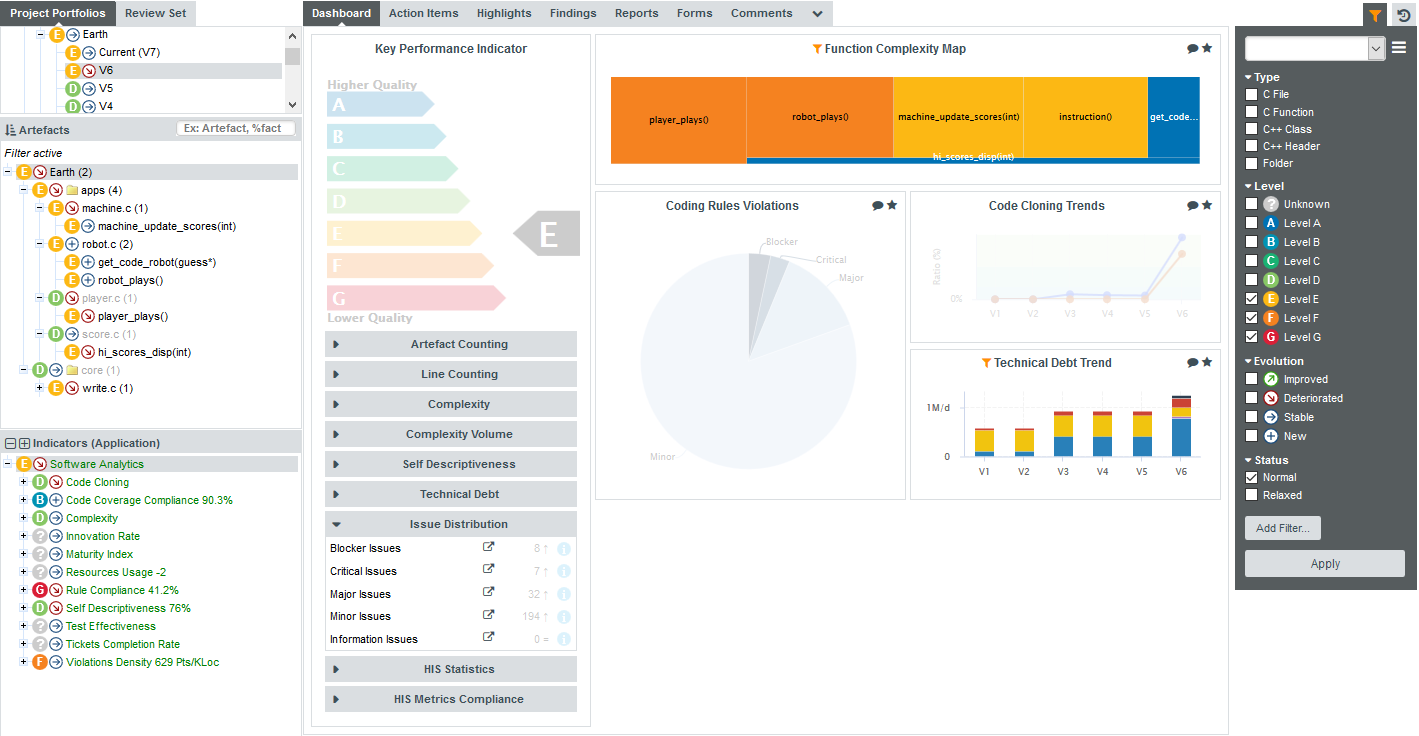

Log into Squore as the demo user using demo/demo and observe the evolution of the Earth project in the Project Portfolios:

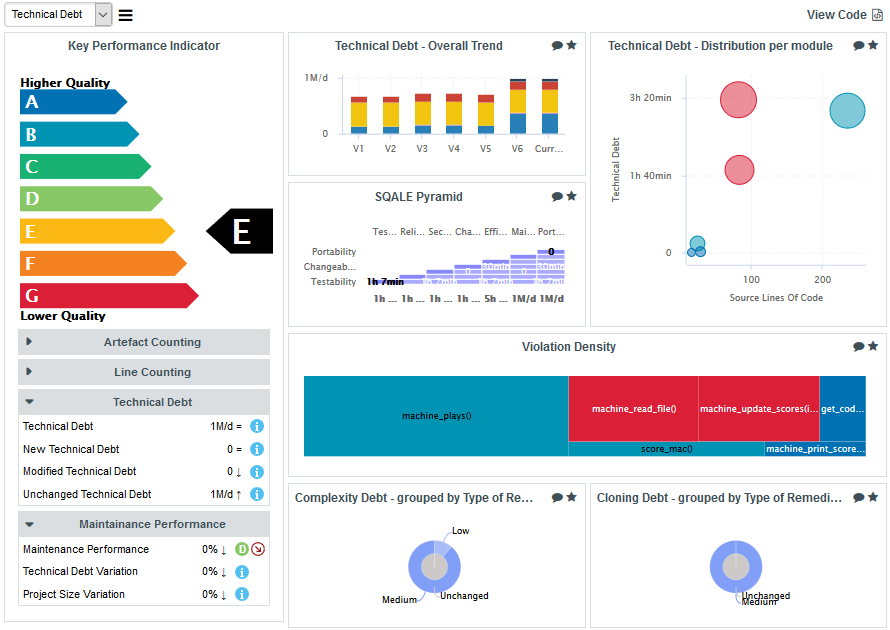

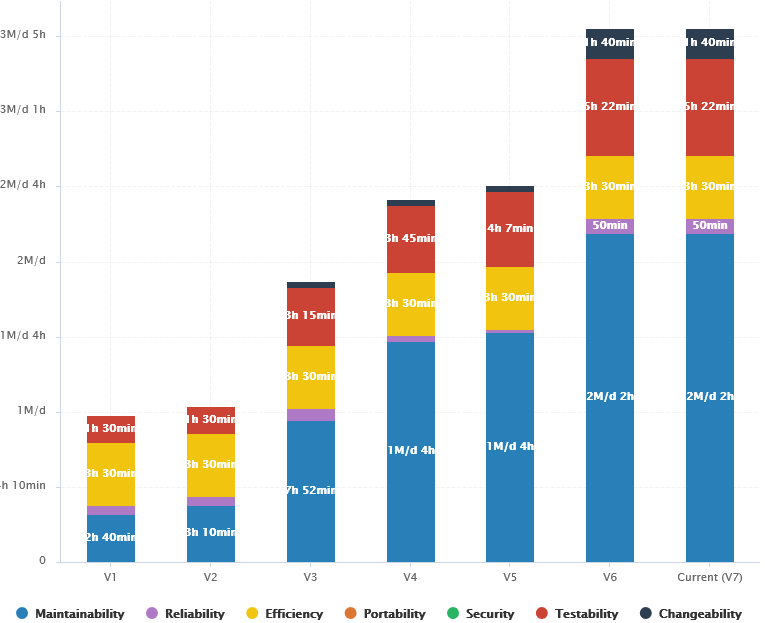

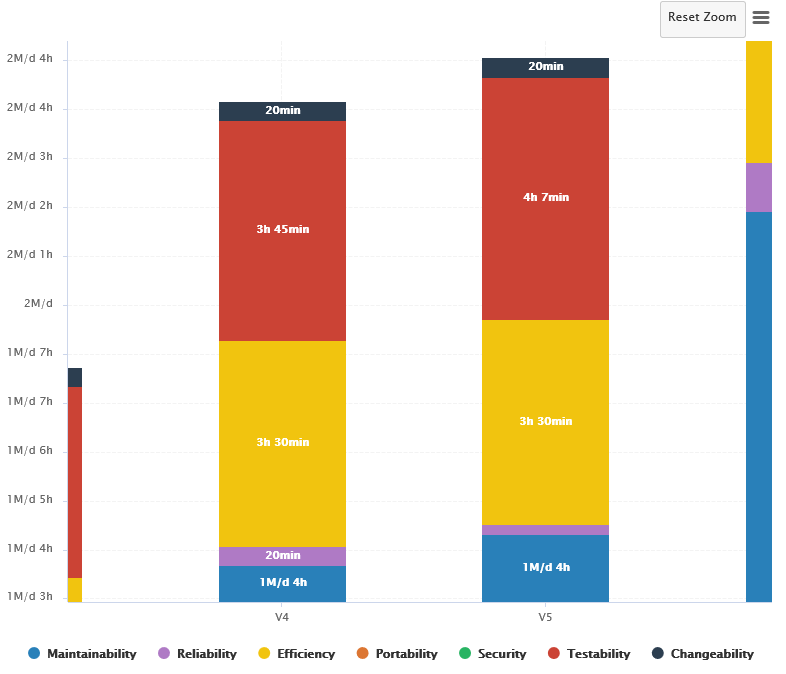

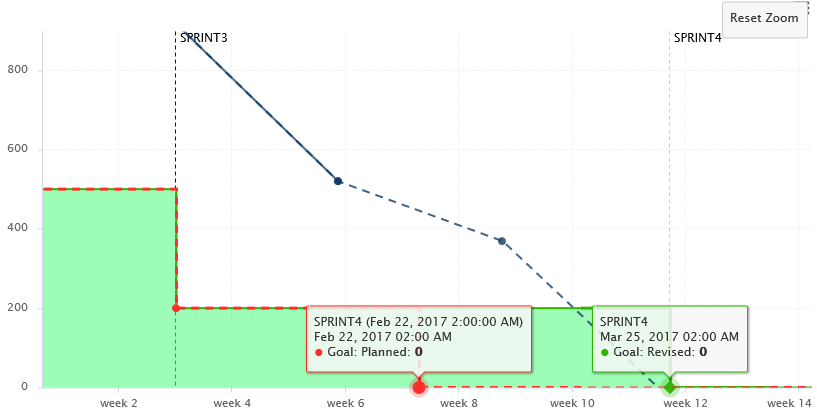

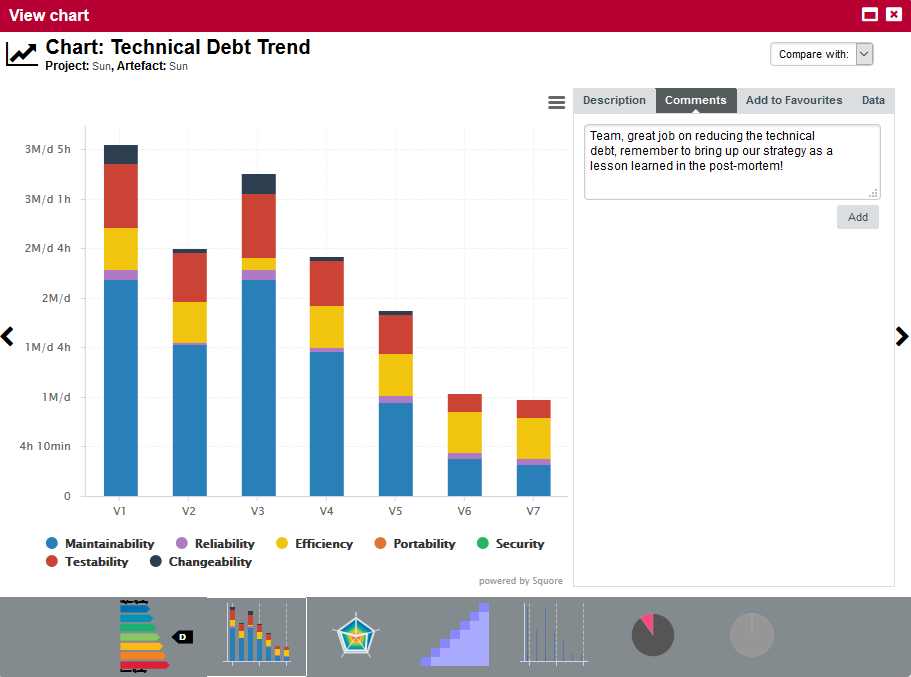

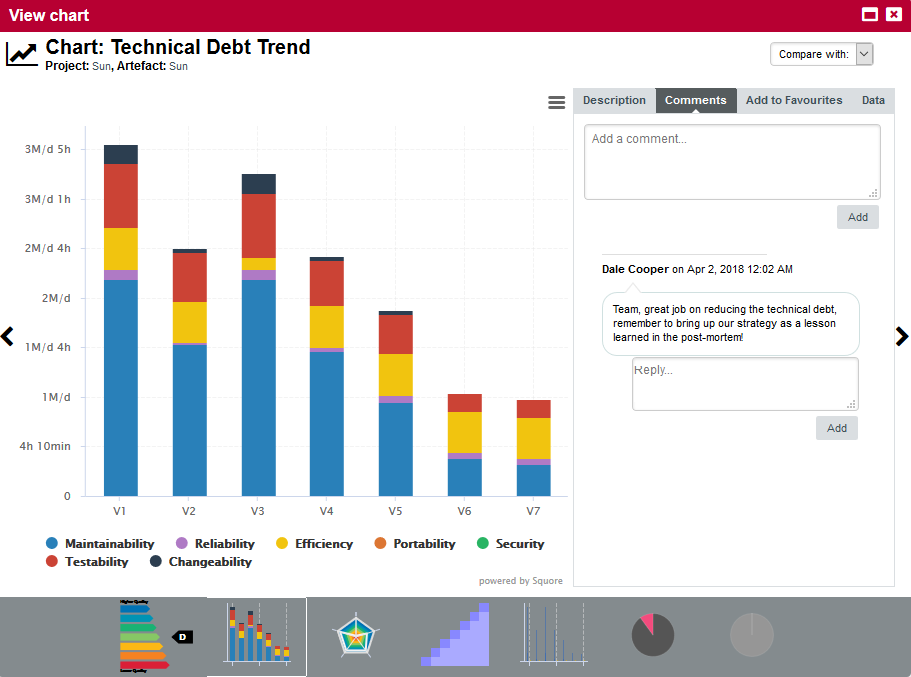

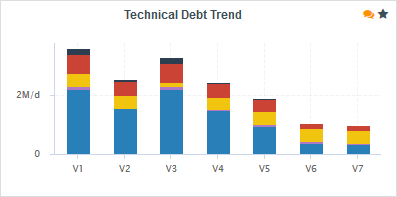

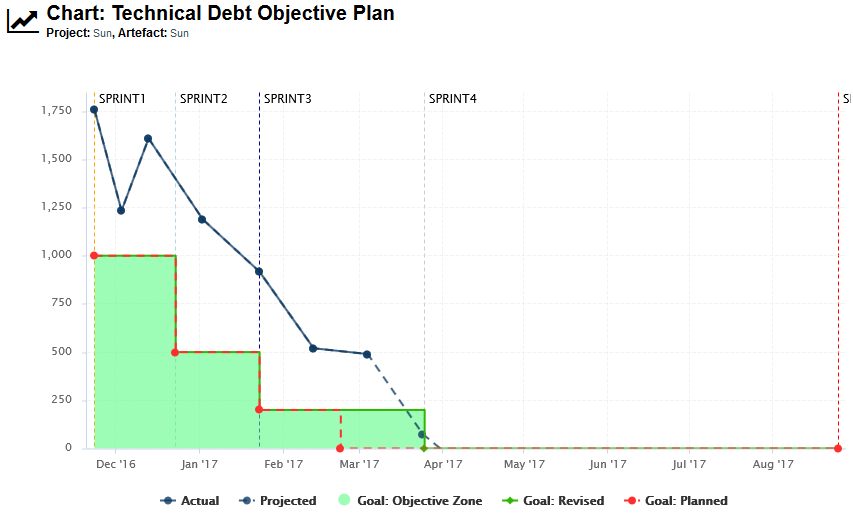

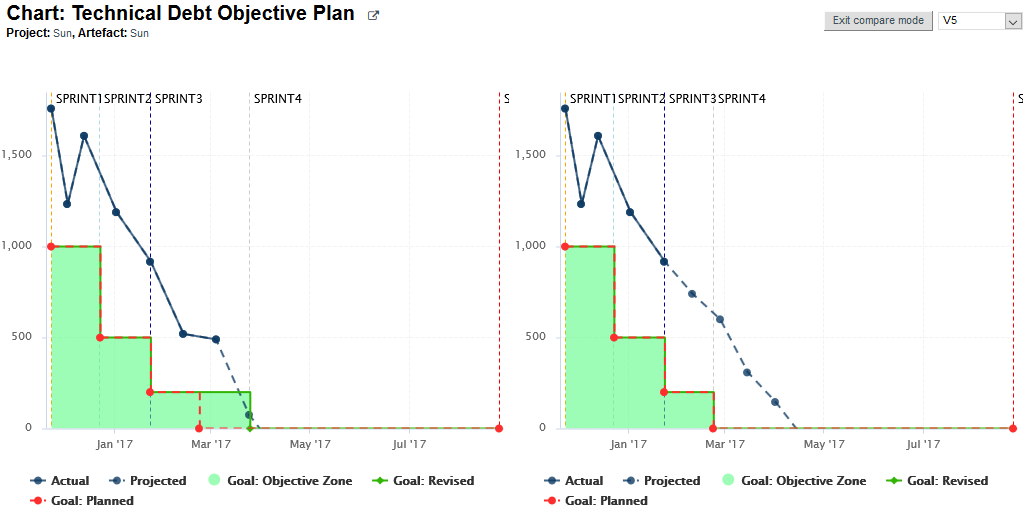

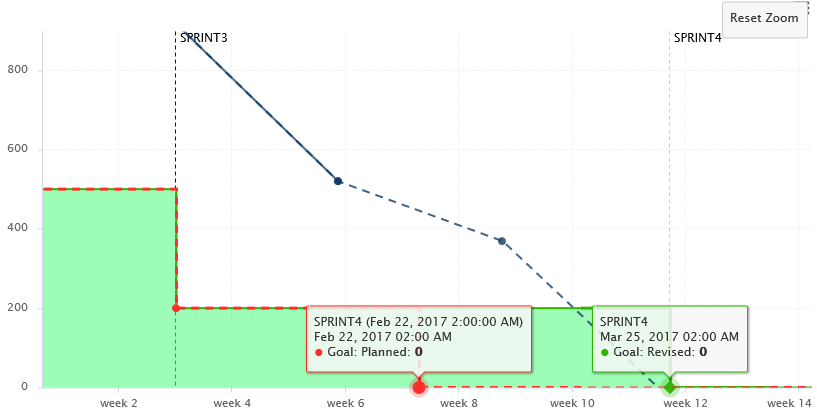

The trend arrows before the version names in the Project Portfolios indicate that the overall rating has recently deteriorated (more information about the quality indicator icons is available in Where Are My Analysis Results?). If you take a closer look at the Technical Debt Trend chart, you will notice that the technical debt is growing for this project.


You can zoom in on the chart by dragging your mouse over the section of the data you are interested in. After zooming in, you can keep the selected zoom level and move around the chart by holding the Shift key and dragging the chart around, or go back to the original view by clicking the Reset Zoom button in the Chart Viewer.

A zoomed-in section of the Technical Debt Trend chart


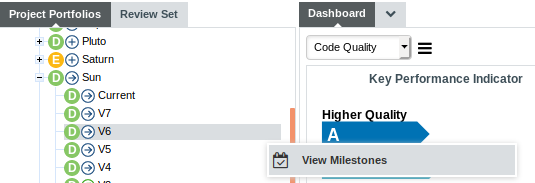

Since the trend accelerated in V6, we will focus on this version. Let’s click V6 in the tree and start our evaluation by looking at the score card, which rates Earth at E.

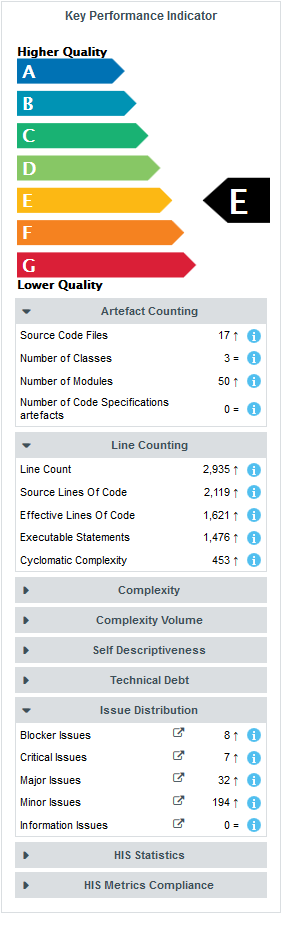

Let’s also look at the Code Quality dashboard, which offers more insight into coding violations. Select Code Quality in the Dashboard drop-down list:

Some values under Artefact Counting, Line Counting and Issue Distribution explain the lower score: the application contains more files and functions, more lines of code, fewer comments and more rule violations.

By now you probably want to find out which components in your project are responsible for increasing the technical debt of the application in this version.

If you want the Artefact Tree to reflect this information, you can change the sort order to show the worst scores first by clicking the sort icon and selecting LEVEL > Worst first to display artefacts from worst-scored to best-scored.

Finding Artefacts Using Filters and Search

This section explains the basics of looking for artefacts using filters and search. For a more automated way to find artefacts that fit a specific category, take a look at Finding Artefacts Using Highlights.

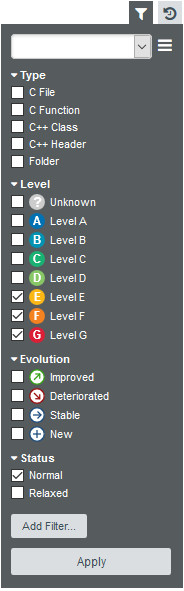

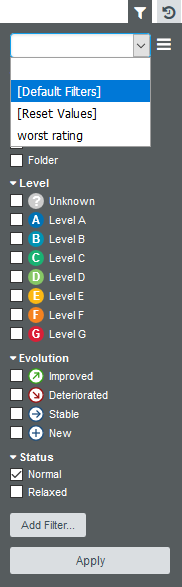

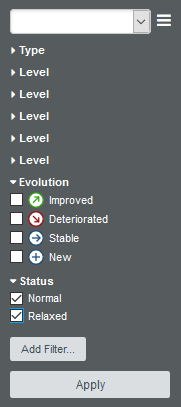

Click the Filter icon in the top-right corner of the Explorer to reveal the Filter Panel. The Filter Panel allows you to set criteria that artefacts need to meet in order to be displayed in the artefact tree or taken into account in charts on the dashboard.

For this example, we want to restrict the visibility of artefacts to those rated E and lower:

Click Apply to apply the changes. The Artefact Tree and the dashboard refresh to show results for the artefacts in the selected levels, as shown below:

The notice Filter active is always displayed above the Artefact Tree and the filter icon turns orange when you are using a filter.

The tree now only shows artefacts rated E and lower, along with their ancestors, which are greyed out if they are not rated E and lower. On the dashboard, the charts that support displaying filtered information are highlighted with an orange filter icon, as in the Function Complexity Map.

Other charts and score card items that cannot be filtered are greyed out.


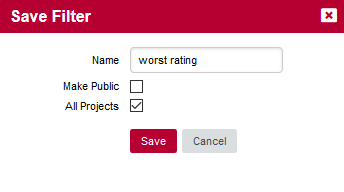

You can save your filter for later use and even share it with other Squore users by clicking the hamburger menu in the Filter Panel and giving your filter a name.

The filter saving options

Saved filters are displayed in the dropdown list at the top of the Filter Panel so you can reuse them later.

The list of saved filters

For more details about advanced filtering functionality in Squore, consult Advanced Filtering.

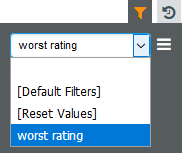

While a filter is active, you can still search for other artefacts by typing a search term in the search box. Try typing ma in the search box above the Artefact Tree, and watch the search results list get populated as you type:

If you select the highlighted search result in the list above, you will open the dashboard for machine.h.

Let’s go back to our filtered tree. The filter singled out three deteriorated files that contain functions in the selected rating range: player.c, machine.c and write.c. Click the artefact player.c to view its dashboard. Note how the score card indicates that the artefact has more critical issues than in the previous analysis.

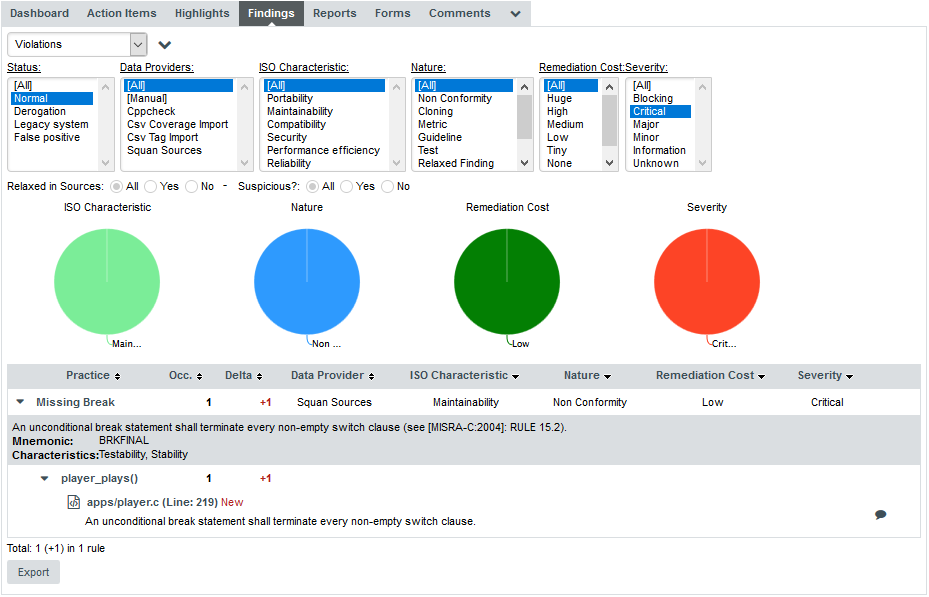

You can click the link in the table to directly view the new critical violation on the Findings tab. In this case, the rule BRKFINAL was broken:

Another convenient way to find out why a project’s quality is deteriorating is to filter on the trend of artefacts.

Select version V6 of Earth again and edit the active filter: Uncheck the boxes for levels E and lower, and select the Deteriorated category in the Evolution section.

When you apply the filter, you should see the artefacts in the tree that have the Deteriorated icon next to their name.

The files you inspected earlier are still here, but there are more deteriorated artefacts that you can start reviewing. Click hi_scores_disp(int), for example, which is rated E.

In order to find out where the degradation took place, you can look at the indicators tree to understand where the decline in quality comes from. Expand all the nodes in the indicators tree to reveal the issues with the artefact:

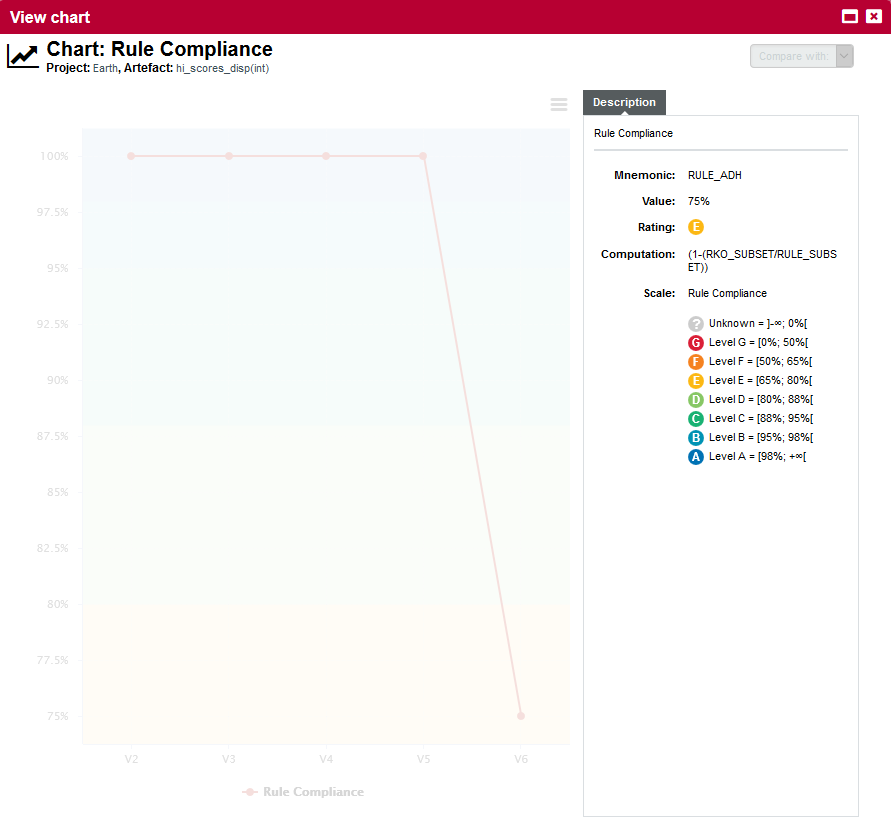

Squore makes it easy to spot the irregularities quickly, like the fact that the Rule Compliance indicator is one of the causes for the worse score in this version. This is probably the first item to review in this function.

By clicking the Rule Compliance indicator in the tree, you can learn more about its history and how it is computed:

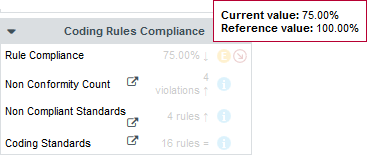

Finally, you can take a look at the Coding Rules Compliance section of the artefact’s score card to confirm the results:

By clicking the link icon, you can directly view the violations for this artefact in the Findings tab.

You can dive further into the analysis results by looking at the information contained in other tabs and assign action items to your team by referring to Managing Your To-Do List With Squore or report your progress as explained in Communicating With Squore.

Advanced Filtering

In the previous section, you learned how to filter artefacts based on their overall rating or trend, but Squore allows you to filter on more than the artefact’s main indicator. This section covers filtering on more than one metric, and how you can also save and share your filters with other Squore users.

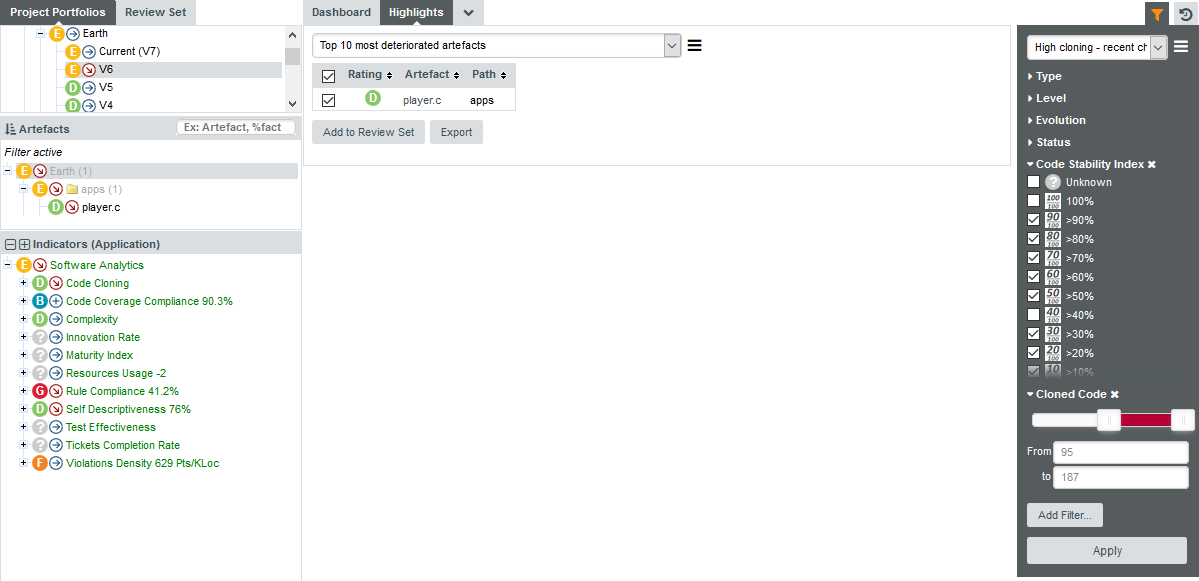

This example explores how to develop a strategy to reduce cloning in your application by finding the artefacts with the highest cloning that have also been modified since the previous analysis.

Start by displaying the dashboard for version V6 of Earth. In the Filter Panel, clear any existing filter by selecting [Default Filters] and clicking Apply to go back to an unfiltered dashboard.

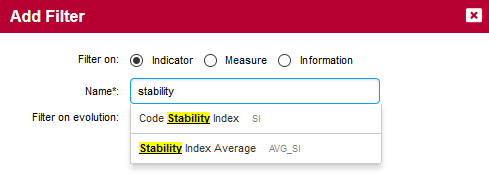

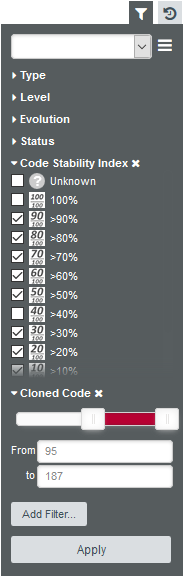

In order to find artefacts that have recently been modified, use Squore’s Stability Index indicator, a feature of the source code parser that computes the amount of changes in a file since the previous analysis. Add a filtering criterion by clicking Add Filter… in the Filter Panel. A popup appears so you can type the name of the indicator to filter on. Type stability and select Code Stability Index as shown below:

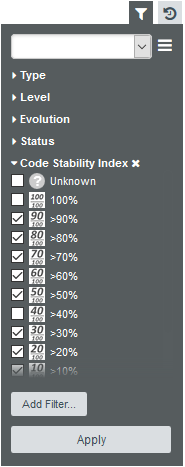

Clicking Add updates the Filter Panel with a section where you can select Code Stability Index levels to filter on. Since 100% means no changes, select all the levels except for 100%:

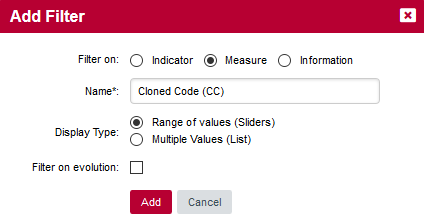

In order to find artefacts with the worst cloning, click Add Filter…, select Measure and find the metric called Cloned Code (CC). Configure this filter as a slider so we can dynamically change the range of cloning to look out for:

Click Add to add your filter. The slider shows you the minimum and maximum values for cloning in this project. Values can be modified either by entering values in the text boxes or by moving the sliders left and right. Move the left slider to about half the range for Code Cloning.

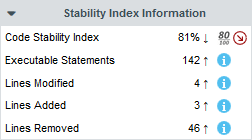

When clicking Apply to view the results of your filter, the artefact player.c is singled out as a recently modified file with high cloning. By switching to the Software Safety dashboard, you can view details about its stability index in the score card.
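The filter built in this section combines two criteria: a Code Stability Index below 100% (the artefact changed) and a Cloned Code value in the upper half of the range shown by the slider. Assuming an artefact must satisfy both criteria to be kept, the selection can be sketched in Python as follows. The ArtefactMetrics fields and the slider_fraction parameter are hypothetical names, the values in any example data are made up, and the Code Stability Index levels are approximated here by a numeric comparison.

```python
from dataclasses import dataclass


@dataclass
class ArtefactMetrics:
    name: str
    stability_index: float  # Code Stability Index in percent; 100 means unchanged
    cloned_code: float      # Cloned Code (CC) measure


def recently_modified_with_cloning(artefacts, slider_fraction=0.5):
    """Keep artefacts that changed (stability < 100) and whose cloning is at
    least slider_fraction of the way from the minimum to the maximum CC value."""
    if not artefacts:
        return []
    low = min(a.cloned_code for a in artefacts)
    high = max(a.cloned_code for a in artefacts)
    threshold = low + slider_fraction * (high - low)  # left slider position
    return [a for a in artefacts
            if a.stability_index < 100 and a.cloned_code >= threshold]
```

Moving the left slider corresponds to changing slider_fraction: a higher value narrows the selection to the artefacts with the most cloning.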

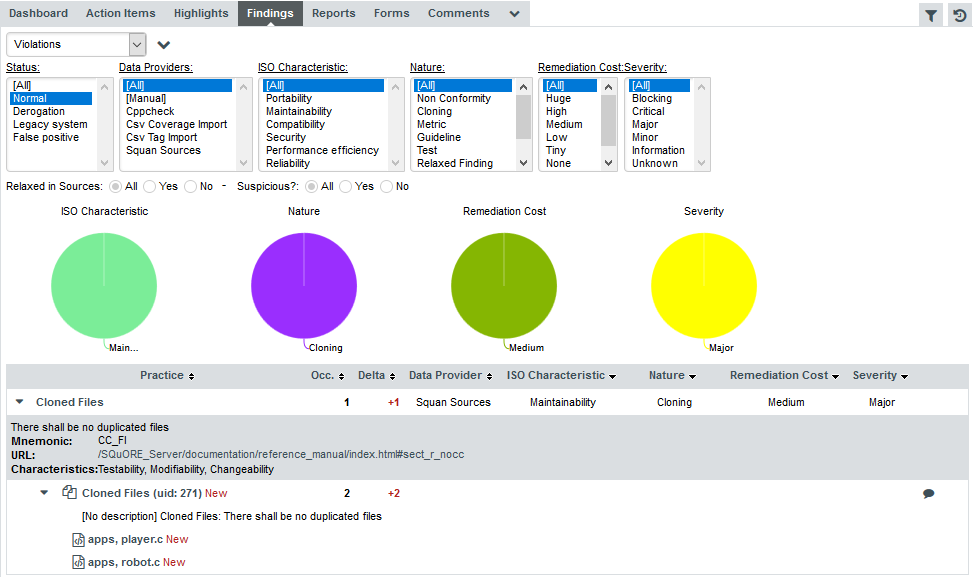

Clicking the Findings tab allows you to confirm that the cloning detected by Squan Sources was indeed introduced since the latest analysis.

Since this is a new finding in a recently modified file, it makes sense to address the issue to avoid creeping technical debt in the project.

If you want to repeat this exploration at a later time, you can save the filter you just created.

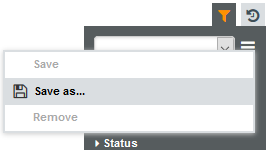

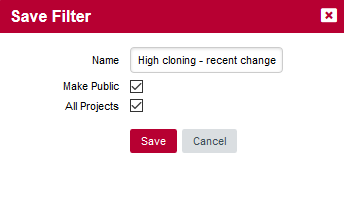

In order to save a filter, click the hamburger menu in the Filter Panel and select Save as…. The Save Filter popup appears and gives you the option to:

-

Name your filter

-

Make it public so other Squore users can apply it and adopt the same cloning exploration practice

-

Make it available across all projects in Squore

After you save a filter it becomes available in the drop-down list of filters in the Filter Panel:


There are more filtering options for metrics and textual information you can explore directly in Squore’s web interface and online help.

Finding Artefacts Using Highlights

In the previous section (Finding Artefacts Using Filters and Search), you became familiar with searching and filtering to find the artefacts that have a negative impact on the overall rating of a project. In this section, you will learn to master the Highlights functionality, which makes it easier to find certain categories of artefacts and allows you to display additional information about each artefact.

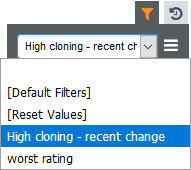

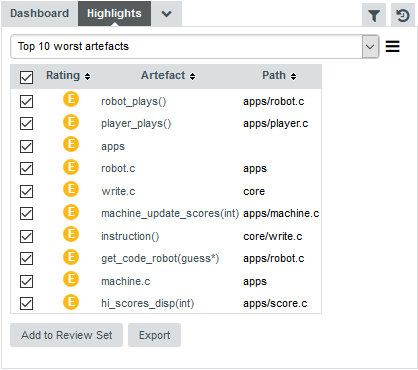

Highlights are flat lists of artefacts ordered in predefined categories for a model.

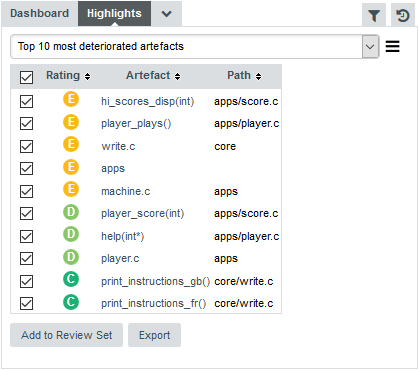

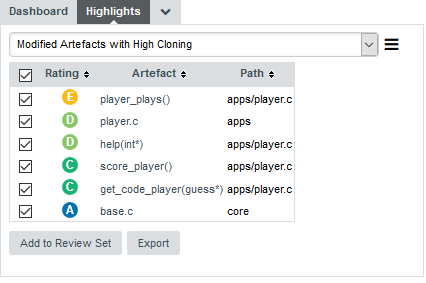

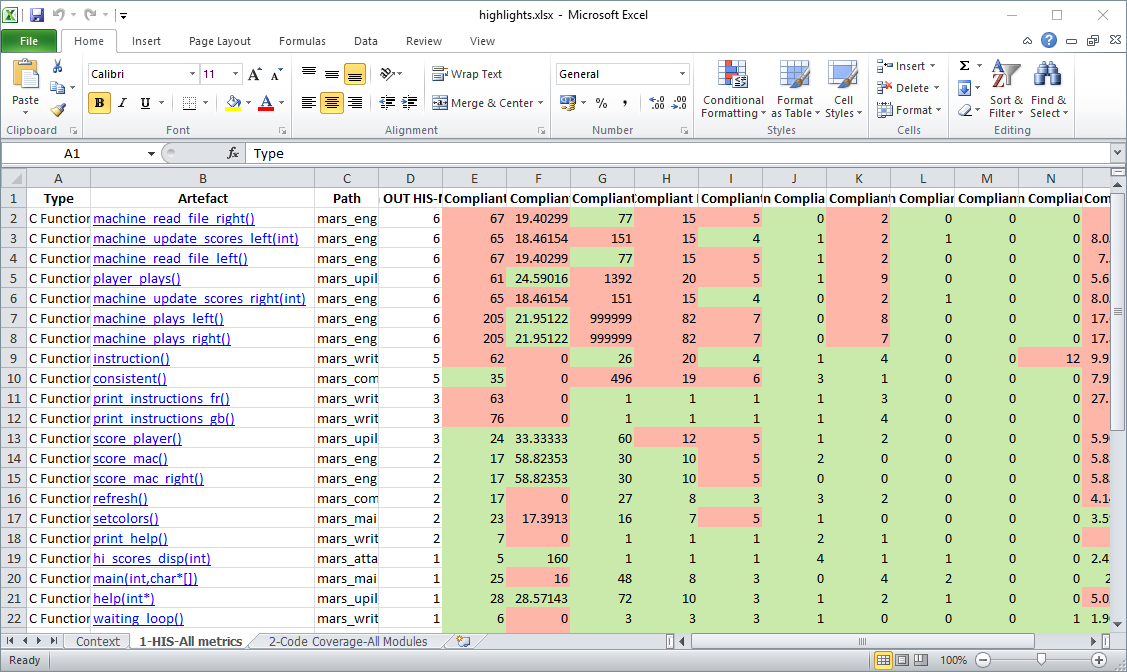

Let’s try to confirm our findings about the worst and most deteriorated artefacts in Earth. Click on the version V6 of Earth and clear the filter. Click the Highlights tab of the Explorer and select the Top 10 worst artefacts highlight. The list appears as shown below:

The list confirms that some of the worst-rated artefacts are the ones you explored before. The Highlights table shows you the artefact rating, name and path, and allows you to go to the artefact’s dashboard directly by clicking the artefact name.

Now you can also find the deteriorated artefact hi_scores_disp(int) that you identified with a filter earlier in Finding Artefacts Using Filters and Search: select the Top 10 most deteriorated artefacts highlight to see the artefact appear in the list of deteriorated artefacts in this version.

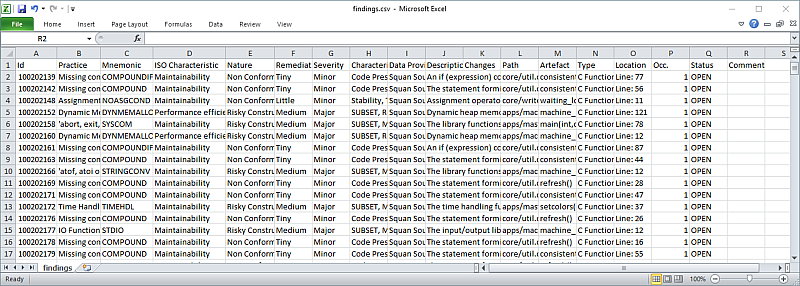

Artefacts are sorted by degradation, i.e. the difference between the value of the main indicator in the previous baseline version compared to the current value. By clicking the Export button, you can export the selected items to a CSV file. If the Export button is greyed out, your licence does not include the option to export data to CSV files.
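The ordering of the Top 10 most deteriorated artefacts highlight can be sketched as a simple ranking. In the Python example below, the function names, the input dictionaries and the CSV layout are hypothetical, and the sketch assumes that a higher main-indicator value means better quality, so that degradation is the previous value minus the current value. The actual export format of Squore may differ.

```python
import csv
import io


def top_deteriorated(current, previous, limit=10):
    """Rank artefacts by degradation = previous value - current value.

    current and previous map artefact names to the main indicator value in the
    current version and in the previous baseline. New artefacts (absent from
    previous) and artefacts that did not deteriorate are skipped.
    """
    rows = [
        (name, previous[name] - value)
        for name, value in current.items()
        if name in previous and previous[name] - value > 0
    ]
    rows.sort(key=lambda row: row[1], reverse=True)  # most deteriorated first
    return rows[:limit]


def to_csv(rows):
    """Serialise (artefact, degradation) rows with a header line."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["artefact", "degradation"])
    writer.writerows(rows)
    return buf.getvalue()
```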


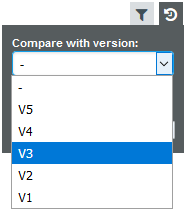

By default, the list of most deteriorated artefacts is compiled based on the previous version of the project. You can compare results with another version of the project by setting your reference version in the Reference Panel, as shown below.

Choosing a project version in the Reference Panel

Changing settings in the Reference Panel impacts deltas and trends in most tabs on the dashboard and is covered in more detail in Comparing versions and hiding old analyses with the Reference Panel.

Creating Highlights

From the Highlights tab, you can also create new highlights. Like your filters, these new highlights can be saved and shared with other Squore users.

Let’s extract more data from the artefacts singled out by your advanced filter in Advanced Filtering, where you found recently modified code with a high cloning ratio. To begin, simply select your saved filter from the Filter Panel and apply it. As you apply and modify the filter, the list of artefacts for the current highlight responds to the filter parameters:


When a filter is applied, you can select a dynamic highlight called Currently Filtered Artefacts. This highlight displays a simple list of the artefacts that match the filter, which you can add to your Review Set or export to a CSV file.

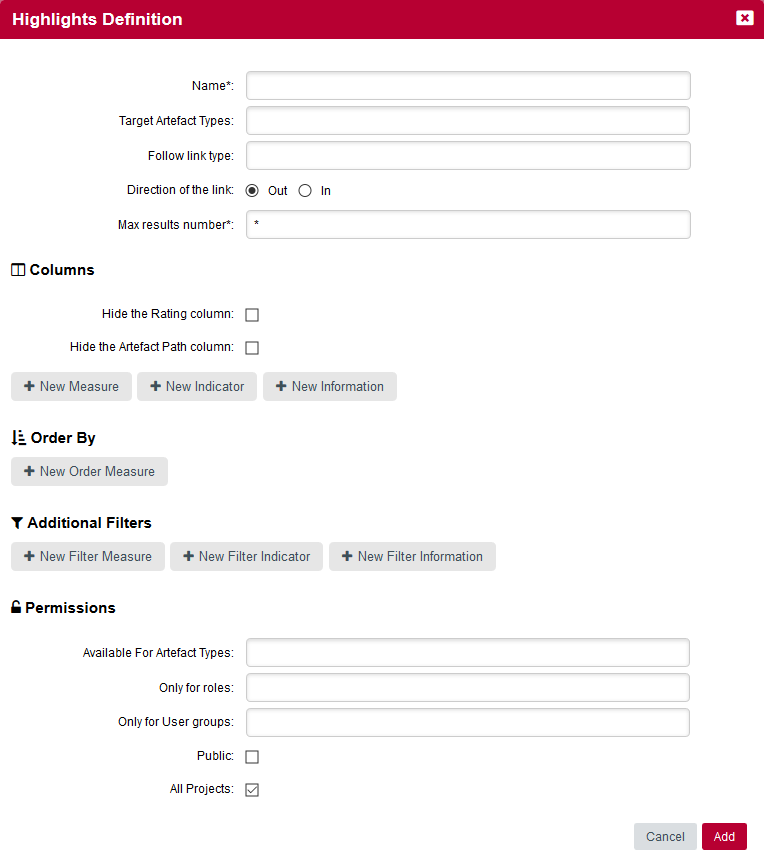

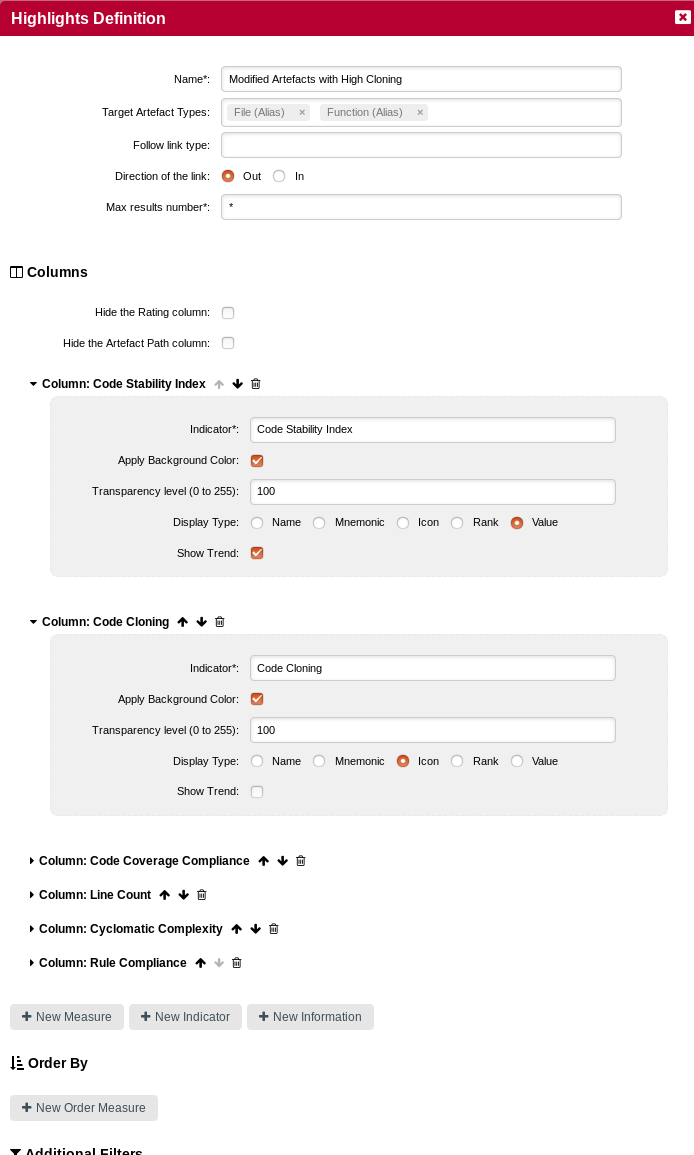

Let’s now build a highlight from scratch that uses the same filters and displays more information about the artefacts. Click the hamburger menu next to the list of highlights and select Add Definition. The popup that opens lets you configure the data columns to display for each artefact, as well as apply filters the same way you did in the Filter Panel:

The values in the popup above reproduce conditions of the filter you used earlier:

-

It applies to file and function artefacts (Target Artefact Types)

-

It displays all results with no limit (Max results number)

-

It defines two filter conditions

-

The measure Code Stability Index is lower than 100% (i.e.: the artefact was modified)

-

The measure Cloned Code is higher than 0 (i.e.: there is some cloning)

-

You can also hide the path and rating columns from your highlight by selecting the corresponding checkboxes in the "Columns" section.
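A highlight definition bundles target artefact types, a result limit, filter conditions and extra columns. The Python data structure below is a hypothetical model of the definition described above, not Squore's internal representation; the type names FILE and FUNCTION and the metric keys stability_index and cloned_code are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class HighlightDefinition:
    """Hypothetical model of a highlight definition (not Squore's format)."""
    target_types: set[str]                             # Target Artefact Types
    max_results: Optional[int] = None                  # Max results number; None = no limit
    conditions: list[Callable[[dict], bool]] = field(default_factory=list)
    columns: list[str] = field(default_factory=list)   # extra data columns

    def evaluate(self, artefacts: list[dict]) -> list[dict]:
        rows = []
        for art in artefacts:
            # Keep artefacts of a targeted type that satisfy every condition.
            if art["type"] in self.target_types and all(c(art) for c in self.conditions):
                rows.append({"name": art["name"], **{col: art.get(col) for col in self.columns}})
        return rows if self.max_results is None else rows[: self.max_results]


# The conditions from this section: modified files and functions with some cloning.
modified_with_cloning = HighlightDefinition(
    target_types={"FILE", "FUNCTION"},
    conditions=[
        lambda a: a["stability_index"] < 100,  # Code Stability Index below 100%
        lambda a: a["cloned_code"] > 0,        # Cloned Code above 0
    ],
    columns=["cloned_code", "stability_index"],
)
```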

Click Add to create the highlight and view the results. You can also clear the current filter since the highlight defines the same conditions.

In order to add information about the artefacts displayed in the list, click the hamburger menu and select Edit…. The Columns section of the popup lets you select additional data to display about each artefact. It makes sense to display the cloning ratio and stability details, as well as other indicators and metrics that will help you decide if modifying the code is risky or safe.

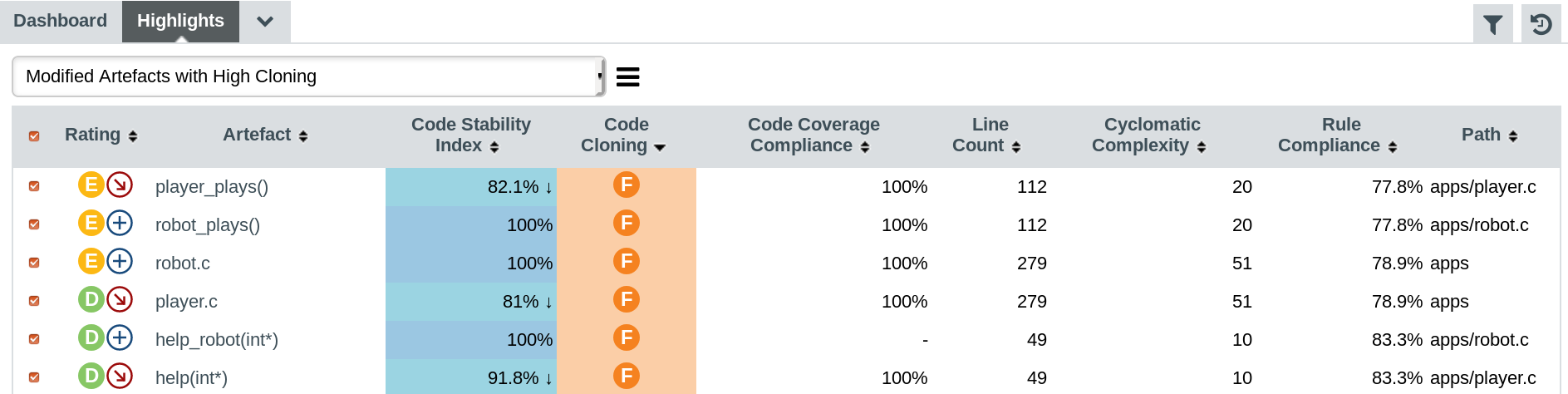

When you click Update, the highlight now shows all the requested details for the artefacts:


When you save a highlight definition, you can decide to make it available for all projects that use the same analysis model, and make it public so other Squore users can use it.

Comparing versions and hiding old analyses with the Reference Panel

A project with a long history can make it difficult to analyse results. Squore provides two ways to make dashboards clearer by:

-

Picking a reference version to compare results with

-

Hiding old versions completely from the Explorer

Both filtering options are available in the Reference Panel, which is always accessible from the Explorer by clicking the Reference icon.

Changing the settings in the Reference Panel impacts the following items in the Explorer:

-

Trends in the Dashboard and Highlights tabs are computed based on the version you specified instead of the previous version

-

Charts that display delta values are updated to compute deltas against the reference version instead of the previous version

-

Temporal charts are updated to hide versions whose version date is older than the reference date

-

Deltas on the Findings tab are computed based on the specified reference version instead of the previous version

-

Versions whose version date is older than the reference date are hidden from the Project Portfolios.
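The two Reference Panel settings affect the display in different ways: the reference version changes what deltas are computed against, while the reference date hides older versions. The Python sketch below models both effects on a single indicator history. It is a conceptual illustration only; apply_reference and the (version, date, value) tuples are assumptions, not Squore's API.

```python
from datetime import date
from typing import Optional

Version = tuple[str, date, float]  # (version name, version date, indicator value)


def apply_reference(history: list[Version],
                    reference_version: Optional[str] = None,
                    reference_date: Optional[date] = None):
    """Return (visible version names, delta of the latest version).

    history is ordered from oldest to newest. Versions dated before
    reference_date are hidden; the delta is computed against reference_version
    if one is set, otherwise against the previous version.
    """
    visible = [v for v in history if reference_date is None or v[1] >= reference_date]
    latest = history[-1]
    if reference_version is not None:
        ref = next(v for v in history if v[0] == reference_version)
    elif len(history) > 1:
        ref = history[-2]
    else:
        ref = None  # a single version has nothing to compare with
    delta = None if ref is None else latest[2] - ref[2]
    return [v[0] for v in visible], delta
```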


The Reference icon turns orange when the dashboard is impacted by your settings in the Reference Panel.

How Do I Find and Keep Track of Artefacts?

For some projects, you may want to collect artefacts so you can review them later. Squore enables you to build a Review Set, a list containing artefacts that you want to keep track of. Let’s log in as the demo user to review all the new artefacts added to a project, in order to evaluate their level of quality.

Isolating the new artefacts can be done in three steps:

-

Log in using the demo user (demo/demo).

-

Click on version V6 of Earth in the Project Portfolios to display the dashboard for the last version of the project.

-

Create a filter to display only items in the Evolution column with the status New and apply your changes

You should see the following artefacts in the Artefact Tree:

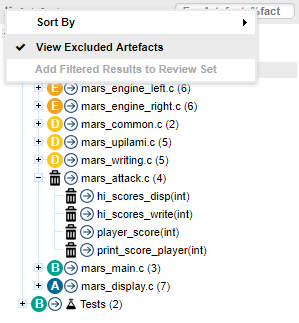

Squore makes it easy for you to keep track of these artefacts. Click on the icon above the Artefact Tree and select Add Filtered Results to Review Set.

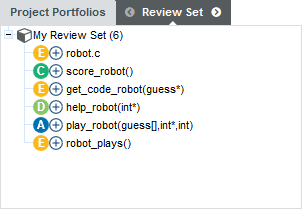

You can now clear your filter: the artefacts you want to review are stored in your Review Set. Click the Review Set tab in the left pane of the Explorer to find the items you just saved.

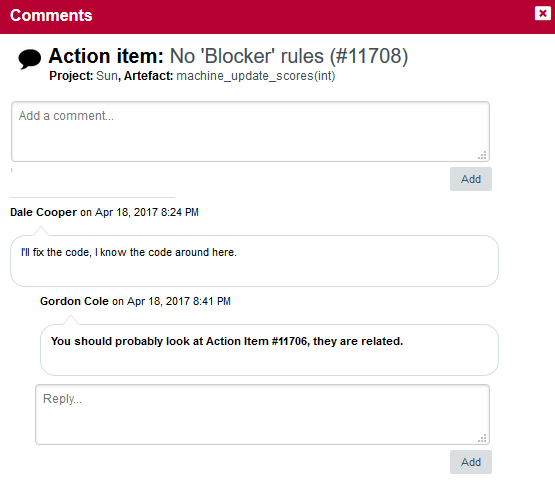

You can also send the artefact currently selected in the Artefact Tree to the Review Set at any time: display the context menu for the artefact and click Add to Review Set. Clicking an item in the Review Set pane has the same effect as clicking it in the Artefact Tree: the dashboard refreshes to show the information for that artefact. You can use the left and right arrows in the Review Set pane to go to the previous and next artefact in the list.
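The Review Set behaves like an ordered list with a cursor. The following Python sketch models that behaviour under stated assumptions: the `ReviewSet` class and its methods are hypothetical, adding the same artefact twice is assumed to keep a single entry, and navigation is assumed to stop (rather than wrap around) at either end of the list.

```python
from __future__ import annotations


class ReviewSet:
    """Hypothetical model of a Review Set: an ordered list of artefacts
    with a cursor for previous/next navigation. Not a Squore API."""

    def __init__(self) -> None:
        self._items: list[str] = []
        self._pos = -1  # no artefact selected yet

    def add(self, artefact: str) -> None:
        # Assumption: adding an artefact twice keeps a single entry.
        if artefact not in self._items:
            self._items.append(artefact)

    def add_all(self, artefacts) -> None:
        # Rough equivalent of "Add Filtered Results to Review Set".
        for artefact in artefacts:
            self.add(artefact)

    def select(self, artefact: str) -> str:
        # Clicking an item moves the cursor to it.
        self._pos = self._items.index(artefact)
        return artefact

    def current(self) -> str | None:
        return self._items[self._pos] if self._pos >= 0 else None

    def next(self) -> str | None:
        # Assumption: navigation stops at the end of the list.
        if self._pos < len(self._items) - 1:
            self._pos += 1
        return self.current()

    def previous(self) -> str | None:
        # Assumption: navigation stops at the start of the list.
        if self._pos > 0:
            self._pos -= 1
        return self.current()
```

For instance, adding only the artefacts whose Evolution status is New and then calling `next()` repeatedly steps through them in the order in which they were added.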

If you want to know more about what actions you can take after reviewing artefacts, refer to Managing Your To-Do List With Squore and Communicating With Squore.

How Can I Understand and Enhance My Model?

Squore provides tools to understand, verify and enhance your model under the Models menu.

-

The Viewer, a graphical representation of all the analysis models on Squore Server

-

The Validator, a debug tool for model writers

-

The Dashboard Editor, which allows customising the dashboards that all users will see

-

The Analysis Model Editor, a graphical ruleset editor

Users whose profile grants the "View Models" permission have access to the first two tools.

Users whose profile grants the "Modify Models" permission have access to the last two tools.

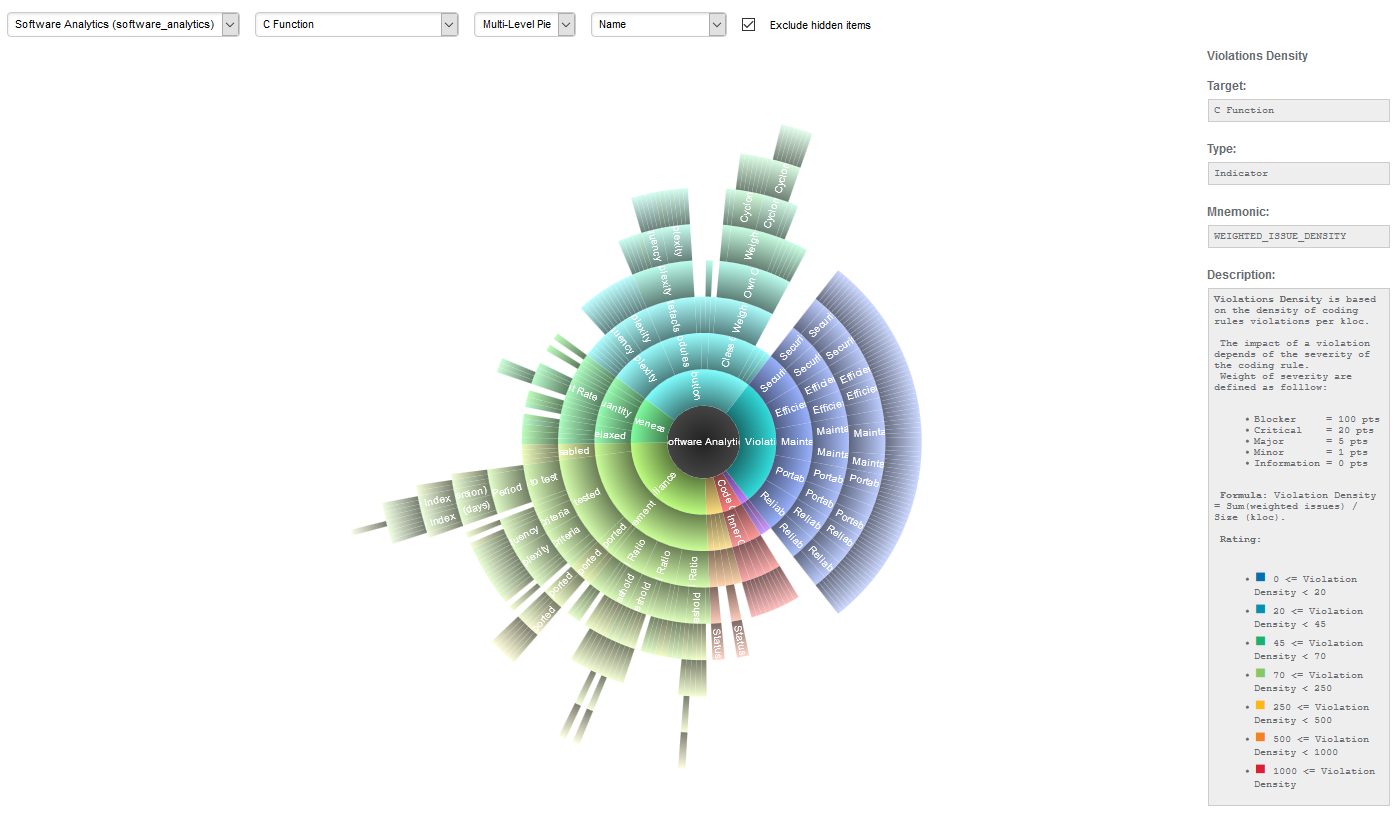

Viewer

To use the Viewer:

-

Click Models > Viewer in the toolbar.

-

Select the analysis model you want to browse.

-

Select the artefact level you want to browse.

-

Choose your preferred graphical representation between Space-Tree

-

Select whether measures are displayed using their full name or their mnemonic

Once you have selected these parameters, the page refreshes to show the top-level indicators in the model. You can click each indicator to reveal its sub-indicators and their characteristics, and drag the tree left and right to reveal all sub-levels if necessary. For each selected indicator, Squore displays the following information:

-

Target is the target artefact type for the selected item

-

Type is the type of the selected item

-

Mnemonic is the shortcode for the selected item

-

Description is the description of the selected item

-

Data Provider is the Data Provider responsible for computing the selected item

-

MeasureId is the measure ID of the selected item

-

Computation is the formula used to compute the value of the selected item

-

ScaleId is the ID of the scale associated with the selected item

-

Levels is the list of levels available for the selected item and their ranges

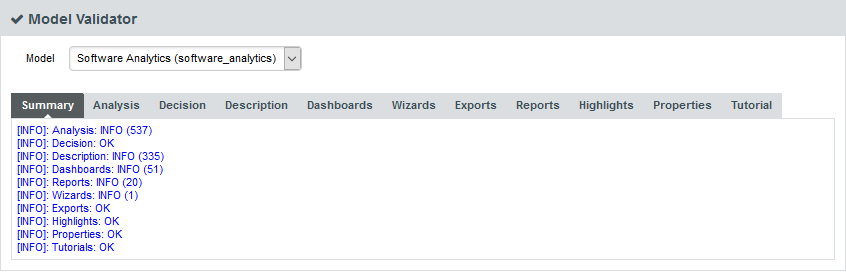

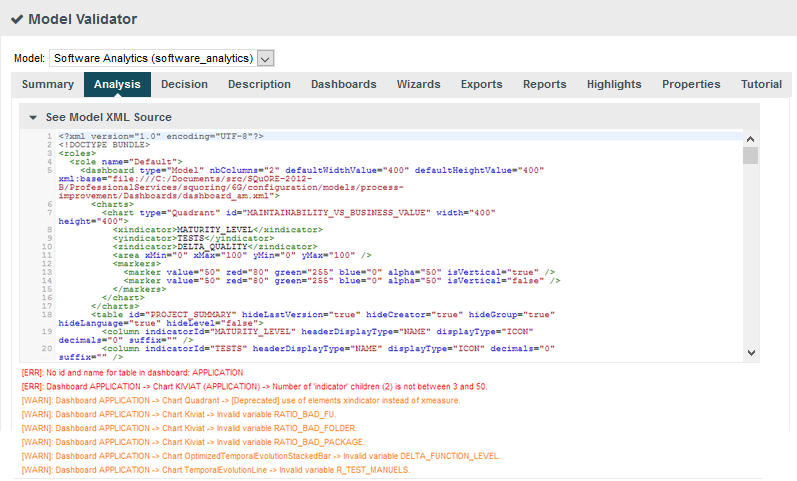

Validator

If your work involves adjusting the model’s metrics or dashboard, you can use the Validator to verify its integrity as you make changes. Click Models > Validator to display the diagnostics organised by category, as shown below:

The Summary tab displays a summary of all the diagnostics run for each category. Clicking any of the other tabs shows more details about potential problems found in your model. You can also display the complete XML of the model to understand the errors reported. To search the XML, press Ctrl+F to bring up the search dialogue, then Ctrl+G to find the next occurrence of the search term:

Your Squore administrator can help you get more information about model development. You can also refer to the Squore Configuration Guide for a complete reference.

Dashboard Editor

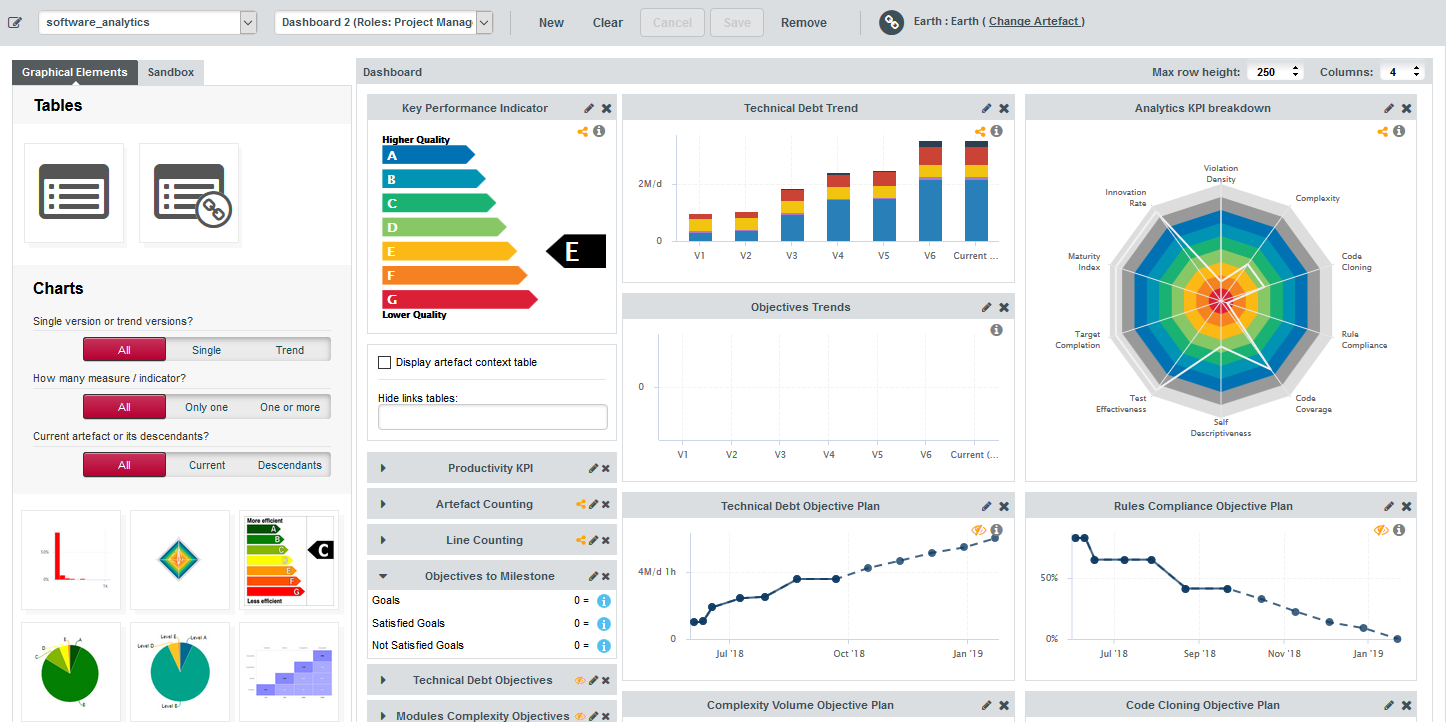

The Dashboard Editor is a graphical editor for the dashboards of a particular model. Dashboards consist of a key performance indicator, a list of tables and one or more columns of predefined charts. With the Dashboard Editor, you can rearrange the information shown on the dashboard for all users, or create a completely new dashboard for a new role or new group in your project.

In order to use the Dashboard Editor:

-

Click Models > Dashboard Editor

-

Select a model and load an existing dashboard

The current dashboard skeleton is loaded in the editor, as shown below:

The right panel displays your current dashboard, where every chart can be resized or edited, while the left panel allows you to add new charts or tables by dragging them to your current dashboard. When you are satisfied with your changes, you can save your modifications. You can also create a new dashboard, using an existing one as a basis for the new one, or start from a blank canvas.

Your changes are saved in Squore’s workspace folder on the server and are made available to all other Squore users when you click Save.

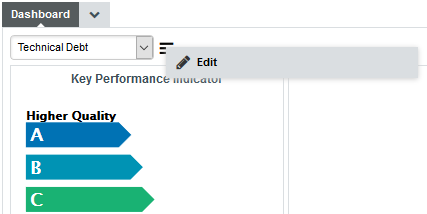

|

You can edit a dashboard directly from the Explorer by clicking the Edit Dashboard… entry in the Explorer Settings.

The Edit Dashboard… menu loads the current dashboard in the editor so you can change it.

|

More detailed explanations about the Dashboard Editor can be found in Squore’s Online Help.

Analysis Model Editor

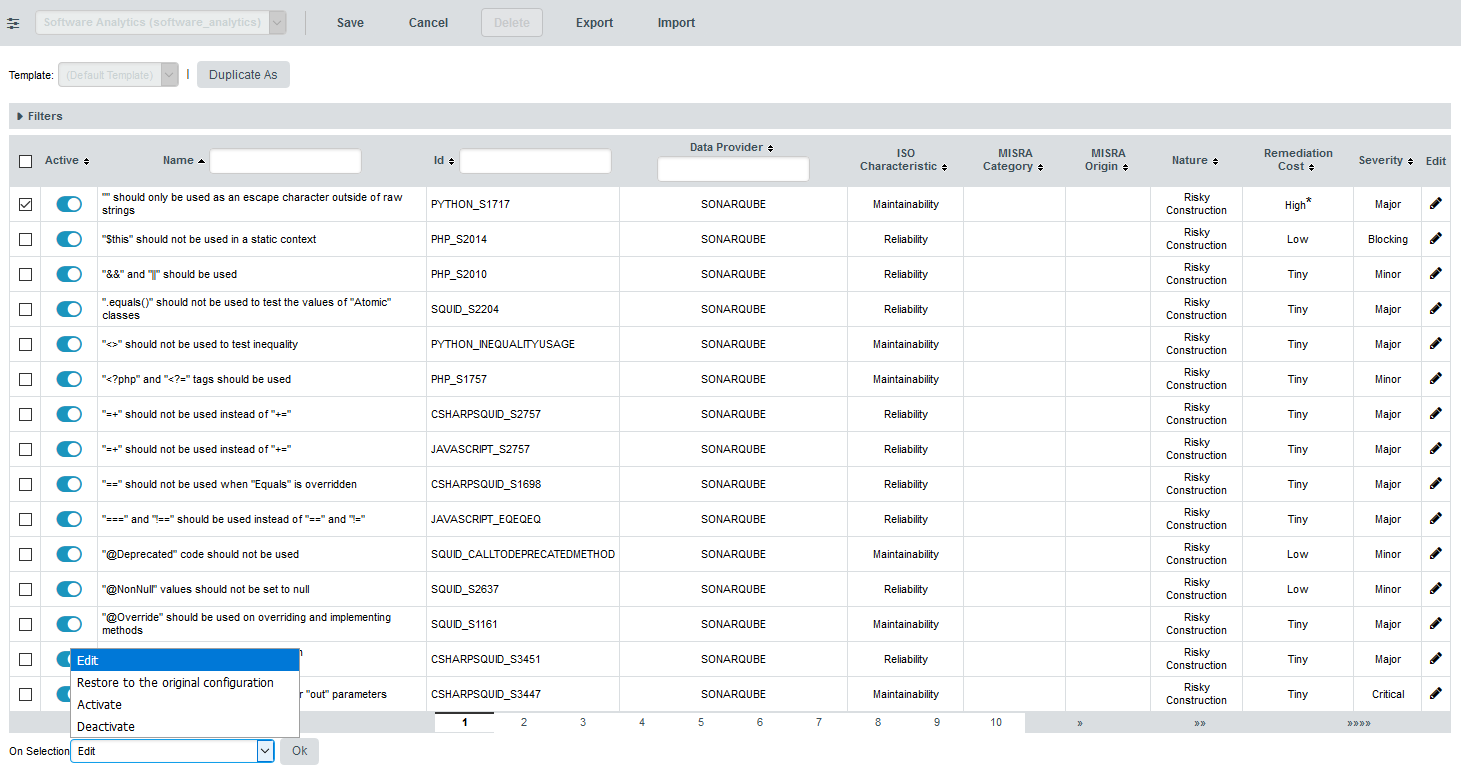

The Analysis Model Editor is a graphical ruleset editor where you can turn rules on and off, or adjust the categories associated with each rule in your model.

It also allows you to save ruleset templates so that you can use a different set of rules for each project you create.

In order to use the Analysis Model Editor:

-

Click Models > Analysis Model Editor

-

Select a model to load its ruleset

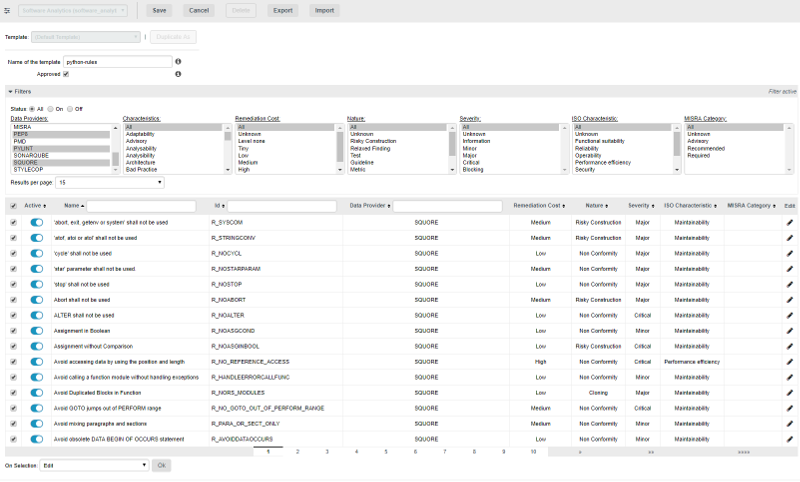

The entire ruleset for the current model is displayed in table form, as shown below:

Use the filter panel and the table headers to find the rule you want to modify. You can activate or deactivate a rule by clicking the on/off switch in the table. If you want to make more modifications, click the Edit icon for this rule.

You can edit multiple rules at once by checking several rules and using the actions list at the bottom of the page. When you save your changes, the configuration is reloaded and every new analysis for this model will use the new settings.

|

Changes made in the web interface are saved in a workspace folder on the server. |

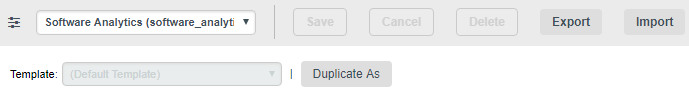

Using Ruleset Templates

Using the Analysis Model Editor, you can set up various ruleset templates to modify or ignore rules that do not apply to certain departments or project teams within your organisation.

Users with model editing privileges (see the Modify Models permission in User Profiles) can define templates right from the Analysis Model Editor. Project managers can decide to modify existing templates or create new ones from the project wizard. To ensure that projects are analysed using company-wide standards, templates can be marked as approved, which prevents them from being modified by other users.

In order to create a ruleset template:

-

Click on Models > Analysis Model Editor

-

Select an analysis model and locate the Template selection list above the filter tools. For a model where no templates exist yet, only the Duplicate As button is available, so you can create a new template from the default one.

-

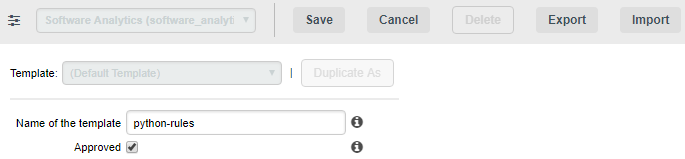

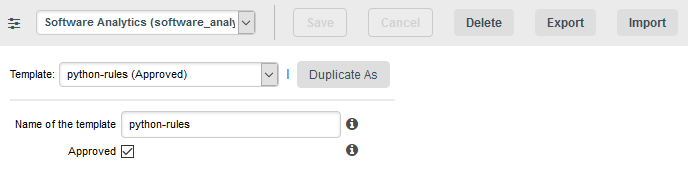

Click Duplicate As to create a new template and enter edit mode. In this example, we are creating a ruleset that contains only rules that apply to the Python programming language. By checking the Approved box, we are defining this ruleset template as read-only for project managers.

-

Activate, deactivate or modify any rule you want for the template. In this example, we use the filter tools to select all Data Providers, turn off all the rules, and then select the Python-related Data Providers to activate all their rules.

-

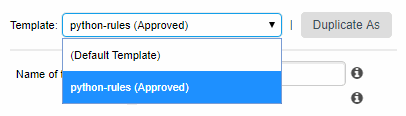

When you are satisfied with your rule selection, click Save to save the template. It now appears in the template selection list. You can still modify it as needed, or click Duplicate As to start creating a new template based on your first template.

Project managers can start using your template immediately by selecting it in the Ruleset Edition page of the project wizard, which is displayed after the Data Provider selection screen:

|

Templates can also be applied to projects from the command line using the --rulesetTemplate parameter: |

Managing Ruleset Templates

Export and Import

Aside from creating and deleting ruleset templates, the Analysis Model Editor also allows you to export them to XML and import them again. This is useful if you want to copy your templates to another Squore Server or back them up before resetting your server.

The exported XML file contains the entire ruleset.

Editing the XML template file manually before importing it as a new template is not recommended. However, a manual edit can be useful to set the disableOtherRules attribute, which determines what happens to the rules that are not contained in the XML:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<UpdateRules disableOtherRules="false">
  <!--This file is auto-generated, please don't modify.-->
  <UpdateRule measureId="R_MISRA_SAME_DEFPARAMS" disabled="false" categories="SCALE_SEVERITY.MINOR;SCALE_NATURE.NON_CONFORMITY;CHARACTERISTIC.PORTABILITY;SCALE_REMEDIATION.LOW" />
  ...
</UpdateRules>
```

Setting disableOtherRules to true deactivates all the other rules in the model that are not specified in the template.

If the attribute is not specified, its value defaults to false.
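The effect of disableOtherRules can be illustrated with a short Python sketch that applies an UpdateRules document to a ruleset represented as a `{measureId: enabled}` mapping. The `apply_ruleset_template` helper is hypothetical and is not how Squore imports templates internally. It also assumes that a missing `disabled` attribute means the rule is enabled, and it ignores the `categories` attribute.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET


def apply_ruleset_template(xml_text: str, ruleset: dict[str, bool]) -> dict[str, bool]:
    """Apply an UpdateRules document to a {measureId: enabled} mapping.

    Hypothetical helper for illustration; the `categories` attribute is ignored.
    """
    root = ET.fromstring(xml_text)
    # A missing disableOtherRules attribute behaves like "false".
    disable_others = root.get("disableOtherRules", "false").lower() == "true"
    # Rules listed in the template: enabled unless disabled="true" (assumption).
    listed = {
        rule.get("measureId"): rule.get("disabled", "false").lower() != "true"
        for rule in root.iter("UpdateRule")
    }
    result = {}
    for measure_id, enabled in ruleset.items():
        if measure_id in listed:
            result[measure_id] = listed[measure_id]  # state taken from the template
        elif disable_others:
            result[measure_id] = False              # not in the XML: turned off
        else:
            result[measure_id] = enabled            # not in the XML: left unchanged
    return result
```

With disableOtherRules="true", a template listing a single rule leaves only that rule active; with "false" (or no attribute), every unlisted rule keeps its current state.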

Handling Model Upgrades

Your existing templates will be retained when you upgrade your model to add new rules or upgrade to a new version of Squore:

-

Existing templates will have all the new rules as disabled (off) by default

-

The default template will have all the new rules as enabled (on) by default

-

Projects analysed using project-specific templates (i.e. not using a saved template in the Analysis Model Editor) will have all the new rules as enabled (on) by default
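The three upgrade rules above can be summarised as a lookup of the default state given to new rules. In this minimal Python sketch, the labels `saved_template`, `default_template` and `project_specific` and the `upgrade_ruleset` function are hypothetical names for the three cases; the sketch also assumes that rules which already exist keep their current state.

```python
from __future__ import annotations

# Hypothetical labels for the three cases described above.
DEFAULT_STATE_FOR_NEW_RULES = {
    "saved_template": False,    # existing templates: new rules off
    "default_template": True,   # default template: new rules on
    "project_specific": True,   # project-specific rulesets: new rules on
}


def upgrade_ruleset(rules: dict[str, bool], new_rule_ids: list[str], kind: str) -> dict[str, bool]:
    """Return a copy of `rules` ({rule ID: enabled}) extended with the new rules."""
    enabled = DEFAULT_STATE_FOR_NEW_RULES[kind]  # raises KeyError for an unknown kind
    upgraded = dict(rules)
    for rule_id in new_rule_ids:
        # Existing rules keep their state; only new rules get the default.
        upgraded.setdefault(rule_id, enabled)
    return upgraded
```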

Reviewing Multiple Projects

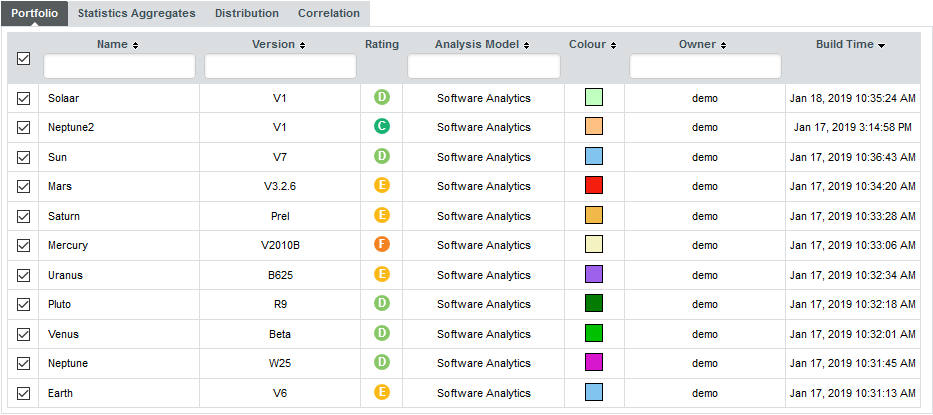

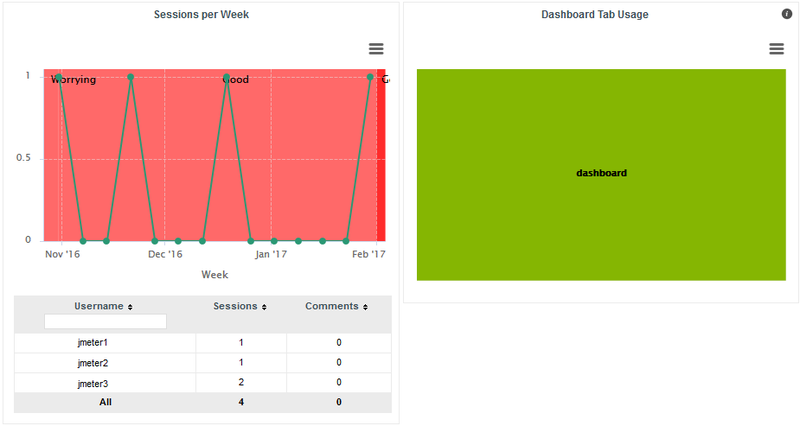

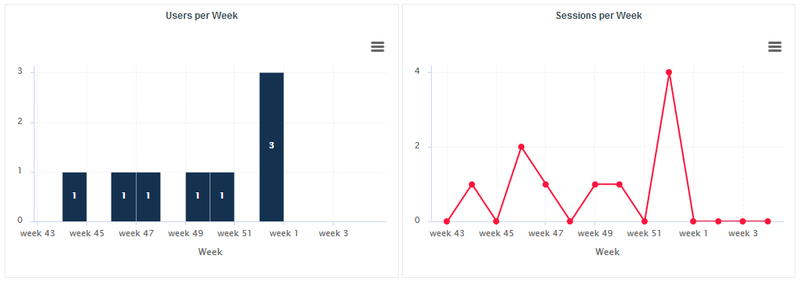

Project Managers may be interested in monitoring several projects as a whole. Squore provides a special dashboard view that combines information about several projects into a Model/Group Dashboard, which can help you prioritise projects according to their current status.

In order to view the Model/Group Dashboard:

-

Log into Squore with the demo user.

-

Click the model name "Software Analytics" in the Project Portfolios.

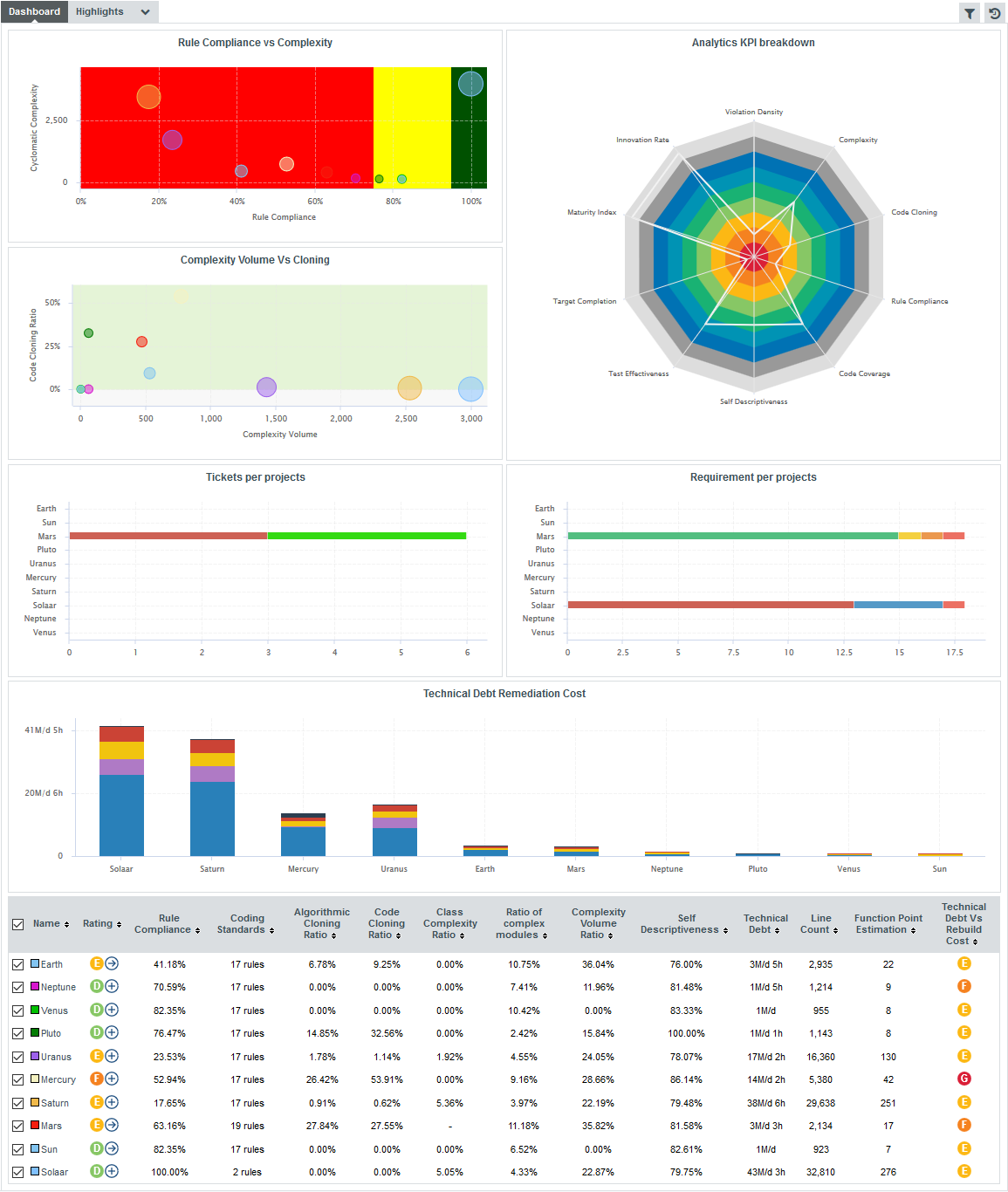

The dashboard refreshes to show the combined information for all projects analysed with this model, using charts and a summary table of the main indicators, the rating and the trend of each project.

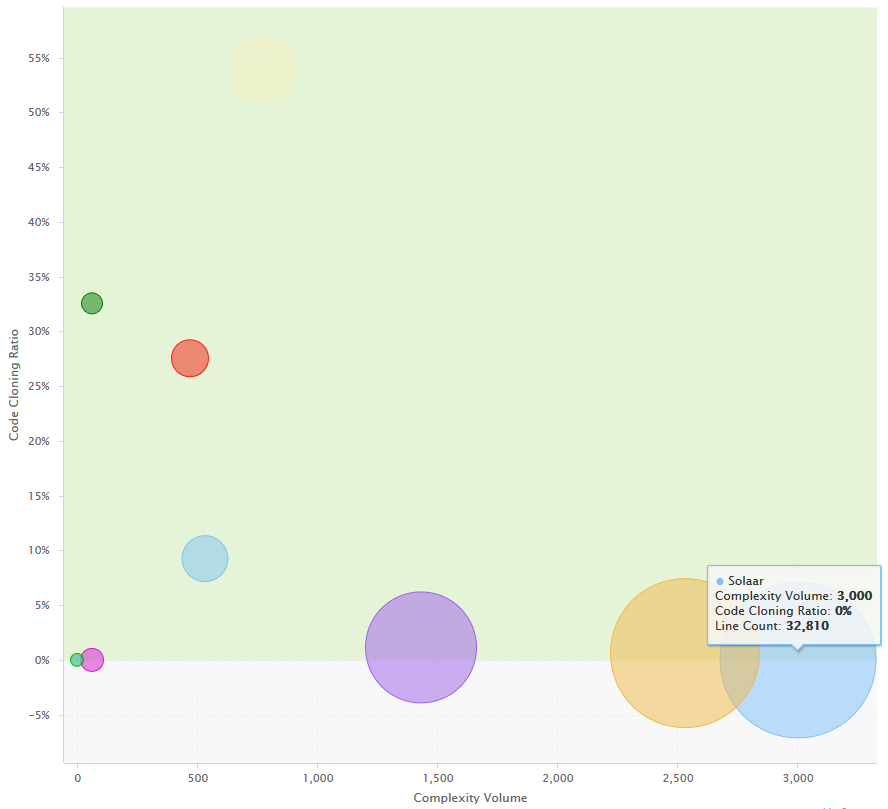

In the quadrants, each project is represented as a bubble. Two indicators define the horizontal and vertical position of the bubble along the axes, while a third indicator defines the bubble size. Let’s see how you should prioritise maintenance work on the sample projects in your portfolio. Click the Complexity Volume Vs Cloning quadrant to view the full version:

In this chart, projects with a high code cloning ratio appear higher, while more complex projects appear further to the right. The size of each bubble indicates the size of the project in source lines of code. Therefore, it may be easier to improve the quality of a project with more cloning but less complexity, like Pluto (dark green), than a project with less cloning but more complexity, like Mars (in red). As a project manager, you know that as a general rule you need to focus on moving projects towards the bottom-left corner of the chart for a healthy portfolio of projects.

Below the quadrants, Squore displays tables with the values used in the charts so you can refine the information read in the charts. All the information shown in the analysis model dashboard can be configured by a Squore administrator. Refer to the Squore Configuration Guide for more information.

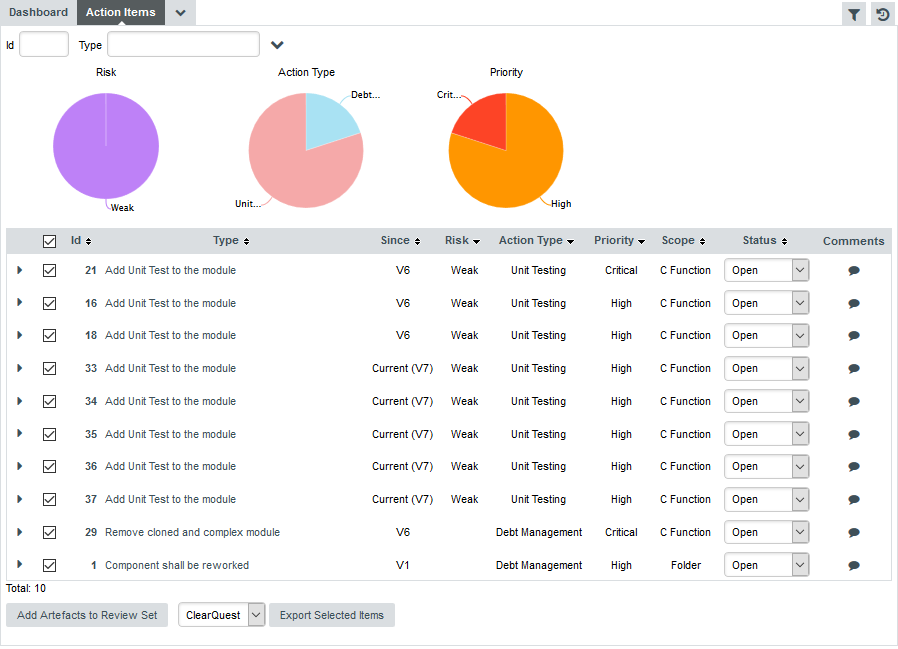

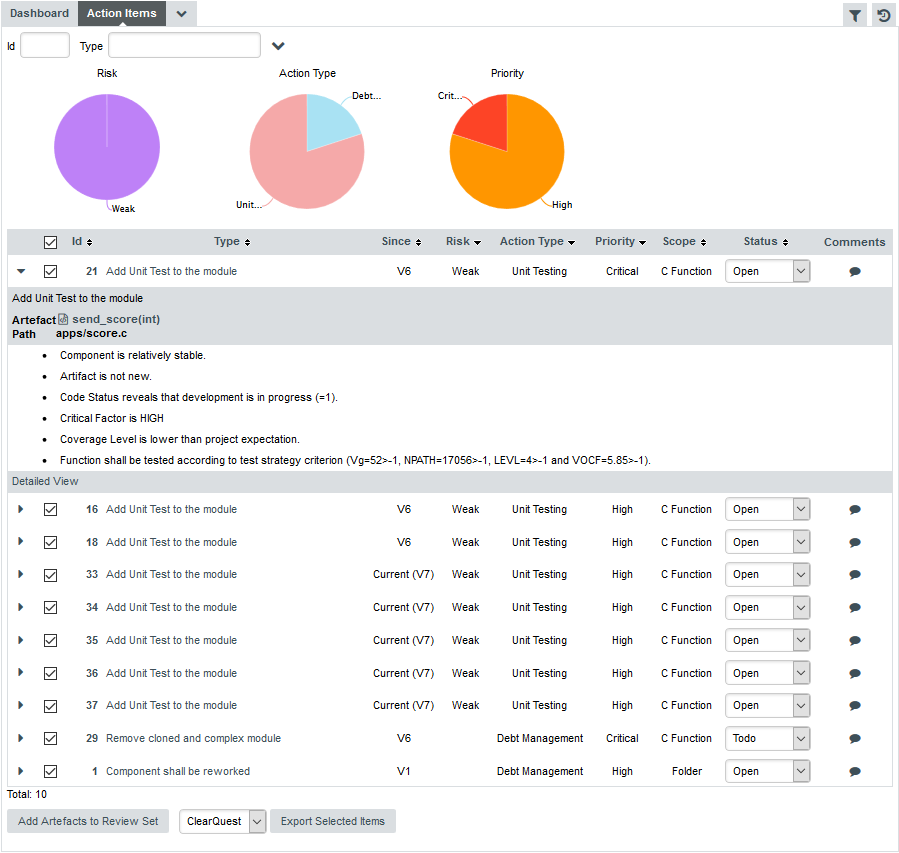

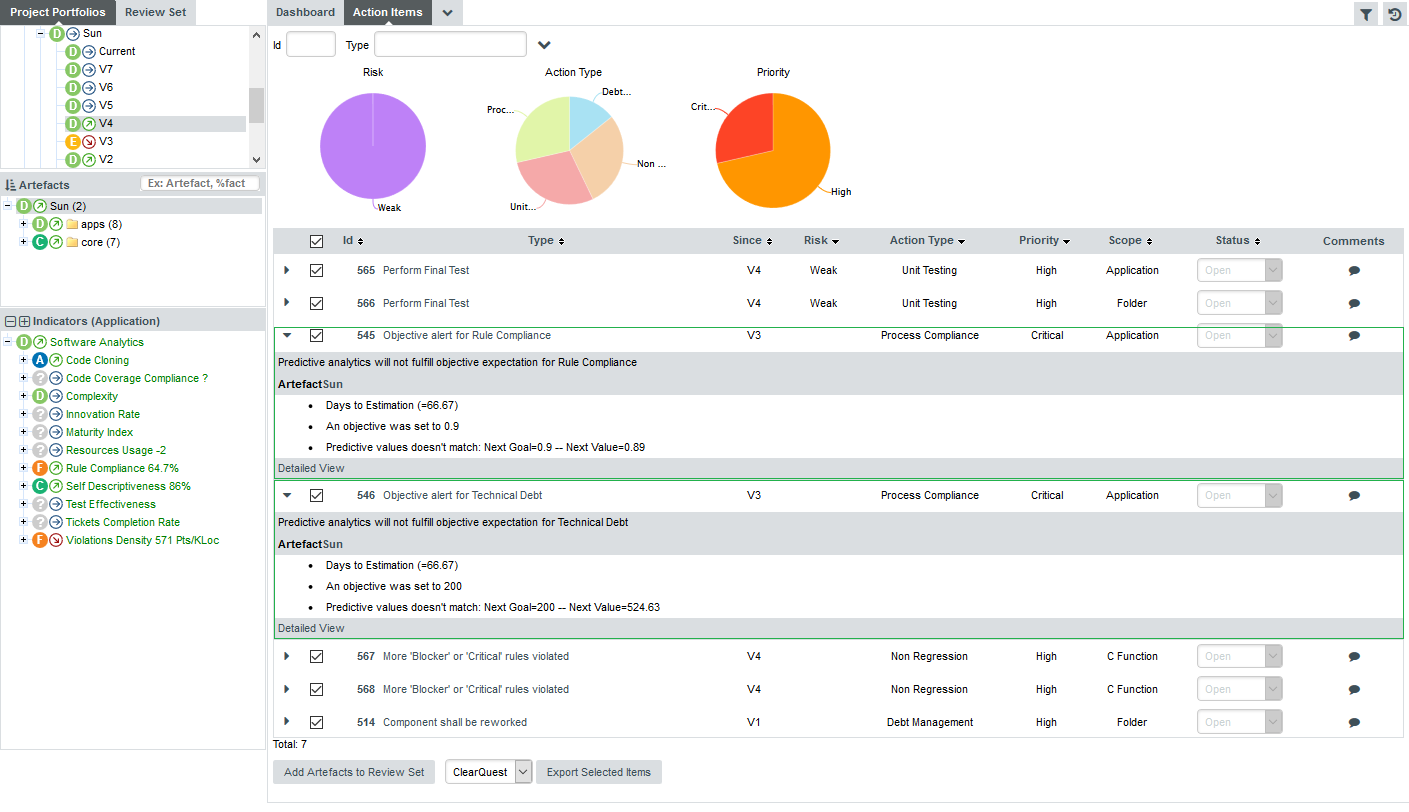

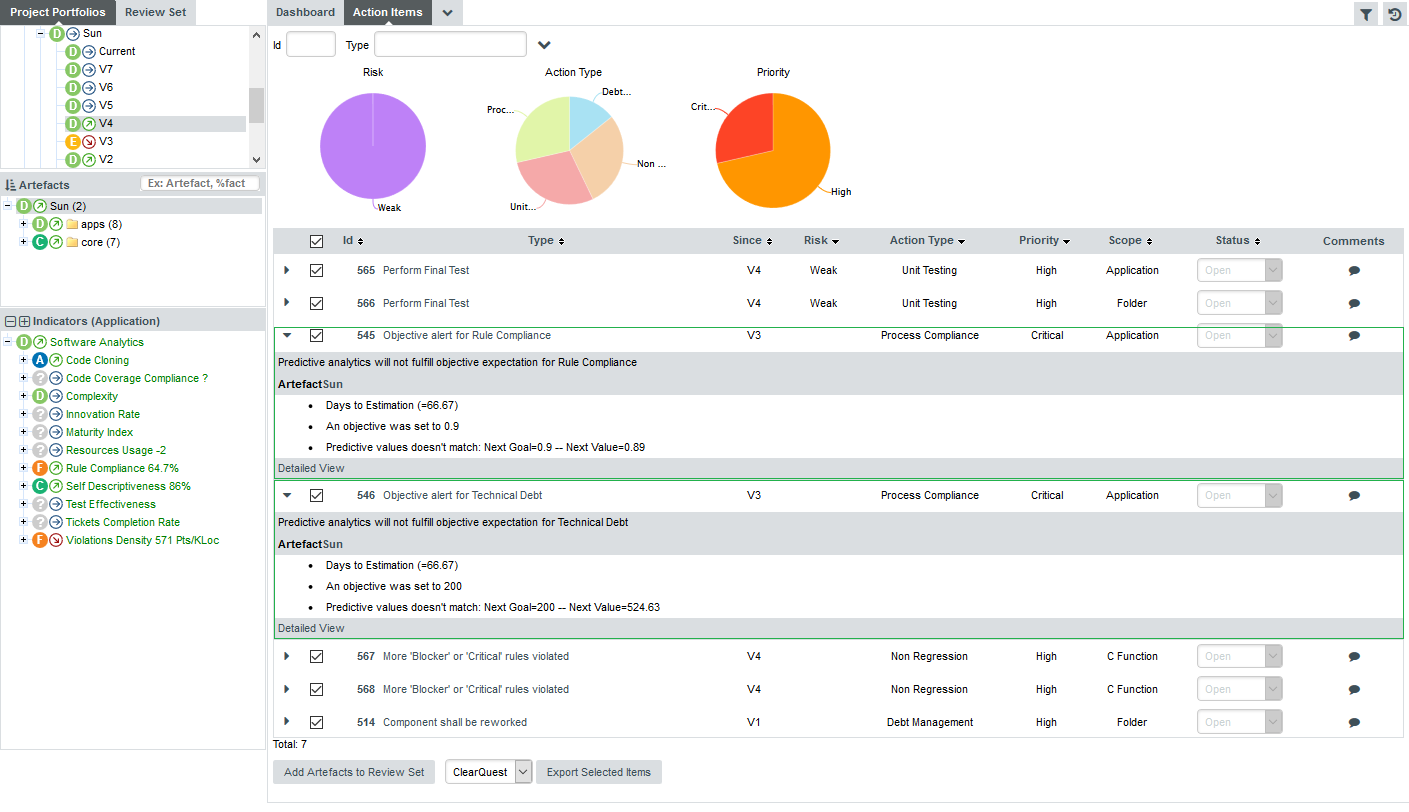

6. Managing Your To-Do List With Squore

The analysis results you obtained by creating your first projects in Creating Projects and Versions and observed in Understanding Analysis Results can be drilled down further by looking at the other tabs available in the Explorer. In this chapter, you will learn how to use the information contained in Indicators, Measures, Findings and Action Items to better understand and reuse the information provided by Squore in your development workflow.

How Do I Understand and Improve My Ratings?

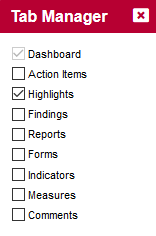

If you need more background information about the measures and indicators used in the charts and tables of the dashboard, the Indicators, Measures and Findings tabs provide more details about the statistics recorded for the current artefact. Note that these tabs are not displayed by default. To show them, click the Explorer Settings menu, then Manage Tabs, and enable these tabs in the Tab Manager, as shown below:

If you want to understand the scale used for a particular indicator, to see for example how close you are to moving up the rating scale, you can check the scale used for this indicator in the Indicators tab of the dashboard.

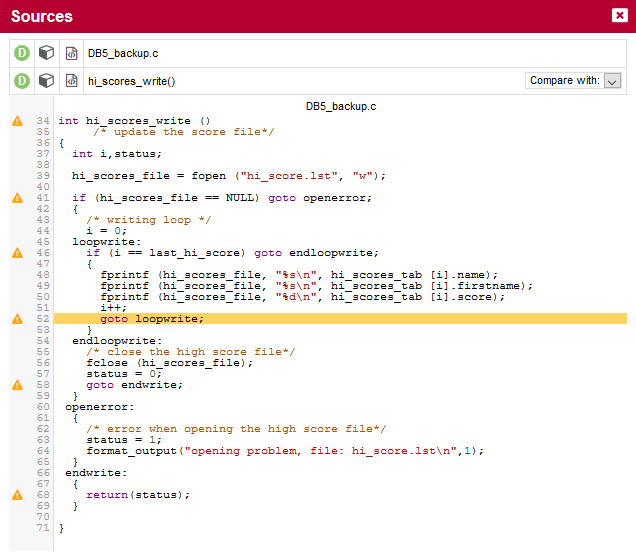

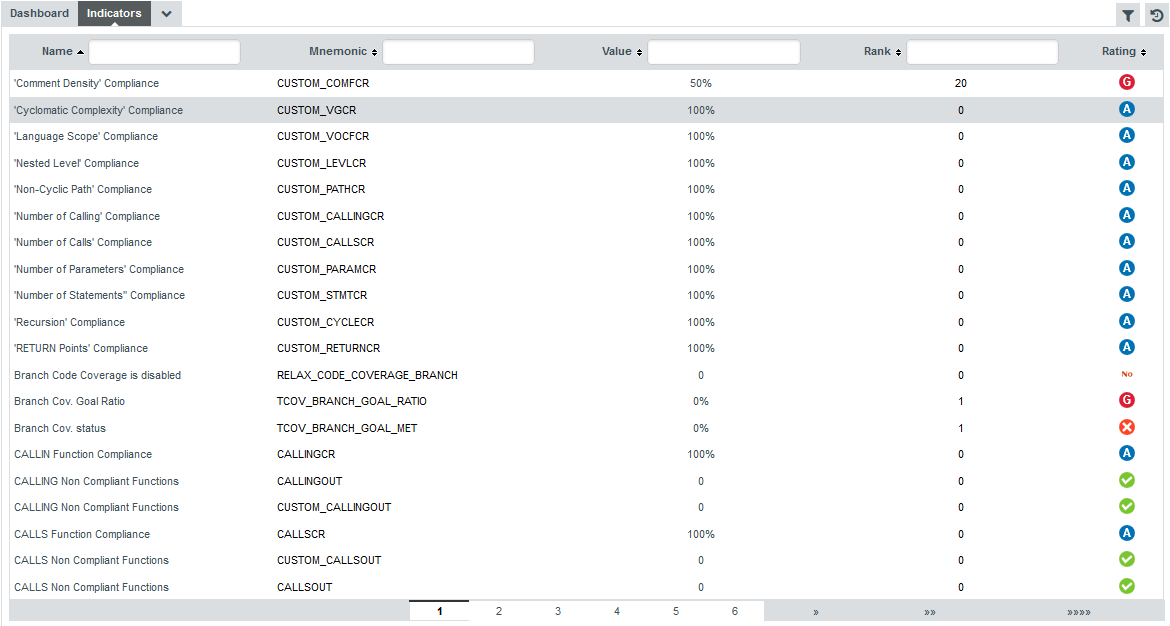

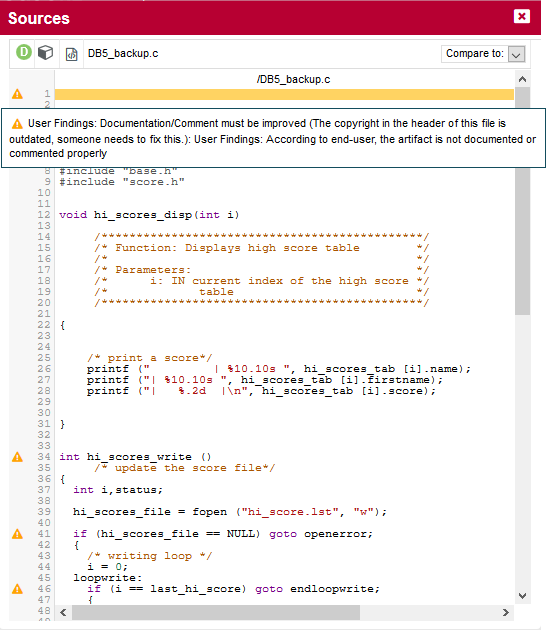

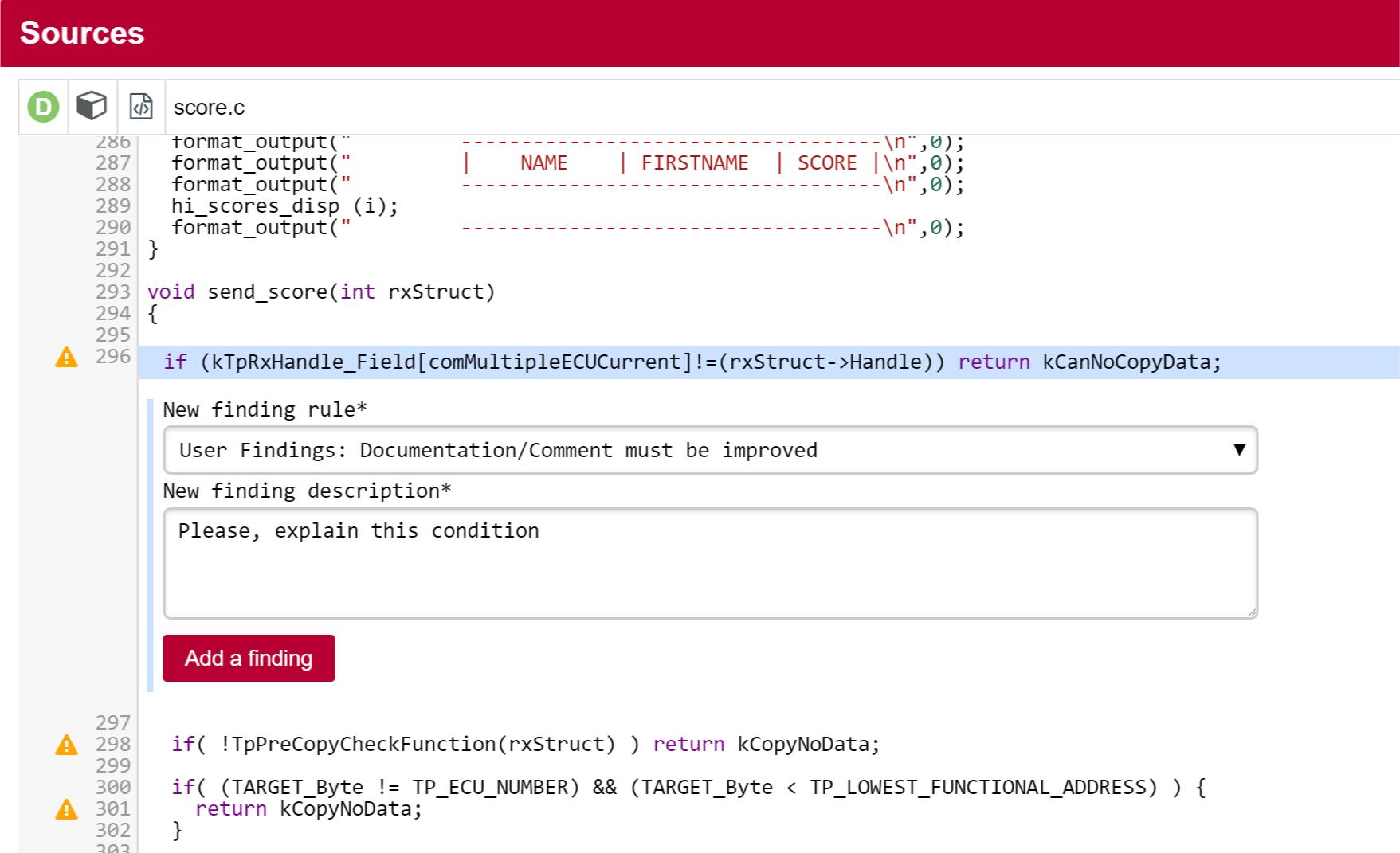

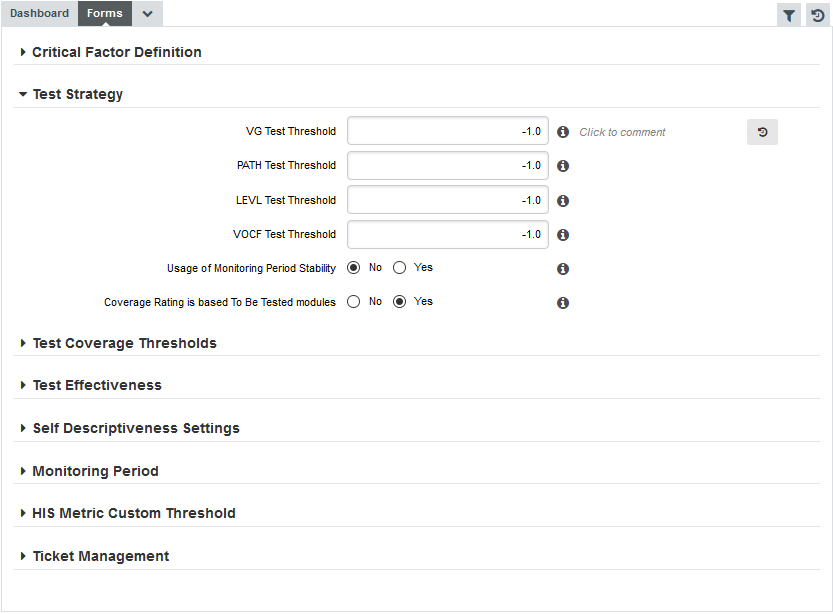

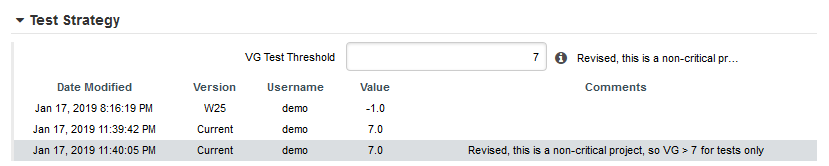

Log in and search for the artefact DB5_backup.c in the Neptune project, where the indicator Maintainability Non-Conformity is rated E. While this tells you the current rating of this artefact, it does not tell you how to improve it. To learn how to improve this rating, let’s first look at the scale used for this indicator. Click the Indicators tab of the Explorer. The table of indicators opens, as shown below:

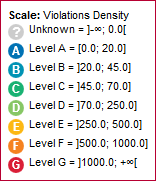

The table lists all the indicators available for the artefact over several pages. You can view the scale and levels available for an indicator in a tooltip by placing your mouse over a rating. Using the filter above the "name" column, look for the entry named Maintainability Non-Conformity, then click its value in the rating column. The scale shows that the artefact is rated E because the value of the indicator is 472.09. To be rated D, the value would need to decrease to under 250, as shown below:

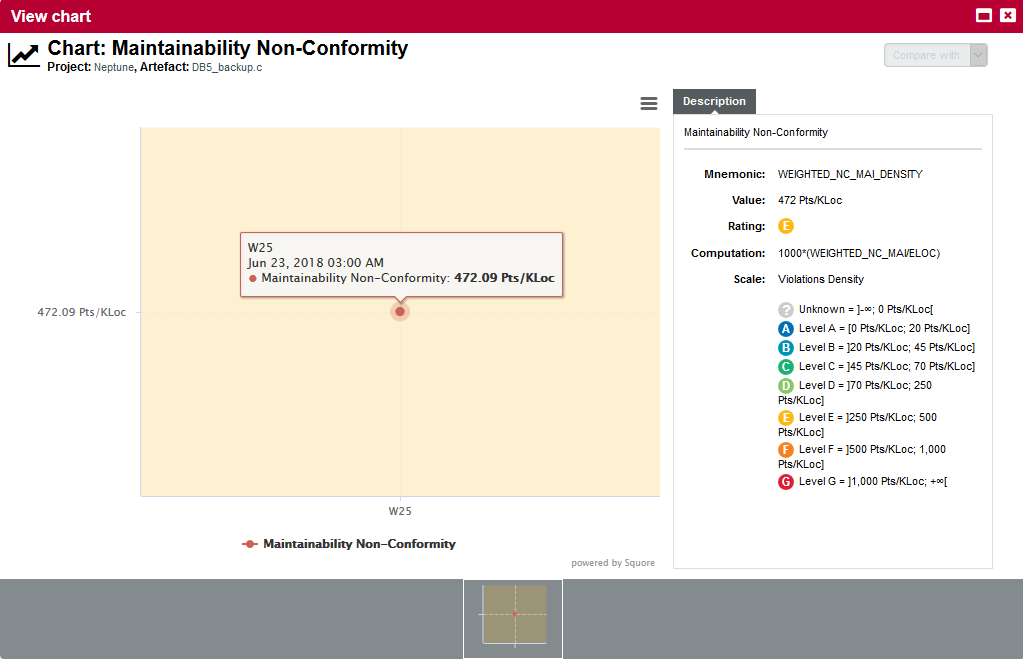

To understand how to improve the rating, you need to know how the indicator’s value is computed. Clicking the indicator name in the Indicator Tree shows the following explanation in the indicator popup:

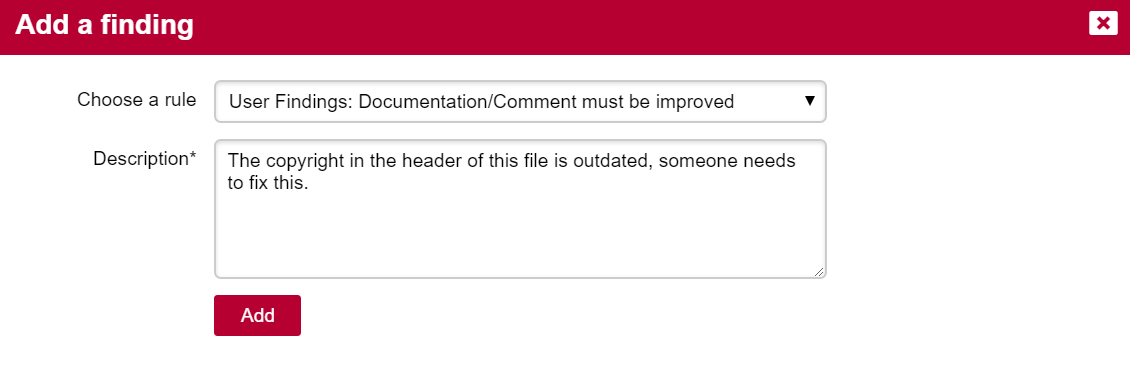

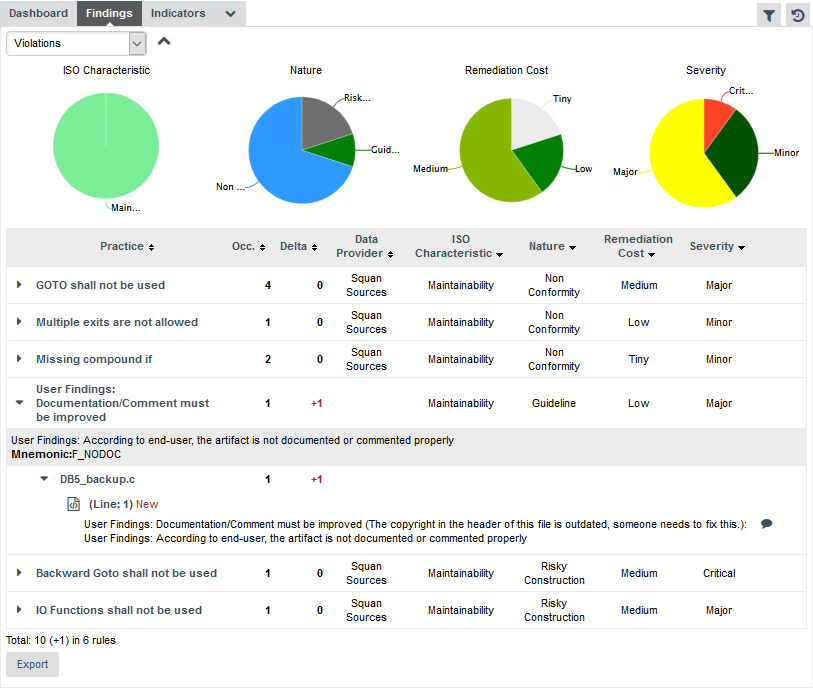

The computation, i.e. the formula used to calculate the indicator’s value, is 1000*(WEIGHTED_NC_MAI/ELOC), meaning that the indicator computes a weighted ratio of broken Maintainability rules per 1,000 ELOC. To find out what these rules are, click the Findings tab.
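The formula also tells you how far the weighted count of broken rules has to fall to reach a better level. The Python sketch below evaluates 1000*(WEIGHTED_NC_MAI/ELOC) and the WEIGHTED_NC_MAI value at which the indicator reaches a given threshold, assuming ELOC stays constant. The function names are hypothetical, and the ELOC and WEIGHTED_NC_MAI inputs are made-up values chosen only to reproduce the 472.09 shown in the scale; they are not the actual measures of DB5_backup.c.

```python
def maintainability_nc(weighted_nc_mai: float, eloc: float) -> float:
    """Indicator value, following the formula 1000*(WEIGHTED_NC_MAI/ELOC)."""
    return 1000 * (weighted_nc_mai / eloc)


def weighted_nc_limit(threshold: float, eloc: float) -> float:
    """WEIGHTED_NC_MAI value at which the indicator equals `threshold`
    for a fixed ELOC; the weighted count must stay below it."""
    return threshold * eloc / 1000


# Hypothetical inputs chosen to reproduce the value 472.09.
eloc = 500
weighted = 236.045
print(round(maintainability_nc(weighted, eloc), 2))  # 472.09
print(weighted_nc_limit(250, eloc))                   # 125.0
```

With these hypothetical inputs, the weighted count would have to drop from about 236 to below 125, roughly halving it, before the artefact is rated D.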

Squore displays all the findings for a particular artefact in a table in the Findings tab. Next to each finding’s label is its number of occurrences, followed by a colour-coded delta value (red for more occurrences, green for fewer) compared to a previous analysis.
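The colour-coded delta can be sketched in Python as a comparison of occurrence counts per rule between two analyses. The `finding_deltas` function and the occurrence counts used with it are hypothetical; rules missing from one analysis are assumed to have zero occurrences there, and the "neutral" colour for an unchanged count is an assumption, since the text above only describes red and green.

```python
from __future__ import annotations


def finding_deltas(reference: dict[str, int], current: dict[str, int]) -> dict[str, tuple[int, int, str]]:
    """For each rule, return (occurrences, delta, colour) versus the reference analysis."""
    result = {}
    for rule in sorted(set(reference) | set(current)):
        count = current.get(rule, 0)          # assumption: absent means 0 occurrences
        delta = count - reference.get(rule, 0)
        if delta > 0:
            colour = "red"      # more occurrences than before
        elif delta < 0:
            colour = "green"    # fewer occurrences than before
        else:
            colour = "neutral"  # assumption: no colour when unchanged
        result[rule] = (count, delta, colour)
    return result
```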

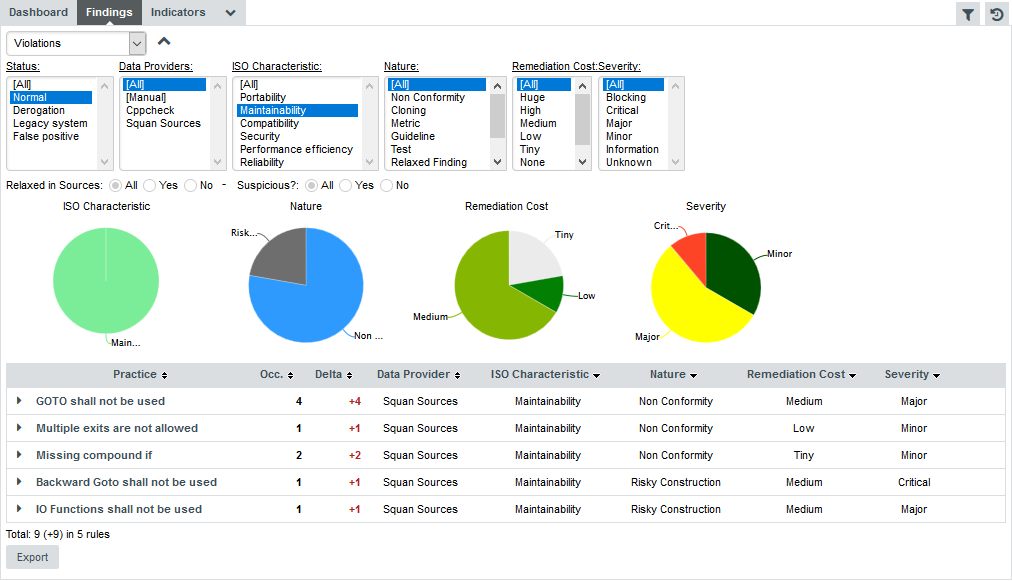

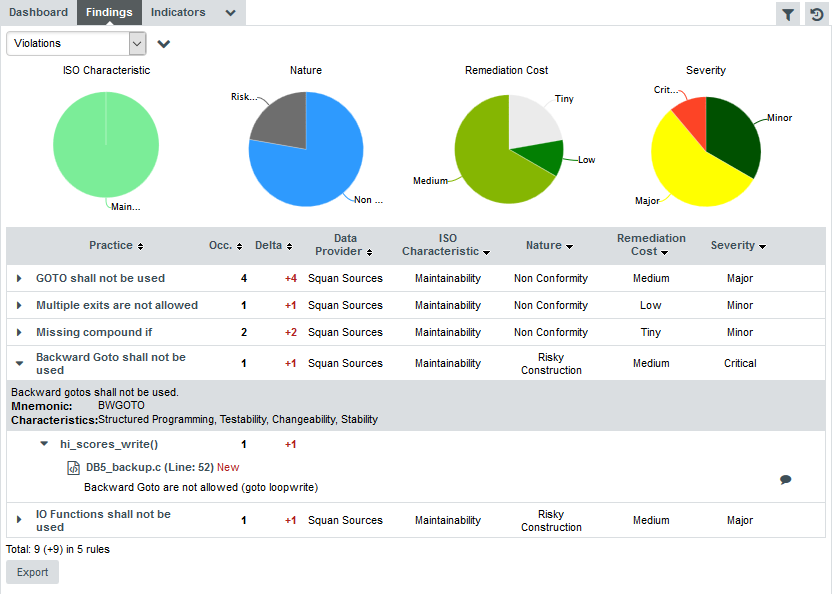

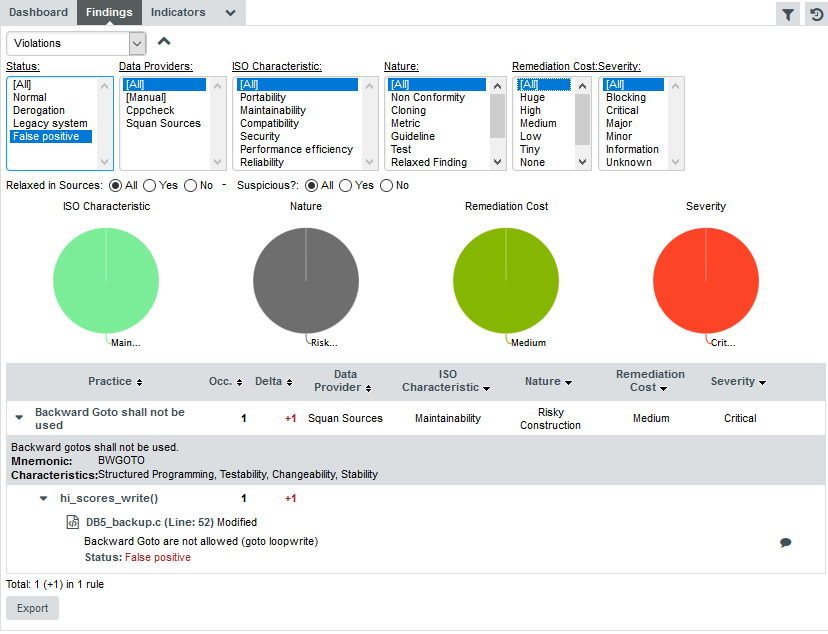

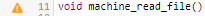

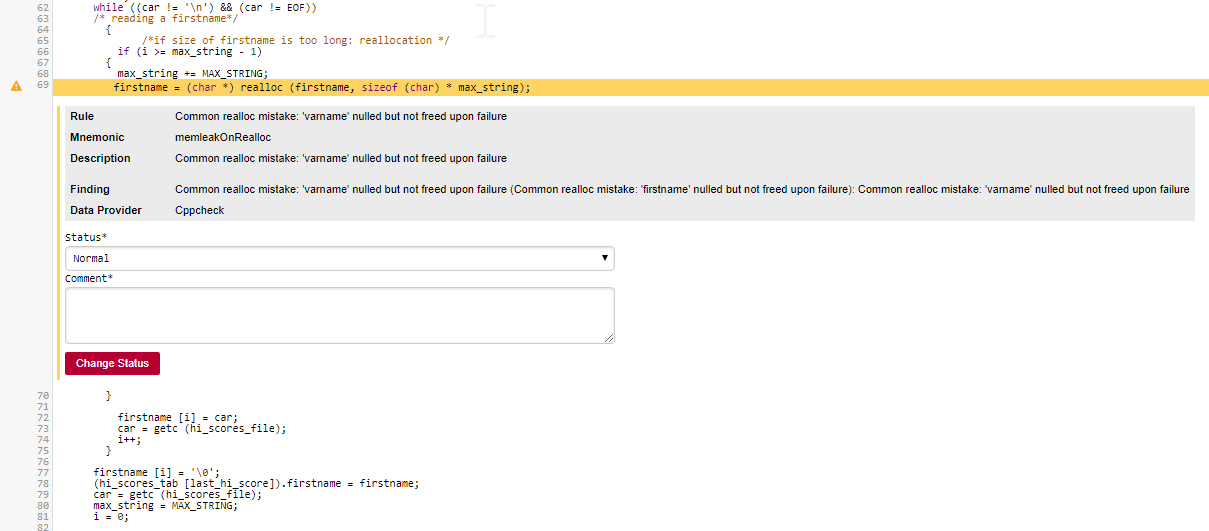

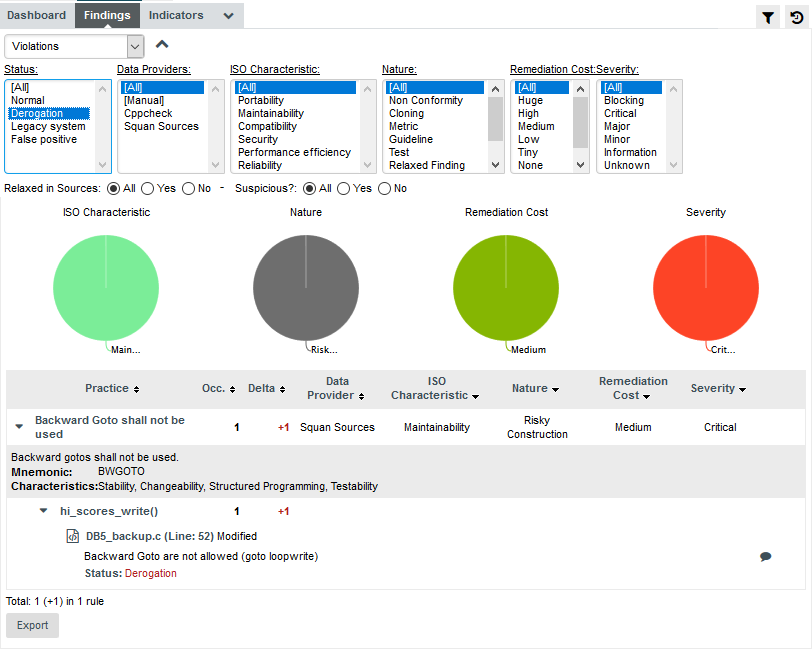

If you want to find out which rules are taken into account by the Maintainability Non-Conformity indicator, expand the filter to show the advanced filtering options. Highlight Maintainability in the ISO Characteristics filter to see the corresponding rules, as shown in the picture below:

|

You can filter violations according to many criteria, including relaxation status, origin, artefact type and other characteristics from the analysis model |

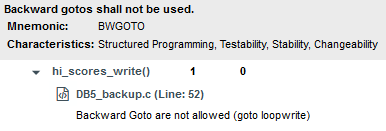

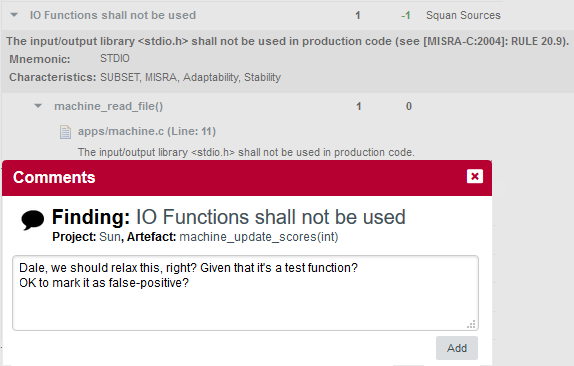

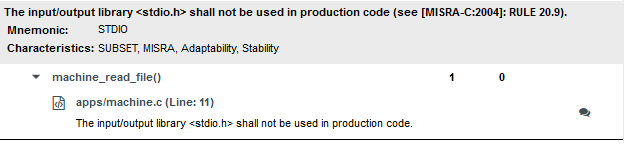

The rules BWGOTO, STDIO, NOGOTO, RETURN and COMPOUNDIF are the rules that should be fixed in order to improve the Maintainability rating of DB5_backup.c.

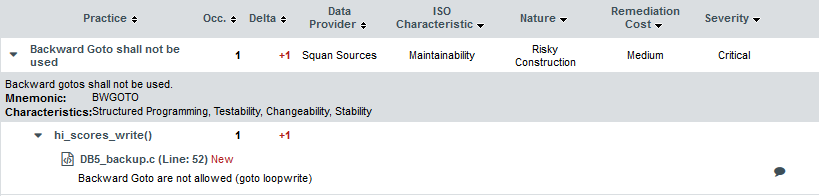

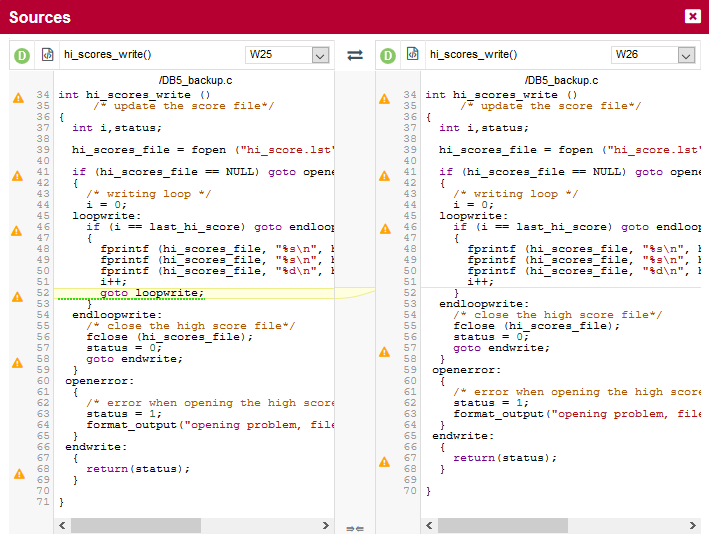

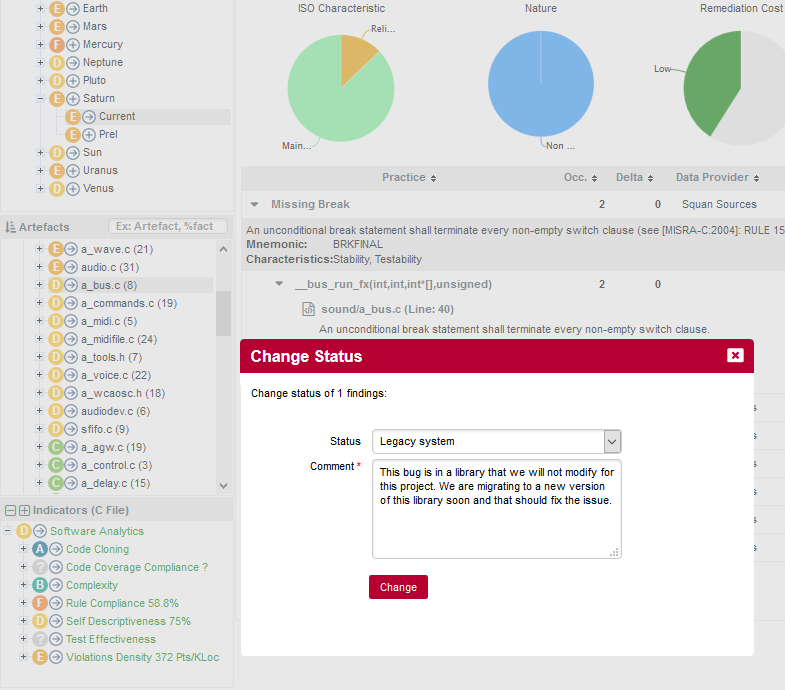

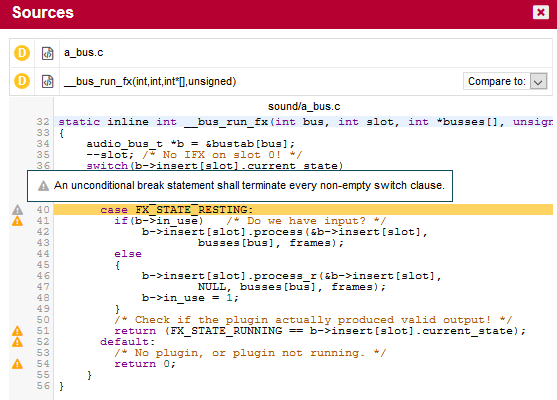

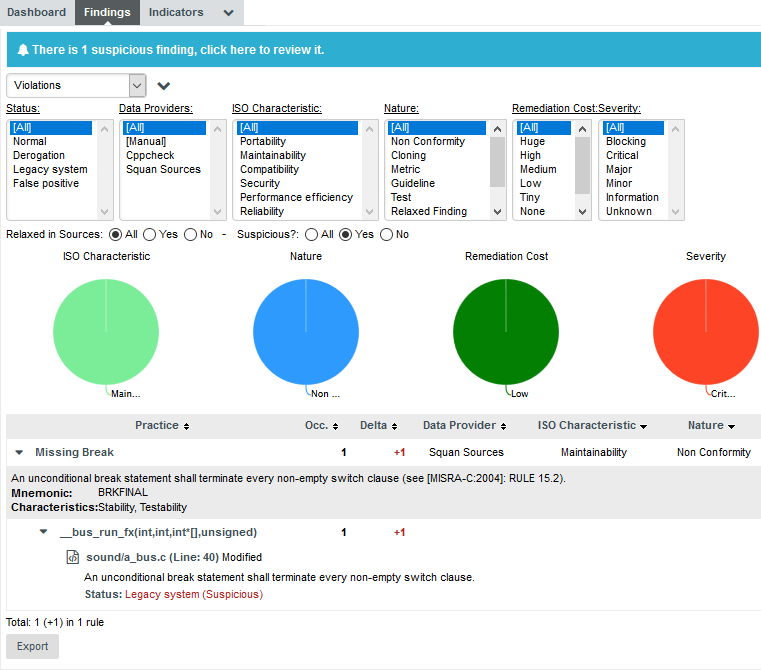

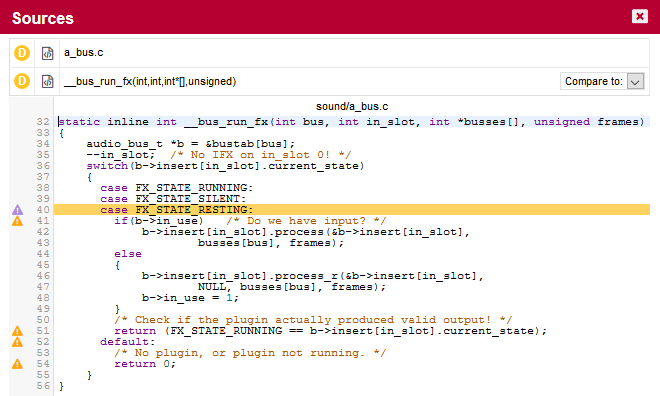

You can expand the BWGOTO rule to show each occurrence of the rule being broken, and also review the location in the source code that breaks the rule, as shown below:

|

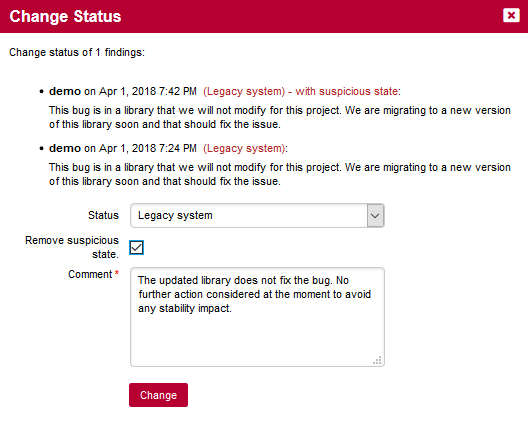

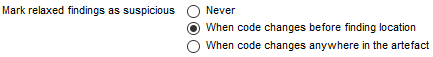

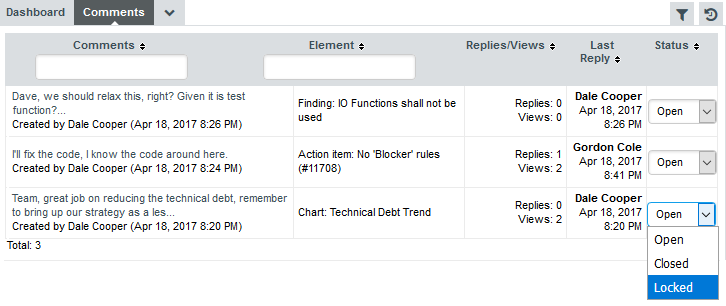

The list of findings indicates if a finding is New, Closed or Modified since the reference version. Findings are traceable through time, so even if your code is modified, you can go back to the version in which it was first detected. |
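The New, Closed and Modified statuses amount to comparing the findings of the current version with those of the reference version. The Python sketch below assumes that every finding has a stable identifier that survives code changes, plus a snapshot (for example its location) that changes when the finding is modified. Both the identifiers and the `classify_findings` function are hypothetical; this is not how Squore actually tracks findings.

```python
from __future__ import annotations


def classify_findings(reference: dict[str, str], current: dict[str, str]) -> dict[str, str]:
    """Map each finding ID to New, Closed or Modified.

    Keys are hypothetical stable finding IDs; values are a hypothetical
    snapshot of the finding (e.g. its location). Unchanged findings are
    left out of the result.
    """
    status = {}
    for fid, snapshot in current.items():
        if fid not in reference:
            status[fid] = "New"
        elif reference[fid] != snapshot:
            status[fid] = "Modified"
    for fid in reference:
        if fid not in current:
            status[fid] = "Closed"
    return status
```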